As demand for generative AI grows, the market for Large Language Models (LLMs) has become a vibrant but crowded ecosystem. Companies are eager to adopt these tools, but one question keeps coming up: how do you select the right LLM for your specific use case? Enter Arthur Bench, an open-source tool developed by Arthur, a machine learning monitoring startup. It aims to streamline model evaluation so that organizations can find the model best suited to their needs.

The Genesis of Arthur Bench

As generative AI products proliferate, Arthur’s CEO and co-founder, Adam Wenchel, pointed to a major gap: there is no structured way to measure and compare how well different models perform. Wenchel noted that, less than a year after ChatGPT’s launch, many businesses were still unsure which of the many available models to adopt.

Arthur Bench is the company’s answer to this problem. It lets users systematically test LLMs against their own data, evaluating performance on prompts tailored to their use case. As Wenchel put it, the key capability is measuring how different LLMs respond to the kinds of prompts a company’s own customers are likely to send.

How Arthur Bench Works

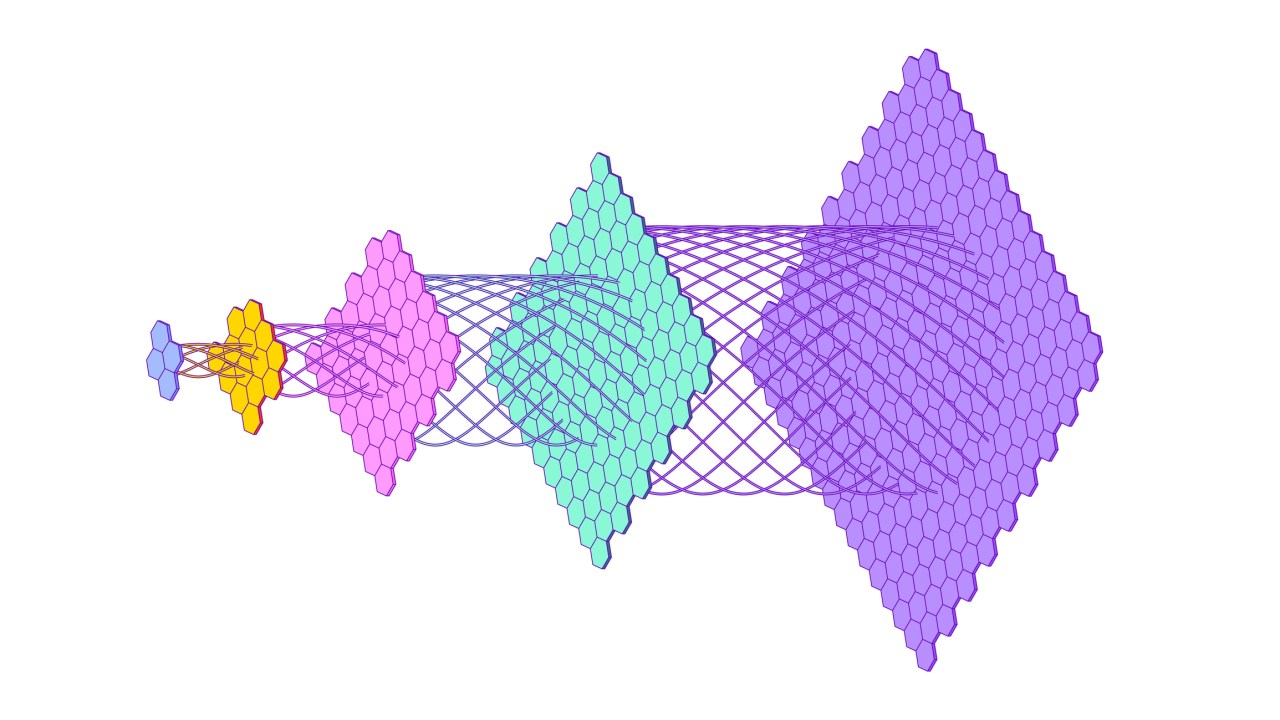

Arthur Bench provides a suite of tools designed for rigorous testing of LLM performance. Here’s a breakdown of its key features:

- Performance Testing: Users can run a set of prompts, up to 100 at a time, and compare how models from different providers, such as Anthropic or OpenAI, respond. This lets firms base their model choice on empirical results rather than guesswork.

- Scalability: Beyond one-off tests, the tool supports evaluating multiple models side by side, which makes it faster to iterate on and refine a model choice.

- Open Source Approach: Arthur Bench is released as open source, making it accessible to a broad audience. For businesses that want ease of use without the overhead of managing open-source software, Arthur also plans to offer a SaaS version.
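The comparison workflow described above (run the same prompts through several models, score each answer against a reference, then rank the models) can be illustrated with a minimal, self-contained Python sketch. This is not Arthur Bench's actual API: the names `EvalCase`, `normalize`, `exact_match_score`, and `compare_models` are hypothetical, each model's outputs are assumed to have been collected beforehand, and exact match is just one simple scoring method chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    """One prompt plus the answer we consider correct (hypothetical structure)."""
    prompt: str
    reference: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count."""
    return " ".join(text.lower().split())


def exact_match_score(references: list[str], candidates: list[str]) -> float:
    """Fraction of candidate outputs that exactly match their reference after normalization."""
    if len(references) != len(candidates):
        raise ValueError("each test case needs exactly one candidate output")
    if not references:
        return 0.0
    hits = sum(normalize(r) == normalize(c) for r, c in zip(references, candidates))
    return hits / len(references)


def compare_models(
    cases: list[EvalCase], outputs_by_model: dict[str, list[str]]
) -> list[tuple[str, float]]:
    """Score every model on the same test cases and return (model, score) pairs, best first."""
    references = [case.reference for case in cases]
    scores = {
        model: exact_match_score(references, outputs)
        for model, outputs in outputs_by_model.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    cases = [
        EvalCase("What year was FDR first elected president?", "1932"),
        EvalCase("What is the opposite of down?", "up"),
    ]
    # Assumed: outputs were already collected from each model (hypothetical model names).
    outputs = {
        "model_a": ["1932", "Up"],
        "model_b": ["1932", "The opposite of down is up."],
    }
    for model, score in compare_models(cases, outputs):
        print(f"{model}: {score:.2f}")
```

Running the script prints `model_a: 1.00` followed by `model_b: 0.50`. The second model's answer is correct but verbose, so strict exact matching penalizes it. This is why evaluation tools generally offer more flexible scoring methods than string equality.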

A Complementary Offering: Arthur Shield

Arthur is also expanding its lineup with Arthur Shield, a product released earlier this year. Shield acts as a firewall for LLMs: it is designed to detect problems such as hallucinations and to guard against toxic output and leaks of private data. Together, Bench and Shield give organizations tools both for choosing an LLM and for deploying it responsibly.

Conclusion

As the landscape of AI evolves, tools like Arthur Bench will be essential in helping organizations navigate the crowded field of LLMs. By providing an organized, systematic approach to model evaluation, Arthur is empowering companies to make data-driven decisions that enhance their AI applications. If you’re considering the integration of LLMs into your business strategy, exploring Arthur Bench could be the key to unlocking the full potential of your AI initiatives.

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.