Robotics has taken a notable step forward: researchers at Google AI, working with UC Berkeley, have developed a way to improve the agility of quadrupedal robots. Impressive as these machines are, they still lag behind their biological counterparts, in this case dogs, whose natural agility and instinctive movements are difficult to reproduce in a robot. How can that gap be narrowed? The researchers' answer combines imitation of real animal motion with deliberately injected randomness during training, an approach that makes learned gaits far more likely to work on physical hardware.

The Quest for Agile Movements

The goal of the research was straightforward: automatically transfer agile behaviors, such as a dog's light-footed trot, to a legged robot. Traditional ways of teaching robots such skills are laborious. They often require expert knowledge and time-consuming reward tuning for each new behavior, which makes them both inefficient and hard to scale. Google's strategy instead embraces controlled randomness to make the learned skills more robust.

From Canine Movements to Robotic Gaits

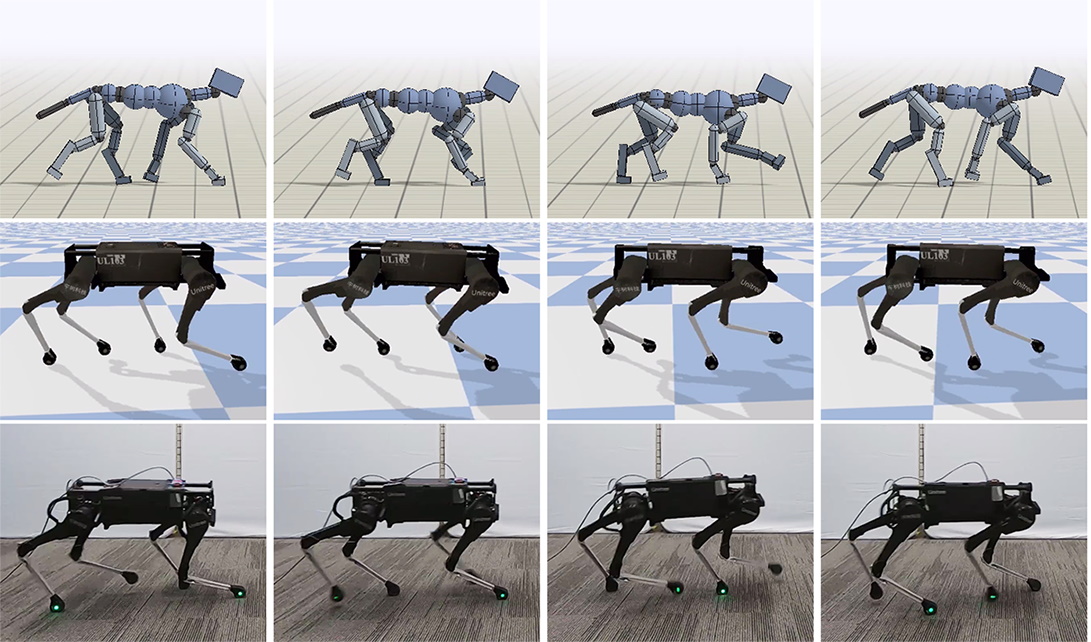

In this approach, a dog's movements are first recorded with motion capture, and key features such as joint positions are mapped onto a digital model of the robot. A controller is then trained in simulation to reproduce the dog's gait with that model. The hard part, as anyone working in robotics can attest, is transferring the result from simulation to a physical robot: mismatches between simulated and real dynamics, such as inaccurately modeled friction or unmodeled physical effects, can cause a robot to stumble or even fall.
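To make the imitation step concrete, here is a minimal Python sketch of how a simulated controller could be scored against the recorded dog motion. It assumes the reference clip is a list of joint-angle frames and uses an exponential pose-tracking reward, a form common in motion-imitation work. The function names, the nearest-frame lookup, and the `scale` constant are hypothetical illustrations, not the study's exact formulation.

```python
import math
from typing import Sequence


def reference_pose(clip: Sequence[Sequence[float]], t: float, frame_dt: float) -> Sequence[float]:
    """Return the motion-capture frame for time t (seconds).

    Hypothetical helper: nearest-frame lookup without interpolation,
    looping the clip so a short gait cycle can be imitated indefinitely.
    """
    index = int(t / frame_dt) % len(clip)
    return clip[index]


def pose_tracking_reward(
    reference_angles: Sequence[float],
    robot_angles: Sequence[float],
    scale: float = 5.0,  # hypothetical tuning constant
) -> float:
    """Reward in (0, 1]: 1.0 for a perfect match with the reference pose,
    decaying exponentially as the summed squared joint-angle error grows."""
    if len(reference_angles) != len(robot_angles):
        raise ValueError("pose vectors must have the same number of joints")
    squared_error = sum((ref - cur) ** 2 for ref, cur in zip(reference_angles, robot_angles))
    return math.exp(-scale * squared_error)
```

A training loop would call `reference_pose` at each simulation step and pass the resulting reward to a reinforcement-learning algorithm. The exponential keeps the reward bounded: small tracking errors are penalized gently, while large ones drive the reward toward zero.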

Injecting Randomness for Robust Learning

This is the crux of the Google AI work. By deliberately varying simulation parameters such as the robot's mass, motor strength, and ground friction, the researchers exposed the learning controller to a wide range of conditions rather than a single idealized one. Because the controller has to perform well across all of these variations, it becomes robust to the discrepancies it will encounter on real hardware, which makes the transfer from simulation to the physical robot far more reliable.
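This technique is commonly known as domain randomization. The Python sketch below shows its core loop under stated assumptions: the parameter ranges are invented for illustration, and `run_episode` is a hypothetical stand-in for whatever simulator and learning update a real system would use. A fresh set of physics parameters is drawn before every episode, so the policy never trains in a single fixed world.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PhysicsParams:
    mass_scale: float       # multiplier on the robot's nominal mass
    motor_strength: float   # multiplier on nominal motor torque
    ground_friction: float  # friction coefficient of the floor


# Hypothetical ranges chosen for illustration only; not values from the study.
RANGES = {
    "mass_scale": (0.8, 1.2),
    "motor_strength": (0.8, 1.2),
    "ground_friction": (0.4, 1.25),
}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw one set of simulation parameters uniformly from RANGES."""
    return PhysicsParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def train(run_episode: Callable[[PhysicsParams], float], num_episodes: int, seed: int = 0) -> list[float]:
    """Run num_episodes episodes, each under freshly randomized physics.

    run_episode is assumed to configure the simulator with the given
    parameters, run one episode of learning, and return the episode return.
    """
    rng = random.Random(seed)
    returns = []
    for _ in range(num_episodes):
        params = sample_params(rng)          # new physics every episode
        returns.append(run_episode(params))  # simulate and update under these physics
    return returns
```

For example, `train(lambda p: 0.0, num_episodes=100)` would run 100 episodes, each with different mass, motor strength, and friction values. Seeding the generator keeps experiments reproducible while still varying conditions from one episode to the next.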

Self-Learning Robots: A Leap Forward

Google AI's work also extends to a related idea: letting robots learn to walk on their own while respecting predefined constraints. In a collaboration with UC Berkeley and the Georgia Institute of Technology, robots acquired locomotion skills with minimal human assistance. Beyond learning to walk, they adapted to their surroundings, staying out of designated areas and righting themselves after a fall.
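The article does not spell out how the robots stayed in bounds or recovered from falls. One plausible way to structure such logic, sketched here in Python purely under our own assumptions, is a supervisor that hands control to a stand-up routine after a fall and otherwise flips the walking direction whenever the robot nears the edge of its training area. The task names, thresholds, and one-dimensional track are hypothetical simplifications, not details from the study.

```python
import math
from dataclasses import dataclass

# Hypothetical values for illustration only; not taken from the study.
BOUNDARY_X = 1.6               # metres from the track centre at which the task flips
FALL_ANGLE = math.radians(60)  # body tilt beyond which the robot counts as fallen


@dataclass
class RobotState:
    x: float      # position along the training track, metres (0 = centre)
    roll: float   # body roll, radians
    pitch: float  # body pitch, radians


def has_fallen(state: RobotState) -> bool:
    """Treat a large roll or pitch as a fall."""
    return abs(state.roll) > FALL_ANGLE or abs(state.pitch) > FALL_ANGLE


def choose_task(x: float, current_task: str) -> str:
    """Switch walking direction near either edge so the robot heads back inward."""
    if x > BOUNDARY_X:
        return "walk_backward"
    if x < -BOUNDARY_X:
        return "walk_forward"
    return current_task


def next_action(state: RobotState, current_task: str) -> str:
    """Recover from a fall first; otherwise continue the boundary-aware task."""
    if has_fallen(state):
        return "stand_up"
    return choose_task(state.x, current_task)
```

Because the supervisor only selects which skill to practise next, learning can continue for long stretches without a person repositioning or picking up the robot.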

The Future of Robotics: What Lies Ahead?

This research opens the way to further advances in robotic locomotion. As robots become better at learning from experience and moving with animal-like agility, potential applications range from delivery systems to exploration of complex outdoor terrain. And as these models are refined, the scope for automating demanding physical tasks across many fields will continue to grow.

Conclusion: A Step Toward Innovation

The advances discussed here are more than technical achievements; they mark a shift in how we approach robotics. By learning from nature and accounting for the unpredictability of the real world, we can design machines that not only mimic animal behavior but also operate reliably in diverse environments. At fxis.ai, we believe such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team continually explores new methodologies to push the boundaries of artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.