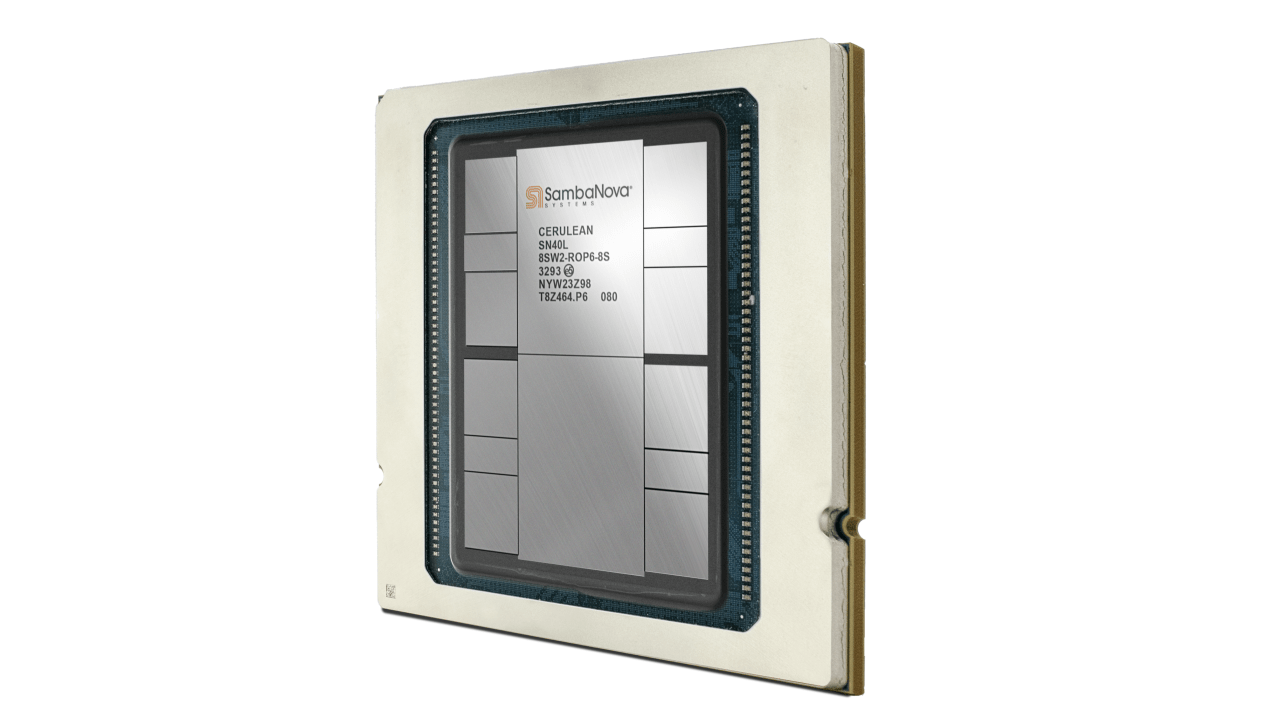

In the ever-evolving landscape of artificial intelligence, the buzz around generative AI and large language models has reached an unprecedented level, ignited primarily by OpenAI’s release of ChatGPT. However, the hardware that powers these innovations remains an area of continuous refinement and investment. Enter SambaNova, a company that has quietly stepped into the spotlight with its newly unveiled SN40L chip, which is designed to cut costs dramatically and to handle a model with as many as 5 trillion parameters.

A New Standard in AI Hardware

While SambaNova may not yet be a name synonymous with giants like Google or Microsoft, its ambitions are significant. The company has raised over $1 billion in funding, with major backers including Intel Capital and SoftBank Vision Fund. What sets SambaNova apart is its commitment to a full-stack solution that spans both hardware and software, with an emphasis on efficiency and proprietary control.

Rodrigo Liang, co-founder and CEO of SambaNova, wants to replace the “brute force” approach that many companies take today: throwing an overwhelming number of chips at complex models. Liang claims the SN40L changes that approach by condensing a workload that would traditionally require up to 200 chips into just eight sockets. If the claim holds, a reduction of that size could reshape how enterprises approach large-scale AI deployments.
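To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. The 200-chip, eight-socket, and 5-trillion-parameter numbers come from the claims above; the 2 bytes per parameter (16-bit weights) and the even split of one model across eight sockets are assumptions made purely for illustration, not published SambaNova specifications. The helper function names are hypothetical.

```python
# Back-of-the-envelope arithmetic for the figures quoted in this article.
# ASSUMPTIONS (not from SambaNova): 16-bit weights (2 bytes per parameter)
# and a single model spread evenly across all eight sockets.

def consolidation_factor(chips_before: int, sockets_after: int) -> float:
    """How many times fewer devices the claimed setup would need."""
    return chips_before / sockets_after


def weight_footprint_terabytes(num_parameters: int, bytes_per_parameter: int) -> float:
    """Memory needed just to hold the model weights, in terabytes (10**12 bytes)."""
    return num_parameters * bytes_per_parameter / 1e12


CHIPS_BEFORE = 200                    # "up to 200 chips" (from the article)
SOCKETS_AFTER = 8                     # "eight sockets" (from the article)
NUM_PARAMETERS = 5_000_000_000_000    # 5 trillion parameters (from the article)
BYTES_PER_PARAMETER = 2               # assumed 16-bit precision

factor = consolidation_factor(CHIPS_BEFORE, SOCKETS_AFTER)
footprint_tb = weight_footprint_terabytes(NUM_PARAMETERS, BYTES_PER_PARAMETER)
per_socket_tb = footprint_tb / SOCKETS_AFTER

print(f"Device reduction: {factor:.0f}x")                  # Device reduction: 25x
print(f"Weights alone: {footprint_tb:.1f} TB")             # Weights alone: 10.0 TB
print(f"Per socket (even split): {per_socket_tb:.2f} TB")  # Per socket (even split): 1.25 TB
```

Under these assumptions, the weights alone would occupy about 10 TB, which hints at why memory capacity per socket, and not only raw compute, matters when serving models of this size.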

The Science Behind SN40L

One standout feature of the SN40L is its adaptability. It is designed specifically for massive language models, yet it remains compatible with earlier chip generations. That compatibility matters for companies that want to transition gradually without incurring exorbitant costs. Liang states, “I can deliver fully optimized on that hardware, and you get state-of-the-art accuracy.” In other words, SambaNova’s pitch is high performance without the uncertainty or high operating costs typically associated with AI infrastructure.

- Resource Efficiency: The SN40L is reportedly 30 times more efficient than competing chips, a notable claim for workloads that previously required an overwhelming amount of hardware.

- Model Ownership: SambaNova emphasizes that once a model is trained on a customer’s data using these chips, ownership of the model remains entirely with the customer, allowing for better data governance.

- Subscription Flexibility: SambaNova offers a multi-year subscription model, which gives customers greater financial predictability without sacrificing performance.

Real-World Applications

The implications of deploying SambaNova’s SN40L chip are enormous for industries that rely heavily on AI. Healthcare, finance, and retail can all leverage the new capabilities to process vast amounts of data while maintaining high accuracy. In sectors where AI modeling can enable better decision-making, such as supply chain optimization, this innovation stands to yield both operational benefits and cost savings.

In particular, for organizations struggling with the complexities of training large language models, the SN40L offers a simpler path. They can quickly create bespoke models suited to their proprietary data, turning that data into actionable AI assets.

Conclusion: The Future of AI Infrastructure

SambaNova’s SN40L chip marks a notable moment in the evolution of AI hardware. As industries continue to adopt large language models, the need for efficient and cost-effective solutions will only grow. SambaNova is not just another player in the game; it aims to reshape how enterprises interact with AI technologies in the future.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.
