As artificial intelligence takes on a larger role in moderating what people say online, ensuring equitable outcomes becomes increasingly pressing. Hate speech detection is a case in point: the task may seem straightforward, but it is fraught with complexities rooted in cultural nuance and social context. Recent research from the University of Washington has found racial bias in AI-driven hate speech detection systems, particularly in how they treat African American English (AAE). This post explores the implications of these biases and what they mean for the future of AI.

The Reckoning with Language

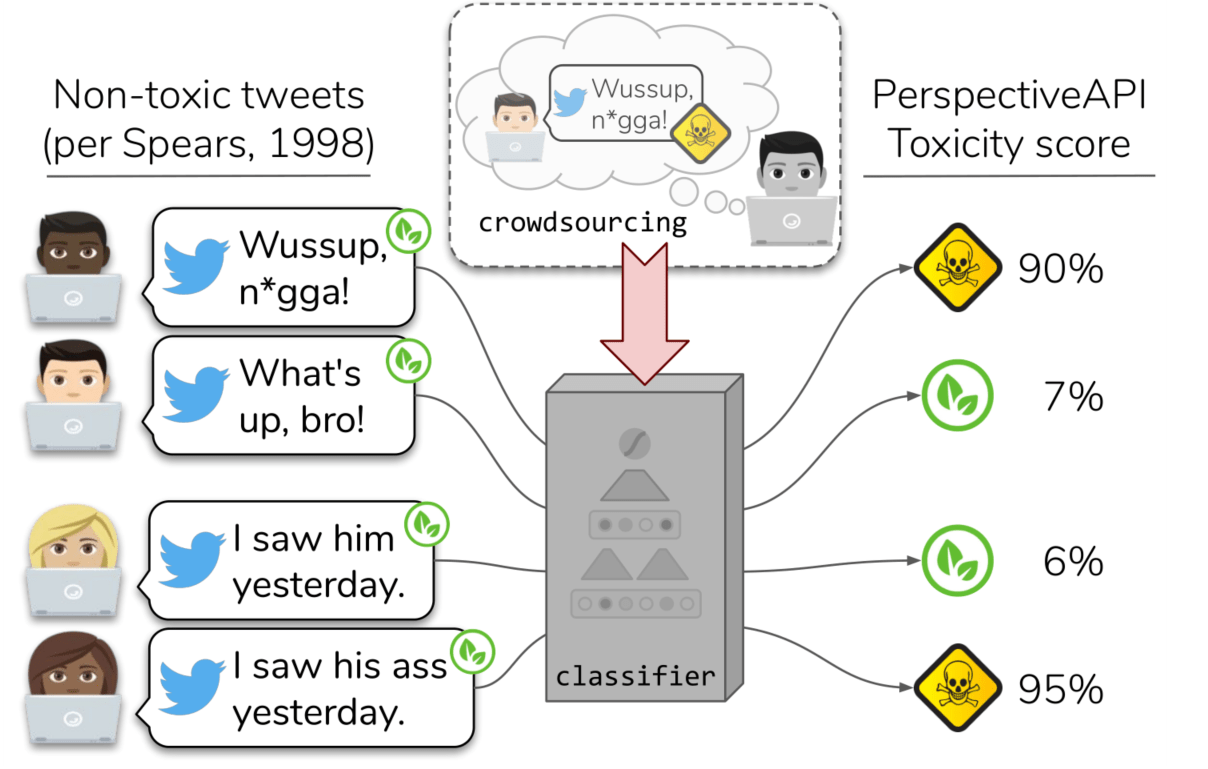

Language is inherently subjective, and judging offensiveness is a multifaceted endeavor. AI models, including those developed by Jigsaw (an Alphabet subsidiary), classify language algorithmically, but they can misread text when they miss its linguistic and sociocultural context. The study found that slang deeply rooted in African American vernacular is frequently mislabeled as toxic, raising questions about how AI learns from datasets that may not represent diverse speech patterns.

Unpacking the Research Findings

The researchers evaluated thousands of tweets annotated with descriptors such as “hateful” and “offensive.” Their analysis revealed a worrying correlation between the dialect used and its classification:

- African American English expressions were labeled as offensive significantly more often than white-aligned speech.

- The association between black vernacular and negative labels was strong and held across several datasets.

This correlation suggests a systematic bias in the datasets that AI models are trained on, one that perpetuates harmful stereotypes and misrepresents the richness of African American culture. Notably, when annotators were made aware that a tweet originated from a black speaker, they were less likely to label it as offensive, which suggests that context strongly affects how speech is perceived.
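The kind of comparison described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: it assumes each annotated tweet already carries a dialect tag, and the function name, the tag values (`aae`, `white_aligned`), and the toy rows are all made up for the example.

```python
from collections import Counter


def offensive_rate_by_dialect(rows):
    """Return {dialect: share of rows labeled 'offensive'}.

    `rows` is an iterable of (dialect, label) pairs. Both the dialect
    tags and the label names are hypothetical, chosen for illustration.
    """
    totals = Counter()
    flagged = Counter()
    for dialect, label in rows:
        totals[dialect] += 1
        if label == "offensive":
            flagged[dialect] += 1
    return {dialect: flagged[dialect] / totals[dialect] for dialect in totals}


# Made-up toy annotations, NOT data from the study.
toy_rows = [
    ("aae", "offensive"),
    ("aae", "offensive"),
    ("aae", "none"),
    ("aae", "offensive"),
    ("white_aligned", "offensive"),
    ("white_aligned", "none"),
    ("white_aligned", "none"),
    ("white_aligned", "none"),
]

rates = offensive_rate_by_dialect(toy_rows)

# Ratio of label rates: values well above 1 mean one dialect is
# labeled offensive far more often than the other.
disparity = rates["aae"] / rates["white_aligned"]
print(rates, disparity)
```

A ratio like this only shows that labels and dialect are correlated in a dataset. It does not by itself say why, which is where the annotator experiment mentioned above comes in.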

The Implications for AI Development

This research is a reminder of the importance of inclusive practices when developing AI systems. Algorithms trained on biased data can inadvertently reinforce existing societal prejudices. As AI becomes an integral part of moderating online discourse, leaving entrenched biases unaddressed risks having speech from marginalized communities flagged as toxic more often than comparable speech from other groups. Maarten Sap, lead author of the study, highlighted the need for collaboration between AI developers and social scientists to create more accurate models and datasets.

Looking Forward: What Needs to Change?

The findings of this study call for a reevaluation of hate speech detection methodologies:

- Inclusion of Diverse Linguistic Samples: Training datasets must incorporate a broad spectrum of vernaculars and dialects to provide a comprehensive view of language use across different communities.

- Contextual Awareness: AI models should be designed to consider the context in which speech occurs, including the identity and experiences of the speakers.

- Engagement with Social Sciences: By partnering with experts in linguistics, sociology, and psychology, AI developers can better understand how different cultures interpret language and offense.
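One way to check whether changes like these are helping is to audit a classifier's false positive rate per group, that is, how often benign text from each group is wrongly flagged. The sketch below is a hypothetical audit helper, not a method taken from the study: the function name, the group names, and the toy examples are assumptions made for illustration.

```python
def false_positive_rate_by_group(examples):
    """Return {group: share of benign texts the model flagged as toxic}.

    `examples` is an iterable of (group, gold_is_toxic, predicted_toxic)
    triples. This layout is an assumption for the sketch, not a standard
    dataset format.
    """
    benign = {}
    wrongly_flagged = {}
    for group, gold_is_toxic, predicted_toxic in examples:
        if gold_is_toxic:
            continue  # only benign texts can be false positives
        benign[group] = benign.get(group, 0) + 1
        if predicted_toxic:
            wrongly_flagged[group] = wrongly_flagged.get(group, 0) + 1
    return {g: wrongly_flagged.get(g, 0) / n for g, n in benign.items()}


# Made-up toy predictions, NOT real model output.
toy_examples = [
    ("group_a", False, True),
    ("group_a", False, True),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, True),   # truly toxic, ignored by the audit
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, False),  # truly toxic, ignored by the audit
]

fpr = false_positive_rate_by_group(toy_examples)

# A gap near zero means benign speech is wrongly flagged at similar
# rates across groups; a large gap points to a disparity.
gap = fpr["group_a"] - fpr["group_b"]
print(fpr, gap)
```

An audit like this depends on trustworthy gold labels, which is exactly what the study calls into question, so it works best alongside the dataset and annotation changes listed above.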

Conclusion

The road ahead for AI in hate speech detection is laden with obstacles, but it is also ripe with opportunities for transformation. With intelligent and inclusive approaches, we can mitigate bias and improve the fairness of AI technologies. As we continue to unravel the complexities of human language, collaboration across disciplines will be crucial in building more effective and equitable AI solutions.
