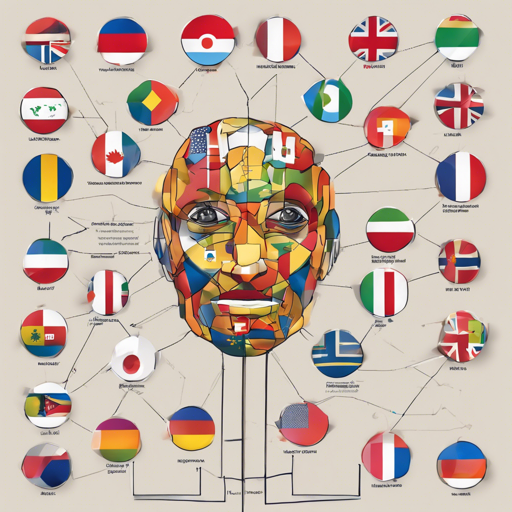

In natural language processing, BERT has become a workhorse model, especially for multilingual tasks. Today we look at the smaller versions of bert-base-multilingual-cased, which handle a custom number of languages. For the languages they keep, these versions produce exactly the same representations as the original model, so they preserve its accuracy. Here we focus on bert-base-pt-cased, the version that keeps only Portuguese. Let’s make the most of this compact and efficient model!
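Why does dropping languages make the model noticeably smaller? Most of the savings come from the token-embedding matrix, whose parameter count is the vocabulary size $|V|$ times the hidden size $d$. For bert-base-multilingual-cased, with $|V| = 119{,}547$ and $d = 768$ in its published configuration, our own quick count gives:

$$
P_{\text{tok}} = |V| \cdot d = 119{,}547 \times 768 = 91{,}812{,}096 \approx 91.8\text{M}
$$

That is roughly half of the model's approximately 178M parameters. Keeping a reduced vocabulary $V' \subset V$ saves $(|V| - |V'|) \cdot d$ parameters, while, as we understand the approach, the 12 Transformer layers are left untouched.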

Understanding the Code

Below is a snippet that loads the bert-base-pt-cased model with the Hugging Face transformers library for Python. To illustrate the process, think of it as ordering a specific dish at a restaurant:

- AutoTokenizer – This is like your waiter, who describes each dish and helps you pick the one that suits your taste (your language). In practice, it splits raw text into the sub-word tokens the model knows and converts them into token IDs: the ingredients the model needs.

- AutoModel – Once the dish (the model) is chosen, the chef (the network) gets cooking, turning those ingredients (the token IDs) into the outputs you want: a contextual embedding for every token.

Here’s the code to use:

```python
from transformers import AutoTokenizer, AutoModel

# Load the Portuguese-only tokenizer and model (downloaded once, then cached locally)
tokenizer = AutoTokenizer.from_pretrained("Geotrend/bert-base-pt-cased")
model = AutoModel.from_pretrained("Geotrend/bert-base-pt-cased")
```
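To see the waiter-and-chef flow end to end, here is a minimal sketch that tokenizes a sentence and runs it through a BERT model. So that it runs offline, it swaps in a tiny, randomly initialised BERT built with `BertTokenizer` and `BertModel`; the seven-token vocabulary, the layer sizes, and the `embed` helper are illustrative assumptions, not part of the Geotrend release. With the real checkpoint you would pass the `tokenizer` and `model` loaded above instead, and the hidden size would be 768.

```python
import os
import tempfile

import torch
from transformers import BertConfig, BertModel, BertTokenizer


def embed(text, tokenizer, model):
    """Tokenize `text` and return (inputs, last hidden states of shape batch x tokens x hidden)."""
    inputs = tokenizer(text, return_tensors="pt")  # token IDs, token types, attention mask
    with torch.no_grad():  # inference only, so no gradients are tracked
        outputs = model(**inputs)
    return inputs, outputs.last_hidden_state


# Hypothetical offline stand-in for Geotrend/bert-base-pt-cased:
# a 7-token vocabulary written to a temporary vocab.txt file.
vocab = ["[PAD]", "[UNK]", "[CLS]", "[SEP]", "[MASK]", "olá", "mundo"]
vocab_path = os.path.join(tempfile.mkdtemp(), "vocab.txt")
with open(vocab_path, "w", encoding="utf-8") as f:
    f.write("\n".join(vocab) + "\n")

# Cased tokenizer, like the real model: no lowercasing, accents kept.
tokenizer = BertTokenizer(vocab_file=vocab_path, do_lower_case=False)

# Tiny randomly initialised BERT (the real model uses hidden_size=768, 12 layers).
config = BertConfig(
    vocab_size=len(vocab),
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = BertModel(config).eval()

inputs, hidden = embed("olá mundo", tokenizer, model)
print(tokenizer.convert_ids_to_tokens(inputs["input_ids"][0].tolist()))
print(tuple(hidden.shape))
```

The tokenizer wraps the two words in `[CLS]` and `[SEP]`, so the model returns one vector per position: four positions of size 32 for this toy setup.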

Generating Smaller Versions

If you’re interested in generating smaller versions of other multilingual transformers, check out Geotrend’s GitHub repository. It contains the tools used to produce these reduced models.
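As we understand it from Geotrend’s paper *Load What You Need: Smaller Versions of Multilingual BERT*, the idea behind these smaller versions is to keep only the vocabulary entries a target language actually uses and drop the matching rows of the embedding matrix, leaving the Transformer layers as they are. The pure-Python sketch below illustrates that idea on a toy scale. The `reduce_vocab` helper, the `min_count` threshold, the bracket test for special tokens, and all the data are hypothetical; this is not the repository’s actual code. It checks that a Portuguese sentence maps to exactly the same embedding rows before and after reduction.

```python
from collections import Counter


def reduce_vocab(vocab, embeddings, corpus, min_count=1):
    """Hypothetical sketch: keep only tokens seen in a target-language corpus.

    vocab:      original token strings; list index = original token ID
    embeddings: embedding rows aligned with vocab
    corpus:     target-language sentences, already split into tokens
    Returns (reduced vocab, reduced embedding rows, old-ID -> new-ID map).
    """
    counts = Counter(tok for sentence in corpus for tok in sentence)
    kept_ids = [
        i
        for i, tok in enumerate(vocab)
        # Assumption: special tokens look like "[CLS]" and are always kept.
        if (tok.startswith("[") and tok.endswith("]")) or counts[tok] >= min_count
    ]
    new_vocab = [vocab[i] for i in kept_ids]
    new_embeddings = [embeddings[i] for i in kept_ids]  # rows copied unchanged
    old_to_new = {old: new for new, old in enumerate(kept_ids)}
    return new_vocab, new_embeddings, old_to_new


# Toy "multilingual" vocabulary with made-up 2-d embeddings.
vocab = ["[PAD]", "[UNK]", "[CLS]", "[SEP]", "olá", "mundo", "hello", "world", "##s"]
embeddings = [[float(i), i * 0.5] for i in range(len(vocab))]
portuguese_corpus = [["olá", "mundo"], ["mundo", "##s"]]

new_vocab, new_embeddings, old_to_new = reduce_vocab(vocab, embeddings, portuguese_corpus)

# Look up the same sentence in the original and the reduced tables.
sentence = ["[CLS]", "olá", "mundo", "##s", "[SEP]"]
old_ids = [vocab.index(tok) for tok in sentence]
new_ids = [old_to_new[i] for i in old_ids]  # "##s" moves from ID 8 to ID 6
original_rows = [embeddings[i] for i in old_ids]
reduced_rows = [new_embeddings[i] for i in new_ids]

print(len(vocab), "->", len(new_vocab))  # 9 -> 7
print(original_rows == reduced_rows)  # True
```

Because the kept rows are copied verbatim and the encoder is not modified, any sentence built from kept tokens reaches the Transformer layers with identical inputs. A real implementation would also have to rewrite the tokenizer’s vocabulary file so that its IDs follow the new numbering.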

Troubleshooting Tips

If you encounter any issues while implementing the model, here are some steps you can follow:

- Ensure you have the correct library versions installed. A common remedy is to update the transformers library:

```bash
pip install --upgrade transformers
```
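If you are not sure which version of transformers is installed, a small check like the sketch below can help before you upgrade. It uses only the standard library (`importlib.metadata`). The `check_package` helper and the `"4.0.0"` minimum are hypothetical placeholders, not a documented requirement of bert-base-pt-cased, and the version parser deliberately ignores pre-release suffixes such as `.dev0` or `rc1`.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_release(ver):
    """Turn '4.40.2' into (4, 40, 2), stopping at the first non-numeric part."""
    parts = []
    for piece in ver.split("."):
        if not piece.isdigit():
            break  # e.g. 'dev0' or '0rc1': pre-release details are ignored
        parts.append(int(piece))
    return tuple(parts)


def check_package(name, minimum):
    """Report whether package `name` is installed and at least version `minimum`."""
    try:
        current = version(name)
    except PackageNotFoundError:
        return f"{name} is not installed; run: pip install {name}"
    if parse_release(current) < parse_release(minimum):
        return f"{name} {current} is older than {minimum}; run: pip install --upgrade {name}"
    return f"{name} {current} looks fine"


# Hypothetical minimum version, used only for illustration.
print(check_package("transformers", "4.0.0"))
```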

Conclusion

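Using bert-base-pt-cased lets you run Portuguese NLP tasks with a smaller model that gives the same representations as bert-base-multilingual-cased for the language it keeps, so you preserve the original accuracy while loading fewer parameters. Because reduced versions can be generated for any set of languages, developers can choose a model that covers exactly the languages their application needs.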

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.