In natural language processing (NLP), understanding syntactic structure is crucial for many applications. If you want your applications to categorize tokens effectively, you’ve come to the right place! In this article, we’ll walk through using the Chinese RoBERTa Base UPOS model (`KoichiYasuoka/chinese-roberta-base-upos`) for part-of-speech (POS) tagging and dependency parsing. The model is pre-trained on Chinese Wikipedia texts in both simplified and traditional script. Let’s dive in!

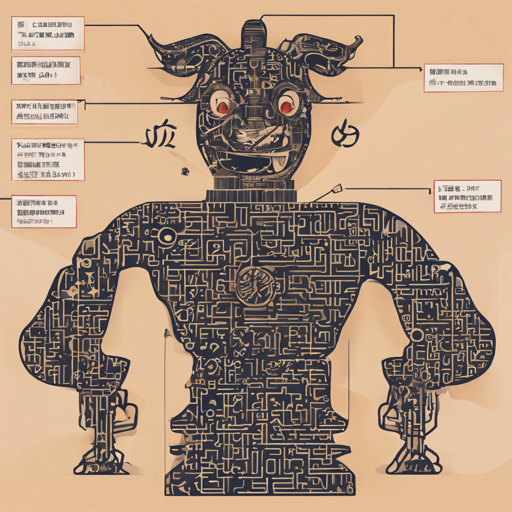

Model Overview

The Chinese RoBERTa Base UPOS model is a BERT model for Chinese. It was pre-trained on texts from both simplified and traditional Chinese Wikipedia and is intended for POS tagging and dependency parsing. POS tagging assigns each word its part of speech (here, every word is tagged with a UPOS, or Universal Part-of-Speech, tag). Dependency parsing identifies the grammatical relationships between words by linking each word to the word it depends on (its head).
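For reference, the UPOS inventory defined by Universal Dependencies has 17 coarse tags. The sketch below writes that inventory out as a Python dictionary with a small lookup helper (`describe_upos` is a hypothetical name, not part of any library). It is not read from the model: the checkpoint’s actual label strings live in `model.config.id2label` and may not match these bare tag names exactly.

```python
from typing import Dict

# The 17 Universal POS tags from Universal Dependencies (v2).
UPOS_TAGS: Dict[str, str] = {
    "ADJ": "adjective",
    "ADP": "adposition",
    "ADV": "adverb",
    "AUX": "auxiliary",
    "CCONJ": "coordinating conjunction",
    "DET": "determiner",
    "INTJ": "interjection",
    "NOUN": "noun",
    "NUM": "numeral",
    "PART": "particle",
    "PRON": "pronoun",
    "PROPN": "proper noun",
    "PUNCT": "punctuation",
    "SCONJ": "subordinating conjunction",
    "SYM": "symbol",
    "VERB": "verb",
    "X": "other",
}


def describe_upos(tag: str) -> str:
    """Return a readable description of a UPOS tag (hypothetical helper)."""
    return UPOS_TAGS.get(tag, f"unknown tag: {tag}")
```

For example, `describe_upos("PROPN")` returns `"proper noun"`.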

How to Use the Model

Integrating the Chinese RoBERTa Base UPOS model into your project is straightforward. Below are two methods you can use to set it up:

- Using the Transformers library:

```python
from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("KoichiYasuoka/chinese-roberta-base-upos")
model = AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/chinese-roberta-base-upos")
```

- Using esupar:

```python
import esupar

nlp = esupar.load("KoichiYasuoka/chinese-roberta-base-upos")
```
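With the Transformers route, loading the tokenizer and model is only the first step. The sketch below shows one way to run the model on a sentence and read off the highest-scoring label for each token. The helper names `decode_predictions` and `tag` are hypothetical, and the sketch assumes PyTorch is installed. It makes no assumption about the label format: whatever `model.config.id2label` contains is returned as-is.

```python
from typing import Dict, List, Sequence, Tuple


def decode_predictions(
    tokens: Sequence[str],
    logits: Sequence[Sequence[float]],
    id2label: Dict[int, str],
    skip: Sequence[str] = ("[CLS]", "[SEP]", "[PAD]"),
) -> List[Tuple[str, str]]:
    """Pair each non-special token with its highest-scoring label (hypothetical helper)."""
    pairs: List[Tuple[str, str]] = []
    for token, scores in zip(tokens, logits):
        if token in skip:
            continue  # special tokens carry no POS tag
        best = max(range(len(scores)), key=lambda i: scores[i])  # argmax over labels
        pairs.append((token, id2label[best]))
    return pairs


def tag(text: str, model_name: str = "KoichiYasuoka/chinese-roberta-base-upos") -> List[Tuple[str, str]]:
    """Run the token-classification model on one sentence (downloads it on first use)."""
    # Heavy imports stay local so decode_predictions works without torch/transformers.
    import torch
    from transformers import AutoModelForTokenClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForTokenClassification.from_pretrained(model_name)
    encoded = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        logits = model(**encoded).logits[0]  # shape: (sequence_length, num_labels)
    tokens = tokenizer.convert_ids_to_tokens(encoded["input_ids"][0].tolist())
    return decode_predictions(tokens, logits.tolist(), model.config.id2label,
                              skip=tokenizer.all_special_tokens)
```

Calling `tag("我把这本书看完了。")` should return a list of `(token, label)` pairs. Chinese BERT-style tokenizers usually split Chinese text into single characters, so expect roughly one entry per character rather than per word.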

Understanding the Code through an Analogy

Imagine you’re preparing for a theatrical play, where each actor has a specific role – some are heroes, others villains, and some play side characters. In our analogy, the Chinese RoBERTa Base UPOS model acts as the director.

The first code snippet corresponds to the preparation stage of the play. AutoTokenizer and AutoModelForTokenClassification are like the cast learning their lines and roles (in this case, token classification): the tokenizer breaks the script (your text data) into manageable pieces (tokens), and the model assigns each piece its role (a UPOS tag).

In the second snippet, the esupar library acts as the director’s assistant, coordinating the actors so the performance runs smoothly. A single `esupar.load` call gives you a pipeline that tokenizes, POS-tags, and dependency-parses text, so you don’t have to wire those steps together yourself.
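The esupar README shows that printing the result of calling the loaded pipeline on a sentence produces CoNLL-U output: one word per line, with tab-separated columns that include UPOS, HEAD, and DEPREL. Here is a minimal sketch for pulling the POS tags and dependency arcs out of that text. `Word`, `parse_conllu`, and `describe_arcs` are hypothetical names, and the sentence used in the test is hand-written for illustration, not real model output.

```python
from typing import List, NamedTuple, Tuple


class Word(NamedTuple):
    index: int   # CoNLL-U column 1 (ID)
    form: str    # column 2 (FORM)
    upos: str    # column 4 (UPOS)
    head: int    # column 7 (HEAD); 0 marks the root
    deprel: str  # column 8 (DEPREL)


def parse_conllu(conllu: str) -> List[Word]:
    """Extract one Word per regular word line of a CoNLL-U string."""
    words: List[Word] = []
    for line in conllu.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comments such as "# text = ..."
        cols = line.split("\t")
        if len(cols) != 10 or not cols[0].isdigit():
            continue  # skip multiword ranges ("1-2") and empty nodes ("1.1")
        words.append(Word(int(cols[0]), cols[1], cols[3], int(cols[6]), cols[7]))
    return words


def describe_arcs(words: List[Word]) -> List[Tuple[str, str, str]]:
    """Return (word, relation, head word) triples; a head of 0 becomes "ROOT"."""
    forms = {w.index: w.form for w in words}
    return [(w.form, w.deprel, forms.get(w.head, "ROOT")) for w in words]
```

With esupar installed, something like `parse_conllu(str(nlp("我看书。")))` should yield one `Word` per token, and `describe_arcs` turns those into readable (word, relation, head) triples.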

Troubleshooting Ideas

Even the most well-crafted processes may experience hiccups. Here are some troubleshooting tips:

- If you encounter an error about missing packages, make sure both the `transformers` and `esupar` libraries are installed in your Python environment (for example, with `pip install transformers esupar`).
- If loading fails with an `OSError` saying the model cannot be found, double-check the model name (`KoichiYasuoka/chinese-roberta-base-upos`) for typos when initializing the tokenizer and model, and make sure you can reach the Hugging Face Hub.
- If performance issues arise, check your computer’s resources. Larger models need more RAM, and running on CPU is slower than on a GPU.
- For further assistance or insights, feel free to connect with the community at fxis.ai.
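For the first tip, a quick way to find out which packages are missing is to ask Python’s import system before loading anything. This small sketch uses the standard library’s `importlib.util.find_spec`; `missing_packages` is a hypothetical helper name.

```python
import importlib.util
from typing import Iterable, List


def missing_packages(names: Iterable[str]) -> List[str]:
    """Return the top-level package names that Python cannot find for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

Running `missing_packages(["transformers", "esupar", "torch"])` returns an empty list when everything is installed; any name it does return can be installed with pip.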

See Also

If you’re looking for additional resources, check out esupar, a tokenizer, POS tagger, and dependency parser built on BERT, RoBERTa, and DeBERTa models.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.