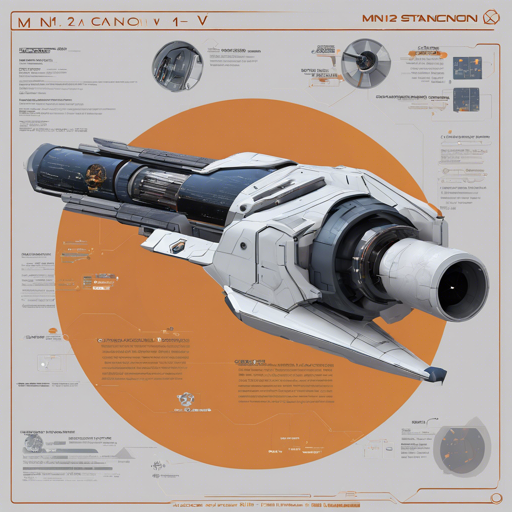

Welcome to this guide to MN-12B-Starcannon-v5 unofficial, a 12B-parameter language model that can power a range of AI applications. We cover how to run the model efficiently, how to choose among its quants, and how to troubleshoot common problems.

Understanding Quants

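Quants are quantized versions of the original model. Quantization stores the weights at lower numerical precision (fewer bits per weight), which shrinks the file and reduces memory and compute requirements, so the model can run on less powerful hardware. The trade-off is some loss of output quality, which grows as the number of bits per weight drops.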
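To make the idea concrete, here is a toy sketch of block-wise absmax quantization in pure Python. It is a simplified illustration, not the actual GGUF K-quant or IQ formats used by the files below, which use more elaborate block layouts; the block size of 32, the 4-bit range, and the 16-bit per-block scale are assumptions chosen for readability.

```python
import random


def quantize_block(block, bits=4):
    """Symmetric absmax quantization of one block of floats to signed ints."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed values
    absmax = max(abs(x) for x in block)
    scale = absmax / qmax if absmax > 0 else 1.0  # one scale per block
    q = [max(-qmax, min(qmax, round(x / scale))) for x in block]
    return scale, q


def quantize(weights, block_size=32, bits=4):
    """Split weights into blocks and quantize each block independently."""
    return [quantize_block(weights[i:i + block_size], bits)
            for i in range(0, len(weights), block_size)]


def dequantize(blocks):
    """Reconstruct approximate floats: value = scale * integer."""
    return [scale * v for scale, q in blocks for v in q]


random.seed(0)
w = [random.gauss(0.0, 0.02) for _ in range(256)]  # toy "weights"
blocks = quantize(w)
w_hat = dequantize(blocks)

# 4 bits per weight plus one 16-bit scale shared by 32 weights (assumed fp16 scale).
bits_per_weight = 4 + 16 / 32
max_err = max(abs(a - b) for a, b in zip(w, w_hat))
print(f"{bits_per_weight} bits/weight vs 16 for fp16; max abs error {max_err:.5f}")
```

Each reconstructed weight is off by at most half its block's scale, which is why blocks with a few large outliers lose more precision than uniform ones, and why real quant formats spend extra effort on choosing scales well.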

Getting Started with MN-12B-Starcannon-v5

To start using the MN-12B-Starcannon-v5 model effectively, follow these steps:

- Download the Model: You can download the various quants from Hugging Face. Each quant has a different size and quality level, so choose based on your hardware and needs.

- Refer to Documentation: If you’re unsure how to use GGUF files, refer to one of TheBloke’s READMEs for detailed instructions, including how to concatenate multi-part files.

- Select Your Quant: Depending on your requirements, here are some of the available quants:

  - i1-IQ1_S: 3.1 GB – for the desperate; use it only if nothing larger fits.

  - i1-Q3_K_S: 5.6 GB – IQ3_XS is probably the better choice.

  - i1-Q4_K_S: 7.2 GB – optimal balance of size, speed, and quality.

  - i1-Q5_K_M: 8.8 GB – higher quality, at a larger size.
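The download and selection steps above can be sketched in Python. This is a minimal sketch under several assumptions: the sizes come from the list above, the 1.5 GB overhead reserved for the context cache and runtime buffers is a rough guess, the file-naming pattern and any repository id you pass in are hypothetical placeholders (check the model page for the real names), and `join_parts` assumes plain byte-split parts, as described in TheBloke’s READMEs, passed in the correct order.

```python
from pathlib import Path
import shutil

# File sizes in GB, copied from the quant list above.
QUANT_SIZES_GB = {
    "i1-IQ1_S": 3.1,
    "i1-Q3_K_S": 5.6,
    "i1-Q4_K_S": 7.2,
    "i1-Q5_K_M": 8.8,
}


def pick_quant(memory_gb, overhead_gb=1.5):
    """Return the largest listed quant that fits in memory_gb, or None.

    overhead_gb is an assumed allowance for the KV cache and runtime buffers.
    """
    budget = memory_gb - overhead_gb
    fitting = [(size, name) for name, size in QUANT_SIZES_GB.items() if size <= budget]
    return max(fitting)[1] if fitting else None


def quant_filename(quant, stem="MN-12B-Starcannon-v5-unofficial"):
    """Hypothetical naming pattern: '<stem>.<quant>.gguf'."""
    return f"{stem}.{quant}.gguf"


def download_quant(repo_id, filename):
    """Download one file from a Hugging Face repo and return its local path."""
    from huggingface_hub import hf_hub_download  # pip install huggingface_hub
    return hf_hub_download(repo_id=repo_id, filename=filename)


def join_parts(part_paths, output_path):
    """Concatenate byte-split parts, in the order given, into one file."""
    with open(output_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return Path(output_path)


if __name__ == "__main__":
    quant = pick_quant(memory_gb=12)
    print(quant, quant_filename(quant))
    # path = download_quant("<namespace>/<repo>", quant_filename(quant))  # placeholder repo id
```

Choosing the largest quant that fits, rather than the smallest, follows the list’s own advice: quality rises with size, so spare memory is best spent on bits per weight.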

Using the Model

Once you have downloaded the appropriate quant, load the GGUF file in a runtime that supports the format, such as llama.cpp or a tool built on it, and start sending it prompts.
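A minimal sketch of this step, assuming the llama-cpp-python bindings (one of several llama.cpp-based runtimes; this guide does not prescribe one). The file path is a placeholder, and the system prompt and parameter values are illustrative; check the model card for the prompt format the model expects.

```python
def build_messages(question, system_prompt="You are a helpful assistant."):
    """Build an OpenAI-style message list for a single-turn chat."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def ask(model_path, question, n_ctx=4096, n_gpu_layers=0, max_tokens=256):
    """Load a GGUF file with llama-cpp-python and return the model's reply."""
    from llama_cpp import Llama  # pip install llama-cpp-python

    llm = Llama(
        model_path=model_path,
        n_ctx=n_ctx,                # context window in tokens
        n_gpu_layers=n_gpu_layers,  # layers offloaded to the GPU (-1 = all)
        verbose=False,
    )
    result = llm.create_chat_completion(
        messages=build_messages(question), max_tokens=max_tokens
    )
    return result["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Placeholder path: point this at the quant you downloaded.
    print(ask("MN-12B-Starcannon-v5-unofficial.i1-Q4_K_S.gguf",
              "Summarize what quantization does in one sentence."))
```

Loading a 12B model takes a while, so in a real application you would create the `Llama` object once and reuse it across questions rather than reloading it on every call as this short sketch does.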

Example Usage

Imagine the model as a well-read librarian. You ask a specific question, and it composes an answer from what it learned during training. Unlike a real librarian, it does not look anything up, so its answers can be wrong, and the quant you select affects how closely they match those of the full-precision model.
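As a concrete example, the same kind of question can be sent to llama.cpp’s bundled HTTP server, which exposes an OpenAI-compatible `/v1/chat/completions` endpoint. This sketch assumes the server was started with something like `llama-server -m <your-quant>.gguf --port 8080` and is reachable on localhost; the port, timeout, and sampling values are assumptions.

```python
import json
import urllib.request


def build_chat_request(question, max_tokens=256, temperature=0.7):
    """Encode an OpenAI-style chat completion request body as JSON bytes."""
    payload = {
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return json.dumps(payload).encode("utf-8")


def ask_server(question, base_url="http://localhost:8080"):
    """POST a question to a running llama.cpp server and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=build_chat_request(question),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask_server("Which quant should I use on a 12 GB GPU, and why?"))
```

Running the model behind a server keeps it loaded between questions, which suits interactive use better than reloading the file for each prompt.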

Troubleshooting Common Issues

If you encounter issues while using the MN-12B-Starcannon-v5 model or its quants, don’t worry! Here are a few troubleshooting tips:

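- Model Loading Issues: If the model fails to load, check that the file downloaded completely, that multi-part files were concatenated in the correct order, that any required dependencies are installed, and that your runtime is recent enough to support the quant type (the IQ quants may need a relatively recent llama.cpp build).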

- Performance Problems: If the model runs slower than expected, try a smaller quant, or make sure the whole model fits in your GPU’s VRAM or in system RAM; when a GPU is available, offloading more layers to it usually helps.

- Inaccurate Responses: If the model produces unsatisfactory answers, remember that quality varies between quants, and lower-bit quants lose more of it. Try switching to a higher-quality quant for better results.
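Many loading failures come from truncated downloads or parts joined in the wrong order. A quick check, sketched below under the assumption of a standard little-endian GGUF file, is to confirm that the file begins with the 4-byte magic `GGUF` followed by a 32-bit format version. This only inspects the header, so a file that passes can still be truncated; comparing its size with the size listed for the quant is a useful second check.

```python
import os
import struct

GGUF_MAGIC = b"GGUF"


def check_gguf(path):
    """Return (ok, message) after a quick sanity check of a GGUF file header."""
    if not os.path.isfile(path):
        return False, f"file not found: {path}"
    with open(path, "rb") as f:
        header = f.read(8)  # 4-byte magic + uint32 version
    if len(header) < 8:
        return False, "file too small to be a GGUF model (truncated download?)"
    if header[:4] != GGUF_MAGIC:
        return False, "missing GGUF magic bytes (wrong format, or parts joined out of order?)"
    (version,) = struct.unpack("<I", header[4:8])  # little-endian uint32
    return True, f"GGUF version {version}"


if __name__ == "__main__":
    # Placeholder path: point this at the file that fails to load.
    print(check_gguf("MN-12B-Starcannon-v5-unofficial.i1-Q4_K_S.gguf"))
```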

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

MN-12B-Starcannon-v5 comes in a range of quants, so you can trade file size and speed against output quality to fit your hardware. Pick the quant that fits your memory budget, consult the GGUF documentation when in doubt, and use the troubleshooting tips above when issues arise.
