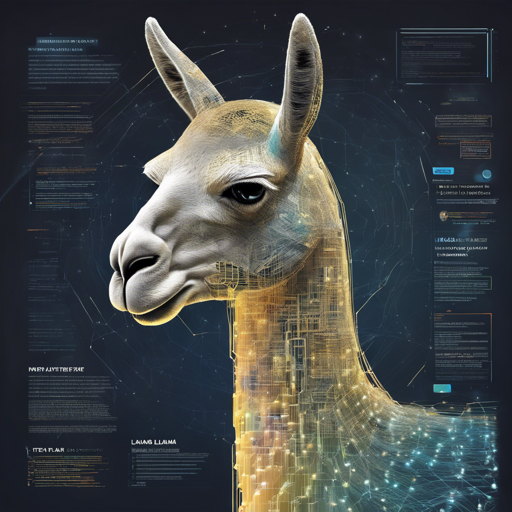

Welcome to the future of natural language processing! With Meta Llama 3, you can build a text generation application in just a few lines of Python. In this guide, we walk through how to get started with Llama 3: setup, a usage example, and troubleshooting tips. Let’s dive in!

Getting Started with Meta Llama 3

The Meta Llama 3 family includes pretrained and instruction-tuned large language models in 8B and 70B parameter sizes. The instruction-tuned variants are optimized for dialogue. Here’s how you can put them to work:
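Which checkpoint you load matters: for dialogue, pick an instruction-tuned repository (the `-Instruct` suffix) rather than a base one. The helper below is a hypothetical sketch of the naming pattern, not part of any library. It assumes the four original repositories published under the `meta-llama` organization on the Hugging Face Hub.

```python
# Hypothetical helper (not a library API): builds the Hub repo ID for the
# original Meta Llama 3 checkpoints, assuming the "meta-llama" naming pattern.
SIZES = ("8B", "70B")


def llama3_model_id(size: str = "8B", instruct: bool = True) -> str:
    """Return a repo ID such as 'meta-llama/Meta-Llama-3-8B-Instruct'."""
    if size not in SIZES:
        raise ValueError(f"Meta Llama 3 was released in sizes {SIZES}, got {size!r}")
    # Instruction-tuned (dialogue) checkpoints carry the "-Instruct" suffix
    suffix = "-Instruct" if instruct else ""
    return f"meta-llama/Meta-Llama-3-{size}{suffix}"


print(llama3_model_id())                       # meta-llama/Meta-Llama-3-8B-Instruct
print(llama3_model_id("70B", instruct=False))  # meta-llama/Meta-Llama-3-70B
```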

Installation Requirements

- Python 3.8 or later

- PyTorch framework

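- Transformers library by Hugging Face

- Accelerate (needed for the `device_map="auto"` setting used below)

- A Hugging Face account with access to the gated Meta Llama 3 repositories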
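Before loading the model, you can confirm that these requirements are met. The following is a minimal sketch that uses only the standard library: it checks for Python 3.8 or later and looks up each package without importing it. The `check_environment` name is hypothetical, and including `accelerate` in the list is an assumption based on the `device_map="auto"` setting, which depends on it.

```python
import importlib.util
import sys

# Packages assumed for this guide; "accelerate" backs device_map="auto"
REQUIRED_PACKAGES = ("torch", "transformers", "accelerate")


def check_environment(min_python=(3, 8), packages=REQUIRED_PACKAGES):
    """Report whether the interpreter and each package meet the requirements."""
    report = {"python": sys.version_info[:2] >= tuple(min_python)}
    for name in packages:
        # find_spec returns None when a top-level package cannot be found
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item:<13} {'OK' if ok else 'MISSING'}")
```

Any line marked `MISSING` points to a package to install before continuing.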

Basic Usage with Transformers Pipeline

To use Llama 3, you write a short Python script. Think of it like cooking: the libraries and methods are your ingredients, and the script is the recipe that gets everything cooking! Here’s a step-by-step breakdown:

1. Import Required Libraries

First, you need to gather your ingredients:

```python
import transformers
import torch
```

2. Set Up Your Model

Next, it’s time to prepare the main dish:

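```python
model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

# Build a text-generation pipeline: weights load in bfloat16, and
# device_map="auto" (requires Accelerate) spreads them over available GPUs/CPU
pipeline = transformers.pipeline(
    task="text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="auto",
)
```

3. Prepare Input Messages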

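Now, let’s mix the ingredients. The conversation is a list of messages, each with a `role` and `content`: the system message sets the chatbot’s persona, and the user message asks the question: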

```python
messages = [
    {"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},
    {"role": "user", "content": "Who are you?"},
]
```

4. Generate Responses

Finally, let’s get ready to serve!

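```python
outputs = pipeline(messages, max_new_tokens=256)
# For chat input, "generated_text" holds the whole conversation; the last message is the reply
print(outputs[0]["generated_text"][-1]["content"])
```

This code loads the model, formats the conversation with Llama 3’s chat template, and prints the assistant’s reply, which should come back in pirate speak!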
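The example above sends a single question. To hold a multi-turn conversation, append the model’s reply and the next user message to the list, then call the pipeline again. The sketch below wraps that loop in a hypothetical `chat_turn` helper. It assumes the pipeline returns `[{"generated_text": [...]}]` with the assistant’s reply as the last message, which is how recent Transformers versions handle chat input. It also uses a stand-in `fake_pipeline` so it runs without downloading the model.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def chat_turn(generate: Callable, messages: List[Message], user_text: str,
              max_new_tokens: int = 256) -> List[Message]:
    """Add a user message, run the model, and return the extended conversation.

    `generate` is assumed to behave like the text-generation pipeline: given a
    message list, it returns [{"generated_text": [...messages, reply]}].
    """
    conversation = messages + [{"role": "user", "content": user_text}]
    outputs = generate(conversation, max_new_tokens=max_new_tokens)
    # The last entry of "generated_text" should be the assistant's new message
    reply = outputs[0]["generated_text"][-1]
    if reply.get("role") != "assistant":
        raise ValueError("expected the last message to come from the assistant")
    return conversation + [reply]


if __name__ == "__main__":
    # Stand-in for the real pipeline so this sketch runs offline
    def fake_pipeline(msgs, max_new_tokens=256):
        return [{"generated_text": msgs + [{"role": "assistant", "content": "Arr, I be a chatbot!"}]}]

    history = [{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"}]
    history = chat_turn(fake_pipeline, history, "Who are you?")
    print(history[-1]["content"])  # Arr, I be a chatbot!
```

With the real model, pass the `pipeline` object from step 2 in place of `fake_pipeline`. Each call then sends the full history, so earlier turns stay in context.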

Troubleshooting Tips

While working with Meta Llama 3, you might encounter some hiccups. Here are a few troubleshooting tips to ensure smooth sailing:

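- If you face import errors, make sure all required libraries are installed. You can install them with pip by running `pip install transformers torch accelerate`.

- Check the model ID in your script to ensure it matches what is available on the Hugging Face model hub. Meta Llama 3 repositories are gated, so you must also accept the license on the model page and log in (for example with `huggingface-cli login`) before the weights will download.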

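- If generation is slow, run the model on a GPU. In bfloat16, the 8B model’s weights alone take about 16 GB (8 billion parameters × 2 bytes each). On a GPU with less free memory than that, `device_map="auto"` offloads layers to the CPU, which is much slower.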

- If responses are cut off mid-sentence, increase `max_new_tokens`, which caps how many tokens the model generates beyond the prompt. Keep the prompt plus `max_new_tokens` within Llama 3’s 8,192-token context window.

- Remember, for more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
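The GPU and `max_new_tokens` tips come down to simple arithmetic. The rough sketch below estimates weight memory as parameters × bytes per parameter and checks whether a prompt fits in an 8,192-token context window. The function names are hypothetical, and the parameter counts are the nominal 8B and 70B sizes. Actual memory use is higher, because activations and the attention key/value cache also need space.

```python
# Bytes needed to store one parameter in common dtypes (weights only)
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_context(prompt_tokens: int, max_new_tokens: int, context_window: int = 8192) -> bool:
    """True if the prompt plus the requested new tokens fit in the context window."""
    return prompt_tokens + max_new_tokens <= context_window


print(weight_memory_gb(8e9))                               # 16.0
print(weight_memory_gb(70e9))                              # 140.0
print(fits_context(prompt_tokens=40, max_new_tokens=256))  # True
```

For a real prompt, count its tokens with `pipeline.tokenizer` and pass that number as `prompt_tokens`.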

Conclusion

Meta Llama 3 is a capable foundation for text generation applications. With the steps above, you can load it through the Transformers pipeline and use it to build engaging, dynamic conversational interfaces in your own projects.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Ready to Explore Further?

With the growing capabilities of AI and tools like Meta Llama 3, there’s a world of possibilities waiting for you. Happy coding!