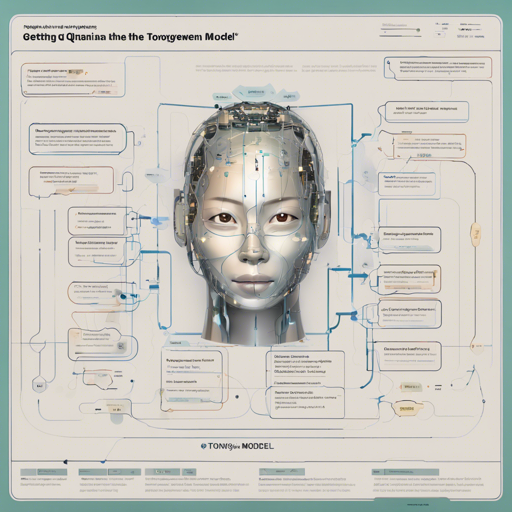

Welcome to your guide on using the Tongyi-Qianwen model for text generation! This model is fine-tuned to emulate the elegant prose of the Claude 3 models and is intended for AI text-generation projects. Let’s dive in!

Understanding the Model

The Tongyi-Qianwen model is the latest in a series of models aimed at replicating high-quality prose, specifically the style of the Claude 3 Sonnet and Opus models. It was created by fine-tuning Qwen1.5 32B, which serves as its base model. Building it is a bit like a skilled chef crafting a gourmet dish: it takes high-quality ingredients (the base model and curated datasets), a carefully refined recipe (the fine-tuning process), and the right preparation techniques.

How to Prompt the Model

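To engage effectively with the Tongyi-Qianwen model, you need to format your prompts correctly. The model was fine-tuned on the ChatML format, in which every conversational turn opens with <|im_start|> followed by the speaker's role (such as user or assistant) and closes with <|im_end|>. This role-tagged structure keeps multi-turn conversations natural and unambiguous. Here’s a typical input example: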

```text
<|im_start|>user
Hi there!<|im_end|>
<|im_start|>assistant
Nice to meet you!<|im_end|>
<|im_start|>user
Can I ask a question?<|im_end|>
<|im_start|>assistant
```

Using the Model

The following steps will guide you through using the Tongyi-Qianwen model:

- Download the model weights from Hugging Face.

- Load the model and its tokenizer in your script or application, for example with the Hugging Face transformers library.

- Use the prompt format shown above to interact with the model.
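The steps above can be sketched in Python for the prompt side of the workflow. This minimal example renders a conversation into the ChatML layout from the input example and trims a raw completion at the first <|im_end|> marker. The helper names build_chatml_prompt and extract_reply are hypothetical, and downloading and loading the weights are left out because no repository ID is given here.

```python
# A minimal sketch of the prompt side of using the model.
# build_chatml_prompt and extract_reply are hypothetical helpers, not a library API.

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def build_chatml_prompt(messages: list[dict[str, str]]) -> str:
    """Render messages as ChatML and leave an open assistant turn at the end.

    Each message is a dict with a "role" (e.g. "user" or "assistant")
    and a "content" string.
    """
    parts = []
    for message in messages:
        # Each completed turn: <|im_start|>role, newline, content, <|im_end|>, newline.
        parts.append(f"{IM_START}{message['role']}\n{message['content']}{IM_END}\n")
    # The trailing open assistant turn cues the model to write the next reply.
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


def extract_reply(completion: str) -> str:
    """Keep only the text generated before the model's first end-of-turn marker."""
    return completion.split(IM_END, 1)[0].strip()


if __name__ == "__main__":
    conversation = [
        {"role": "user", "content": "Hi there!"},
        {"role": "assistant", "content": "Nice to meet you!"},
        {"role": "user", "content": "Can I ask a question?"},
    ]
    # Prints the same layout as the input example above.
    print(build_chatml_prompt(conversation))
```

If you load the model with the Hugging Face transformers library, tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True) can render the prompt for you when the tokenizer ships a ChatML chat template. Some templates also insert a default system message, so compare the two outputs before relying on either.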

Credits

This project is a collaborative effort. Special thanks go to the contributors who provided valuable datasets and insights:

- NobodyExistsOnTheInternet/claude_3.5s_single_turn_unslop_filtered

- NobodyExistsOnTheInternet/PhiloGlanSharegpt

- Magpie-Reasoning-Medium-Subset

- kalomaze/Opus_Instruct_25k

- And many more contributors!

Training Details

The model was trained for 2 epochs using full-parameter fine-tuning (all weights updated, rather than a parameter-efficient method such as LoRA) on 8x NVIDIA H100 Tensor Core GPUs.
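To put that hardware in perspective, here is a rough memory estimate for full-parameter fine-tuning. It is a sketch under assumptions the training details do not state: mixed-precision training with an Adam-style optimizer (a common accounting of about 16 bytes per parameter for weights, gradients, an fp32 master copy and two optimizer moments), a nominal 32 billion parameters, 80 GB H100 cards, and no activation memory. The function names are hypothetical.

```python
# Rough memory estimate for full-parameter fine-tuning (a sketch, not a measurement).
# Assumptions not stated in the source: mixed-precision training with an
# Adam-style optimizer, a nominal 32e9 parameters, 80 GB per GPU, and no
# activation memory. Function names are hypothetical.

# bf16 weights (2) + bf16 gradients (2) + fp32 master weights (4)
# + fp32 Adam first moment (4) + fp32 Adam second moment (4) = 16 bytes
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4
GPU_MEMORY_GB = 80  # assumed 80 GB H100 variant


def full_finetune_state_gb(num_params: float) -> float:
    """Weights, gradients and optimizer states for all parameters, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


def per_gpu_state_gb(num_params: float, num_gpus: int) -> float:
    """Per-GPU share if all states are sharded evenly (e.g. ZeRO stage 3 or FSDP)."""
    return full_finetune_state_gb(num_params) / num_gpus


if __name__ == "__main__":
    params = 32e9
    print(f"total: {full_finetune_state_gb(params):.0f} GB")
    print(f"per GPU (8 GPUs): {per_gpu_state_gb(params, 8):.0f} GB of {GPU_MEMORY_GB} GB")
```

Under these assumptions, model and optimizer states alone come to about 512 GB, or 64 GB per GPU when sharded evenly across eight cards, which is why a single GPU would not be enough; activations add to this.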

Troubleshooting

If you encounter issues while using the Tongyi-Qianwen model, consider the following pointers:

- Check that the model is loaded and initialized correctly.

- Make sure your prompt follows the ChatML format exactly: every completed turn ends with <|im_end|>, and the prompt ends with an open <|im_start|>assistant turn.

- Monitor GPU memory; if you hit out-of-memory errors, try shorter inputs or smaller batches.
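The formatting and memory checks can be partly automated. This sketch flags prompts that drift from the ChatML layout in the input example (such as a doubled <|im_start|> marker or a missing open assistant turn) and estimates the memory needed for the weights alone. The function names are hypothetical, the allowed roles (system, user, assistant) are an assumption, and the default of 2 bytes per parameter assumes 16-bit (bf16 or fp16) weights; activations and the attention cache come on top.

```python
import re

# Hypothetical troubleshooting helpers; not part of any library.
ALLOWED_ROLES = {"system", "user", "assistant"}  # assumed set of roles
# One completed turn: <|im_start|>role\n ... <|im_end|>\n (content may span lines).
TURN = re.compile(r"<\|im_start\|>(\w+)\n(.*?)<\|im_end\|>\n", re.DOTALL)


def check_chatml_prompt(prompt: str) -> list[str]:
    """Return the problems found; an empty list means the prompt looks well formed."""
    problems = []
    pos = 0
    # Walk through consecutive completed turns from the start of the prompt.
    while (match := TURN.match(prompt, pos)) is not None:
        role, content = match.group(1), match.group(2)
        if role not in ALLOWED_ROLES:
            problems.append(f"unknown role {role!r} at offset {pos}")
        if "<|im_start|>" in content:
            problems.append(f"unclosed turn before offset {match.end()}")
        pos = match.end()
    # Whatever remains must be exactly the open assistant turn.
    if prompt[pos:] != "<|im_start|>assistant\n":
        problems.append(f"malformed text or missing open assistant turn at offset {pos}")
    return problems


def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Memory for the model weights alone, in GB (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    garbled = "<|im_start|><|im_start|>user\nHi<|im_end|>\n<|im_start|>assistant\n"
    print(check_chatml_prompt(garbled))
    print(f"16-bit weights for 32e9 parameters: {weight_memory_gb(32e9):.0f} GB")
```

The walrus operator requires Python 3.8 or later, and the list[str] annotation requires 3.9 or later.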

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.