Minitron is a family of small language models derived from NVIDIA's Nemotron-4 15B model. They were created by pruning the larger model and then retraining the smaller versions with knowledge distillation, which makes them efficient enough for research and development work. In this post, we walk through setting up the Minitron 8B model for text generation and share some troubleshooting tips.

Understanding Minitron: The Power of Pruning

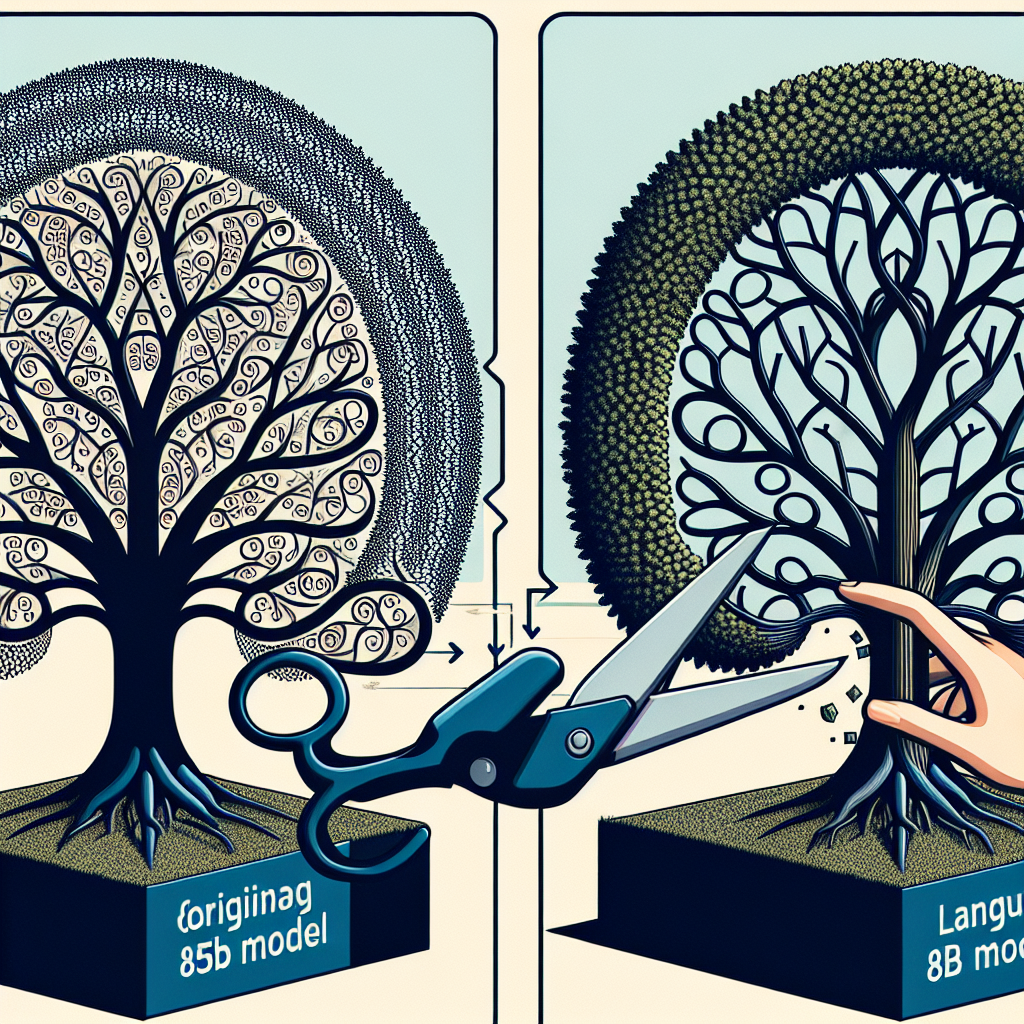

Think of how a film adapts a long novel: it keeps the key dialogue and themes and trims everything else. Minitron does something similar with large language models. It starts from the sprawling 15B-parameter model and prunes it into smaller 8B and 4B models. Because the pruned models keep much of the original's capability, they still perform well while needing far fewer resources. Think of it as a sleek, efficient cut of a cinematic blockbuster!
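Minitron's pruning is structured: instead of zeroing individual weights, it removes whole units (such as MLP neurons and attention heads) and ranks them by how strongly they activate on a small calibration set. The toy sketch below illustrates that idea for the hidden neurons of a single two-layer MLP, scoring each neuron by its mean absolute activation. The function names are hypothetical and the scoring rule is a simplification; this is not NVIDIA's implementation.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def neuron_importance(activations: Matrix) -> List[float]:
    """Mean absolute activation per hidden neuron over calibration samples (rows)."""
    n_samples = len(activations)
    n_neurons = len(activations[0])
    return [
        sum(abs(row[j]) for row in activations) / n_samples
        for j in range(n_neurons)
    ]


def prune_mlp(
    w_in: Matrix, w_out: Matrix, activations: Matrix, keep: int
) -> Tuple[Matrix, Matrix, List[int]]:
    """Keep the `keep` most important hidden neurons of a two-layer MLP.

    w_in:  hidden x d_model, one row per hidden neuron
    w_out: d_model x hidden, one column per hidden neuron
    """
    scores = neuron_importance(activations)
    # Rank neurons by score (highest first), take the top `keep`,
    # then restore their original order so the layer layout is preserved.
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:keep])
    new_w_in = [w_in[j] for j in kept]
    new_w_out = [[row[j] for j in kept] for row in w_out]
    return new_w_in, new_w_out, kept


if __name__ == "__main__":
    w_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]
    w_out = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
    acts = [[0.1, 2.0, 0.0, 1.0], [0.2, 1.5, 0.1, -1.2], [0.0, 2.5, 0.0, 0.8]]
    print(prune_mlp(w_in, w_out, acts, keep=2))
```

Because each kept neuron's row in `w_in` and column in `w_out` are sliced together, the pruned layer still maps `d_model` inputs back to `d_model` outputs, just through a narrower hidden layer.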

With Minitron, you benefit from:

– Up to 40x fewer training tokens per model than training from scratch, which shortens training significantly.

– 1.8x compute cost savings for training the whole model family, compared with training each model from scratch.

– Performance comparable to other community models of similar size, with better results than earlier compression techniques.

Getting Started with Minitron on Hugging Face

To start using Minitron 8B, you need a version of the Transformers library that supports the model. The steps below clone and install a fork of the Transformers repository pinned to a specific commit.

Installation Steps

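1. Clone the Transformers fork (the SSH URL below requires an SSH key registered with GitHub; `https://github.com/suiyoubi/transformers.git` works without one):

```sh
git clone git@github.com:suiyoubi/transformers.git
```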

2. Navigate into the cloned directory:

```sh
cd transformers
```

3. Check out the specific commit:

```sh
git checkout 63d9cb0
```

4. Install the library from the checked-out source:

```sh
pip install .
```
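It is easy to install the fork in one Python environment and run the model in another, or to have a later `pip install -U transformers` replace the fork. A quick way to confirm which copy Python will import is the standard-library check below; the function name is hypothetical and it only reports the version and location, it does not verify Minitron support.

```python
import importlib.metadata
import importlib.util
from typing import Optional, Tuple


def transformers_install_info() -> Optional[Tuple[str, str]]:
    """Return (version, file location) of the importable transformers package, or None."""
    spec = importlib.util.find_spec("transformers")
    if spec is None or spec.origin is None:
        return None
    try:
        version = importlib.metadata.version("transformers")
    except importlib.metadata.PackageNotFoundError:
        version = "unknown"
    return version, spec.origin


if __name__ == "__main__":
    info = transformers_install_info()
    if info is None:
        print("transformers is not installed in this environment")
    else:
        print(f"transformers {info[0]} loaded from {info[1]}")
```

If the printed location is not inside the cloned `transformers` directory or the environment's `site-packages` you installed into, you are likely running a different environment.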

Loading the Minitron-8B Model

Once you’ve installed the specified version of the Transformers library, you can start using the Minitron-8B model for text generation. Here’s a code snippet that demonstrates how to load the model and prompt it for text output:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model from the Hugging Face Hub
model_path = "nvidia/Minitron-8B-Base"
tokenizer = AutoTokenizer.from_pretrained(model_path)

# Requires a CUDA GPU; bfloat16 halves weight memory compared with float32
device = "cuda"
dtype = torch.bfloat16
model = AutoModelForCausalLM.from_pretrained(model_path, torch_dtype=dtype, device_map=device)

# Prepare the input text and move it to the model's device
prompt = "To be or not to be,"
input_ids = tokenizer.encode(prompt, return_tensors="pt").to(model.device)

# Generate the output; max_length counts the prompt tokens as well as new ones
output_ids = model.generate(input_ids, max_length=50, num_return_sequences=1)

# Decode and print the output
output_text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
print(output_text)
```

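In this snippet:

– We load the tokenizer and the model from the same Hub path, `nvidia/Minitron-8B-Base`.

– We place the model on the GPU in bfloat16 precision to reduce memory use.

– We encode a prompt, let the model continue it up to 50 tokens in total, and decode the result back into text.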
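Under the hood, `model.generate` runs an autoregressive loop: it scores the next token, appends one, and repeats until the sequence reaches `max_length` tokens (prompt included) or the end-of-sequence token appears. Assuming greedy decoding (no sampling configured), the loop works roughly like the toy pure-Python version below, where the hypothetical `next_token_logits` callable stands in for the model. It illustrates the idea only and is not the Transformers implementation.

```python
from typing import Callable, List, Optional


def greedy_generate(
    next_token_logits: Callable[[List[int]], List[float]],
    input_ids: List[int],
    max_length: int,
    eos_token_id: Optional[int] = None,
) -> List[int]:
    """Toy greedy decoding loop; like max_length in generate, the limit includes the prompt."""
    tokens = list(input_ids)
    while len(tokens) < max_length:
        logits = next_token_logits(tokens)
        # Pick the highest-scoring token id (ties resolve to the lowest id).
        next_id = max(range(len(logits)), key=lambda i: logits[i])
        tokens.append(next_id)
        if eos_token_id is not None and next_id == eos_token_id:
            break
    return tokens


def toy_model(tokens: List[int]) -> List[float]:
    """Stand-in 'model' over a 5-token vocabulary that always predicts last + 1."""
    logits = [0.0] * 5
    logits[(tokens[-1] + 1) % 5] = 1.0
    return logits


if __name__ == "__main__":
    print(greedy_generate(toy_model, [0, 1], max_length=10, eos_token_id=4))
    print(greedy_generate(toy_model, [0, 1], max_length=6))
```

The first call stops early at the end-of-sequence token, while the second runs until the total length reaches `max_length`, which is why lowering `max_length` directly limits how much text is produced.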

Troubleshooting Common Issues

While working with Minitron 8B, you might encounter some issues. Below are common problems and their respective solutions:

1. Model Not Found Error:

– Ensure that the `model_path` variable is set exactly to `"nvidia/Minitron-8B-Base"` and that your internet connection is stable enough to download the model files.

2. CUDA Out of Memory Error:

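– If the error appears during generation, lower the `max_length` parameter in `model.generate` or shorten the prompt. If it appears while the model is loading, the GPU does not have room for the bfloat16 weights (roughly 16 GB for about 8 billion parameters); use a GPU with more memory, or pass `device_map="auto"` (which requires the `accelerate` package) to spread the model across the available devices and CPU memory.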

3. Incompatibility with Other Libraries:

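– Make sure the Transformers fork installed above is the version actually in use, since a later `pip install -U transformers` can replace it. Also confirm that your PyTorch version is compatible with that Transformers version; refer to the library documentation for version requirements.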
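For the out-of-memory case, a useful rule of thumb is that the weights alone take about parameters × bytes per parameter, before counting activations and the key/value cache, which grow with `max_length`. The helper below is a rough estimate under that assumption; the function name is hypothetical and 8 billion is a nominal parameter count used for illustration (check the model card for the exact figure).

```python
# Bytes needed to store one parameter in each precision.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate memory for model weights only (no activations or KV cache), in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # 8e9 is a nominal parameter count, not the model's exact size.
    for dtype in ("float32", "bfloat16"):
        print(f"{dtype}: {weight_memory_gib(8e9, dtype):.1f} GiB")
```

This prints about 29.8 GiB for float32 and 14.9 GiB for bfloat16, which is why loading in bfloat16 matters on a single consumer or mid-range GPU.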

For further troubleshooting questions or issues, contact our fxis.ai data science team.

Conclusion

With this guide, you should be ready to start working with Minitron 8B. Its efficiency makes it a practical base model for experiments and development projects. Dive in, experiment, and generate some captivating text with Minitron! Happy coding!