This guide walks through fine-tuning the uncased BERT Base model on the Jigsaw Unintended Bias in Toxicity Classification dataset, one step at a time.

What is BERT?

BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained language representation model. Rather than treating each word in isolation, it uses the words on both sides of a token to build a context-dependent representation, which helps it capture meanings that depend on the surrounding text.

Getting Started

To begin, ensure you have the necessary libraries installed. You will need:

- Transformers: This library from Hugging Face makes it easy to use pre-trained models such as BERT.

- Pandas: For data manipulation and analysis.

- Scikit-learn: For evaluating model performance.
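Before going further, it can help to confirm that these libraries are actually installed. The snippet below is a minimal sketch using only the Python standard library. The helper name `installed_versions` is hypothetical, and the `torch` entry is an assumption: Transformers needs a deep learning backend, and PyTorch is assumed here.

```python
from importlib import metadata

# Distribution names as published on PyPI. "torch" is an assumption:
# the list above does not name a backend, PyTorch is assumed here.
REQUIRED = ["transformers", "pandas", "scikit-learn", "torch"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as missing can be installed with pip under the same distribution name.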

Steps to Fine-Tune BERT

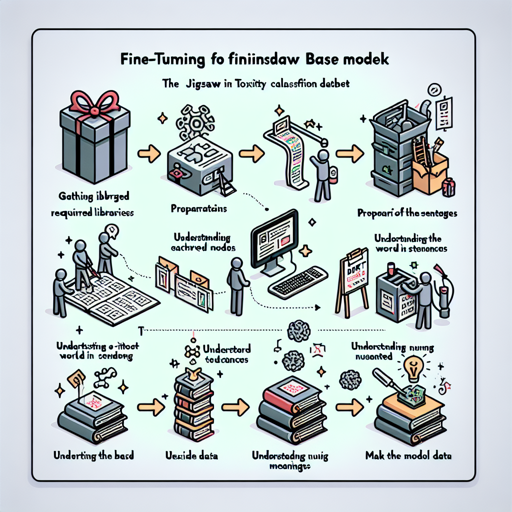

Fine-tuning BERT on the Jigsaw dataset breaks down into the following steps:

- Load the dataset from Kaggle.

- Preprocess the text data. The main task is tokenization: converting the input text into the token IDs that BERT expects.

- Set up the model configuration, for example the number of output labels and the maximum sequence length.

- Split your dataset into training and validation sets.

- Train the model so that it learns to classify toxicity from the training set.

- Evaluate the model on the validation set using precision, recall, and F1 score to check how well it generalizes to unseen data.
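The steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a definitive pipeline. It assumes the Kaggle training file has a `comment_text` column and a `target` toxicity score between 0 and 1, that a score of 0.5 or more counts as toxic, and that PyTorch is the backend. The function names, the 128-token limit, and the hyperparameters are illustrative choices.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

MODEL_NAME = "bert-base-uncased"


def prepare_frame(df, threshold=0.5):
    """Keep the text column and derive a binary label from the toxicity score."""
    out = df[["comment_text", "target"]].dropna(subset=["comment_text"]).copy()
    # Assumption: a score at or above the threshold counts as toxic.
    out["label"] = (out["target"] >= threshold).astype(int)
    return out[["comment_text", "label"]].reset_index(drop=True)


def split_frame(df, val_fraction=0.1, seed=42):
    """Stratified split so both sets keep the same toxic/non-toxic ratio."""
    return train_test_split(
        df, test_size=val_fraction, stratify=df["label"], random_state=seed
    )


def fine_tune(train_df, val_df, output_dir="bert-jigsaw"):
    """Tokenize both frames and fine-tune BERT with the Hugging Face Trainer."""
    # Heavy imports stay local so the data helpers above work without them.
    import torch
    from transformers import (
        AutoModelForSequenceClassification,
        AutoTokenizer,
        Trainer,
        TrainingArguments,
    )

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

    class CommentDataset(torch.utils.data.Dataset):
        def __init__(self, frame):
            # Tokenize everything up front; 128 tokens is an illustrative limit.
            self.enc = tokenizer(
                frame["comment_text"].tolist(),
                truncation=True,
                padding="max_length",
                max_length=128,
            )
            self.labels = frame["label"].tolist()

        def __len__(self):
            return len(self.labels)

        def __getitem__(self, i):
            item = {k: torch.tensor(v[i]) for k, v in self.enc.items()}
            item["labels"] = torch.tensor(self.labels[i])
            return item

    model = AutoModelForSequenceClassification.from_pretrained(
        MODEL_NAME, num_labels=2
    )
    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=2,
        per_device_train_batch_size=16,
        per_device_eval_batch_size=32,
        learning_rate=2e-5,
    )
    trainer = Trainer(
        model=model,
        args=args,
        train_dataset=CommentDataset(train_df),
        eval_dataset=CommentDataset(val_df),
    )
    trainer.train()
    return trainer
```

Assuming the Kaggle file has been saved locally as `train.csv` (a hypothetical path), the pieces chain as `train_df, val_df = split_frame(prepare_frame(pd.read_csv("train.csv")))` followed by `fine_tune(train_df, val_df)`. The full dataset is large, so it is worth trying the pipeline on a small sample first.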

Understanding Fine-Tuning with an Analogy

Think of fine-tuning BERT as training a chef (the model) to create a specialized dish (toxicity classification) using a set of ingredients (your dataset). The chef already knows basic cooking skills (pre-trained BERT) but requires specific knowledge of the dish’s flavors (toxicity nuances) through practice (fine-tuning).

Each time the chef tastes a dish (evaluation), they adjust the recipe (training iterations) based on the feedback (model performance metrics) until the dish is just right.
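In code, the "tasting" step amounts to scoring the model's predictions on held-out data. The function below is a small sketch of how precision, recall, and F1 might be computed from raw model outputs. It assumes a two-class setup where label 1 means toxic, and it takes a `(logits, labels)` pair, which is the shape the Hugging Face `Trainer` passes to a `compute_metrics` callback.

```python
import numpy as np
from sklearn.metrics import precision_recall_fscore_support


def compute_metrics(eval_pred):
    """Turn (logits, labels) into precision, recall, and F1 for the toxic class."""
    logits, labels = eval_pred
    # The predicted class is the index of the largest logit in each row.
    preds = np.argmax(logits, axis=-1)
    precision, recall, f1, _ = precision_recall_fscore_support(
        labels, preds, average="binary", zero_division=0
    )
    return {
        "precision": float(precision),
        "recall": float(recall),
        "f1": float(f1),
    }
```

Passing `compute_metrics=compute_metrics` when constructing a `Trainer` makes `trainer.evaluate()` report these values alongside the loss.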

Troubleshooting Tips

If you encounter issues during the fine-tuning process, here are some troubleshooting ideas:

- Memory Errors: If you run out of RAM or GPU memory, try a smaller batch size or a shorter maximum sequence length.

- Data Quality: Double-check your dataset for missing values or incorrect formats that might lead to errors during training.

- Training Time: Fine-tuning on a dataset of this size can be slow. Allow enough epochs for the model to learn, and consider starting with a subset of the data to confirm that the pipeline works.
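Two of these checks are easy to script. The sketch below is illustrative only: both helper names are hypothetical, and the column names `comment_text` and `target` are assumed to match the Kaggle training file. The first helper counts common data problems. The second builds memory-friendly settings that can be unpacked into the Transformers `TrainingArguments` constructor, trading a smaller per-device batch for gradient accumulation.

```python
import pandas as pd


def data_quality_report(df, text_col="comment_text", score_col="target"):
    """Count rows that are likely to cause trouble during training."""
    text = df[text_col]
    score = df[score_col]
    return {
        "missing_text": int(text.isna().sum()),
        # Non-null comments that contain only whitespace.
        "blank_text": int(text.dropna().str.strip().eq("").sum()),
        "missing_score": int(score.isna().sum()),
        # Toxicity scores are assumed to lie between 0 and 1.
        "score_out_of_range": int((~score.dropna().between(0.0, 1.0)).sum()),
    }


def low_memory_args(effective_batch_size=32, per_device_batch_size=8, use_fp16=False):
    """Keep the effective batch size while lowering per-step memory use."""
    steps = max(1, effective_batch_size // per_device_batch_size)
    return {
        "per_device_train_batch_size": per_device_batch_size,
        "gradient_accumulation_steps": steps,
        # Mixed precision needs a supporting GPU, so it is off by default.
        "fp16": use_fp16,
    }
```

For example, `TrainingArguments(output_dir="bert-jigsaw", **low_memory_args())` would train with batches of 8 and accumulate gradients over 4 steps.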


Conclusion

Fine-tuning BERT on this dataset gives you a toxicity classifier, and the dataset is designed to help you measure and reduce unintended bias in its predictions. Remember, continuous experimentation and evaluation are key to successful machine learning projects.