In the world of AI, size and performance often clash, much like carrying an oversized backpack on a hike. One practical remedy is to load models such as CodeLlama-7b with 4-bit weights using BitsAndBytes (the `bitsandbytes` library). This sharply reduces memory use while preserving most of the model’s quality. In this blog post, we’ll walk you through what this means, how to implement it, and how to troubleshoot common issues you might encounter along the way.

Understanding 4-Bit Conversion

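Imagine a paint palette with thousands of shades. If you restricted yourself to just 16 of them, you could still paint a recognizable picture, only with less nuance. Converting a model to 4 bits works the same way: each weight is stored in 4 bits, so it can take one of only 16 values instead of the 65,536 bit patterns available to a 16-bit float. In BitsAndBytes 4-bit mode, only the weights are stored this way; activations and matrix multiplications still run in a higher-precision compute dtype such as float16. The result is roughly a quarter of the weight memory of a 16-bit model, usually with only a modest loss in quality.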
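To make the idea concrete, here is a minimal sketch of block-wise absmax quantization in plain Python. It is a simplified stand-in, not the BitsAndBytes implementation: it maps each weight to a uniform signed integer code in [-7, 7] with one scale per block, whereas the NF4 type offered by BitsAndBytes uses 16 non-uniform levels tuned for normally distributed weights. The function names are hypothetical.

```python
def quantize_blockwise_4bit(weights, block_size=64):
    """Quantize floats to signed 4-bit codes in [-7, 7], one absmax scale per block."""
    codes, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        absmax = max(abs(w) for w in block)
        # Avoid dividing by zero when a block is all zeros.
        scale = absmax / 7 if absmax > 0 else 1.0
        scales.append(scale)
        # Round each weight to the nearest multiple of `scale`; clamp guards against float error.
        codes.extend(max(-7, min(7, round(w / scale))) for w in block)
    return codes, scales


def dequantize_blockwise_4bit(codes, scales, block_size=64):
    """Reconstruct approximate floats from codes and their per-block scales."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]


# Usage: two blocks of four weights each.
weights = [0.8, -0.05, 0.31, -1.2, 0.002, 0.6, -0.44, 0.9]
codes, scales = quantize_blockwise_4bit(weights, block_size=4)
restored = dequantize_blockwise_4bit(codes, scales, block_size=4)
for w, r in zip(weights, restored):
    print(f"{w:+.3f} -> {r:+.3f}")
```

Keeping a separate scale for each small block means one unusually large weight only coarsens the rounding of its own block, not of the whole tensor.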

Steps to Convert CodeLlama-7b to 4-Bit

- Step 1: Ensure you have CodeLlama-7b downloaded and ready for conversion.

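- Step 2: Install the `bitsandbytes` library if you haven’t already, along with `transformers` and `accelerate` if you load the model through Hugging Face Transformers.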

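- Step 3: Load the model with a 4-bit quantization configuration, as described in the BitsAndBytes and Hugging Face Transformers documentation. The quantization is applied while the weights are loaded, so there is no separate conversion script to run.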

- Step 4: Evaluate the converted model against the original, both on output quality (for example, perplexity on held-out text) and on memory use.
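The steps above can be sketched with the Hugging Face Transformers integration of BitsAndBytes. This is a minimal sketch under assumptions, not the only way to do it: it assumes `torch`, `transformers`, `accelerate`, and `bitsandbytes` are installed, a CUDA GPU is available, and the checkpoint is the Hub repository `codellama/CodeLlama-7b-hf`. The helper names `load_codellama_4bit` and `perplexity` are hypothetical; imports sit inside the functions so the file can be read without the GPU stack installed.

```python
import math

# Assumption: the Hugging Face Hub checkpoint for CodeLlama-7b.
MODEL_ID = "codellama/CodeLlama-7b-hf"


def load_codellama_4bit(model_id=MODEL_ID):
    """Steps 1-3: load the model, quantizing its weights to 4 bits at load time."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,                     # store linear-layer weights in 4 bits
        bnb_4bit_quant_type="nf4",             # "nf4" or "fp4"
        bnb_4bit_compute_dtype=torch.float16,  # matmuls run in 16-bit
        bnb_4bit_use_double_quant=True,        # also quantize the per-block scales
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=bnb_config,
        device_map="auto",  # requires the accelerate package
    )
    return model, tokenizer


def perplexity(model, tokenizer, text):
    """Step 4: perplexity of `text` under `model` (lower is better)."""
    import torch

    inputs = tokenizer(text, return_tensors="pt").to(model.device)
    with torch.no_grad():
        # Passing labels makes the model return the mean next-token cross-entropy.
        loss = model(**inputs, labels=inputs["input_ids"]).loss
    return math.exp(loss.item())
```

To compare against the original, you could load the unquantized model with `torch_dtype=torch.float16` and no `quantization_config`, run `perplexity` on the same held-out text, and compare `model.get_memory_footprint()` for both. The `bnb_4bit_quant_type` and `bnb_4bit_compute_dtype` values are the natural settings to revisit if quality drops.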

Impact on Performance

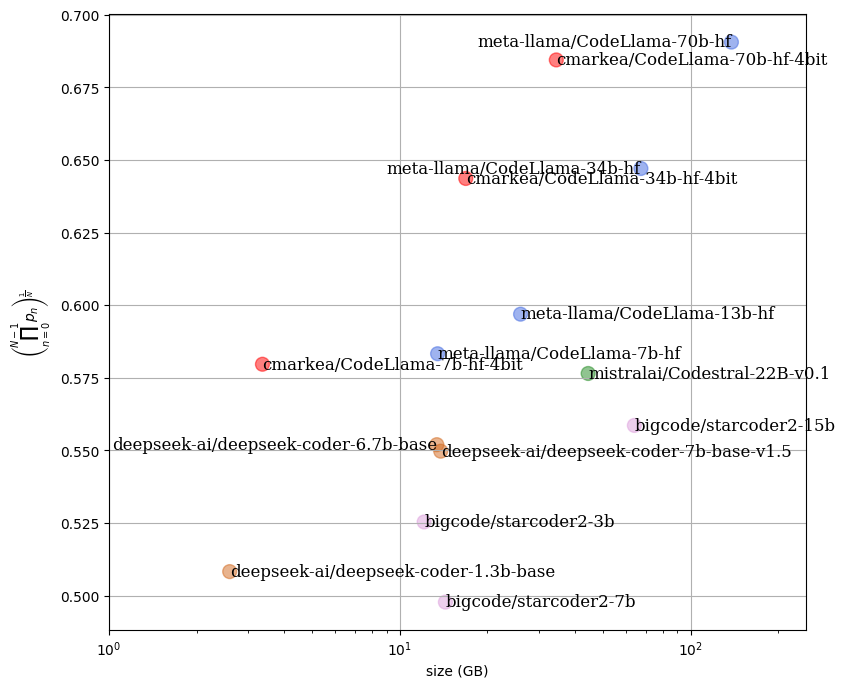

The beauty of this approach is that it lets you optimize a model without giving up much of its capability. As the performance figure above illustrates, the quantized model performs comparably to the original while requiring significantly less memory. This can be a game-changer when you are working with limited hardware.
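A back-of-the-envelope estimate shows where the memory savings come from. The numbers below are illustrative assumptions, not measurements from the figure: a nominal 7 billion parameters (the exact count for CodeLlama-7b differs slightly) and, for the 4-bit case, a hypothetical overhead of one 32-bit scale per block of 64 weights. The estimate covers weights only and ignores activations, the KV cache, framework overhead, and any layers left in 16-bit. The function `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_weight, block_size=0, scale_bits=32):
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes).

    If block_size > 0, add one scale of `scale_bits` bits per block of
    `block_size` weights (an assumed overhead model for block-wise quantization).
    """
    effective_bits = bits_per_weight
    if block_size > 0:
        effective_bits += scale_bits / block_size
    return num_params * effective_bits / 8 / 1e9


params = 7e9  # nominal "7B" parameter count
fp16 = weight_memory_gb(params, 16)
q4 = weight_memory_gb(params, 4, block_size=64)
print(f"fp16: {fp16:.2f} GB, 4-bit: {q4:.2f} GB")  # prints: fp16: 14.00 GB, 4-bit: 3.94 GB
```

Even with the per-block scales included, the 4-bit weights need a bit over a quarter of the 16-bit storage.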

Troubleshooting Common Issues

While the conversion process is straightforward, you may run into some bumps in the road. Here are common issues you might encounter:

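- Model Crashes: If the model crashes or runs out of memory after conversion, check that you have enough GPU memory and system RAM. Converting to 4 bits lowers the requirements, but your hardware still has to meet them. Note also that BitsAndBytes 4-bit quantization has traditionally required a CUDA-capable NVIDIA GPU.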

- Performance Loss: If you notice a drop in quality, revisit the quantization settings. For example, compare the `nf4` and `fp4` quantization types, or try a different compute dtype (`float16` or `bfloat16`).

- Incompatible Libraries: Make sure your versions of BitsAndBytes, Transformers, Accelerate, and PyTorch are up to date and compatible with one another and with your CUDA setup.
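For the library-compatibility issue, a quick first check is to print what is actually installed. This minimal sketch uses only the standard library's `importlib.metadata`, plus PyTorch's `torch.cuda.is_available()` when PyTorch is present; the package list and the helper names are assumptions you can adjust.

```python
from importlib import metadata

# Assumed set of packages involved in loading a 4-bit model via Transformers.
PACKAGES = ("torch", "transformers", "accelerate", "bitsandbytes")


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def cuda_available():
    """Return True/False if PyTorch is importable, else None."""
    try:
        import torch
    except ImportError:
        return None
    return torch.cuda.is_available()


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:>13}: {version or 'NOT INSTALLED'}")
    print(f"CUDA available: {cuda_available()}")
```

Comparing this output against the version requirements in each library's release notes usually narrows down an incompatibility faster than reading a long stack trace.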


Conclusion

Converting models to 4 bits can substantially reduce their memory footprint, letting you run larger models on the hardware you already have. As you explore this area, expect some technical challenges along the way. At fxis.ai, we believe such advancements are crucial for the future of AI because they make capable models practical on a wider range of hardware. Our team continually explores new methods to push the boundaries of artificial intelligence, so that our clients benefit from the latest technological innovations.