Have you ever wanted to turn a small, blurry image into a sharp, high-resolution one? Today we are diving into image super-resolution with the Cascading Residual Network (CARN). The model upscales images by a factor of 2, 3, or 4, which makes it a handy tool for enhancing image quality.

What is CARN?

CARN was introduced in the paper Fast, Accurate, and Lightweight Super-Resolution with Cascading Residual Network by Ahn et al. in 2018. The pre-trained model used here was trained on the DIV2K dataset, whose 800 high-quality training images were augmented to 4000 images, with a separate set of 100 images used for validation. The goal is to take a low-resolution (LR) image and restore a high-resolution (HR) version of it.
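The jump from 800 to 4000 training images is what you get from taking five crops per image, which matches the name of the `augment_five_crop` helper used in the training code later in this article. Here is a minimal sketch of that arithmetic, assuming the conventional layout of four corners plus the center. The function `five_crop_boxes`, the image size, and the crop size are hypothetical and not taken from the library.

```python
def five_crop_boxes(width, height, crop_w, crop_h):
    """Return (left, top, right, bottom) boxes: four corners plus the center."""
    if crop_w > width or crop_h > height:
        raise ValueError("crop size must fit inside the image")
    cx = (width - crop_w) // 2
    cy = (height - crop_h) // 2
    return [
        (0, 0, crop_w, crop_h),                            # top-left
        (width - crop_w, 0, width, crop_h),                # top-right
        (0, height - crop_h, crop_w, height),              # bottom-left
        (width - crop_w, height - crop_h, width, height),  # bottom-right
        (cx, cy, cx + crop_w, cy + crop_h),                # center
    ]


# Hypothetical image and crop size, chosen only for illustration
boxes = five_crop_boxes(2040, 1356, 1020, 678)
num_training_images = 800 * len(boxes)
print(num_training_images)  # 4000
```

The library may pick crop sizes differently, so treat this only as an explanation of where the factor of five comes from.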

Understanding the Code: The CARN Model

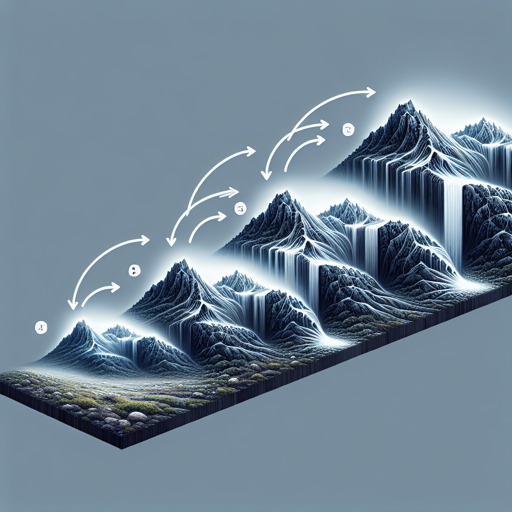

To grasp how this model works, let’s compare the CARN model to a traditional catering service. You might start with a basic dish (the low-resolution image), and to enhance it, several chefs (the cascading layers) carefully add ingredients and seasoning (the residual connections) to improve its taste (the image quality). As the chefs layer in the flavors, they ensure that the final presentation (the upscaled image) is not only larger but also more delectable and visually appealing.
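Stepping out of the kitchen for a moment: in the CARN paper, the output of each residual block is combined with the outputs of all earlier blocks and fused by a 1x1 convolution before being passed on. The toy sketch below illustrates only that wiring, in plain Python on a single pixel's channel vector. All function names are hypothetical, the "1x1 convolution" is simplified to a weighted sum that does not mix channels, and the weights are fixed rather than learned.

```python
def residual_unit(x, w):
    """Toy residual unit: y = x + relu(w * x), applied per channel."""
    return [xi + max(0.0, w * xi) for xi in x]


def fuse_1x1(groups, weights):
    """Simplified stand-in for a 1x1 convolution over concatenated features.

    Each group is a channel vector; the result is a weighted sum of the
    groups, which maps the concatenation back to the original width.
    """
    return [
        sum(wg * g[c] for wg, g in zip(weights, groups))
        for c in range(len(groups[0]))
    ]


def cascading_block(x, unit_weights):
    """Run residual units in sequence, fusing all earlier outputs each time."""
    collected = [x]  # the block input is carried forward too
    current = x
    for w in unit_weights:
        out = residual_unit(current, w)
        collected.append(out)
        # Equal fusion weights here; in a real network these are learned
        fusion = [1.0 / len(collected)] * len(collected)
        current = fuse_1x1(collected, fusion)
    return current


features = [1.0, -2.0, 0.5]  # one pixel, three channels (made-up values)
result = cascading_block(features, [0.5, 0.5, 0.5])
print(result)
```

The point to notice is the `collected` list: every unit sees a fusion of everything produced before it, not just the previous unit's output.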

How to Use CARN?

Using the CARN model to upscale images involves a few simple steps:

- Install the required library using the command:

    pip install super-image

- Here’s how you can use a pre-trained model to upscale your image:

    from super_image import CarnModel, ImageLoader
    from PIL import Image
    import requests

    # Download the example low-resolution image
    url = "https://paperswithcode.com/media/datasets/Set5-0000002728-07a9793f_zA3bDjj.jpg"
    image = Image.open(requests.get(url, stream=True).raw)

    model = CarnModel.from_pretrained("eugenesiow/carn", scale=2)  # scale can be 2, 3, or 4
    inputs = ImageLoader.load_image(image)
    preds = model(inputs)

    ImageLoader.save_image(preds, "./scaled_2x.png")  # save the output 2x scaled image
    ImageLoader.save_compare(inputs, preds, "./scaled_2x_compare.png")  # compare with bicubic scaling

Training the Model

If you want to train your own model, you can follow these steps:

- Preprocess the data using this code:

    from datasets import load_dataset
    from super_image.data import EvalDataset, TrainDataset, augment_five_crop

    # Load the DIV2K training split and augment it
    augmented_dataset = load_dataset("eugenesiow/Div2k", "bicubic_x4", split="train").map(
        augment_five_crop, batched=True, desc="Augmenting Dataset"
    )
    train_dataset = TrainDataset(augmented_dataset)
    eval_dataset = EvalDataset(load_dataset("eugenesiow/Div2k", "bicubic_x4", split="validation"))

- Next, initiate training:

    from super_image import Trainer, TrainingArguments, CarnModel, CarnConfig

    training_args = TrainingArguments(
        output_dir="./results",  # output directory
        num_train_epochs=1000,  # total number of training epochs
    )

    config = CarnConfig(scale=4, bam=True)  # train a model to upscale 4x
    model = CarnModel(config)

    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
    )

    trainer.train()

Evaluation Metrics

To assess the model’s performance, we use two metrics: PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index). Both measure how closely the upscaled images match the original high-resolution images, and higher values are better for both. Standard benchmark datasets for this comparison include Set5, Set14, BSD100, and Urban100.
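To make the two metrics concrete, here is a minimal pure-Python sketch. PSNR follows the standard definition, 10 * log10(MAX^2 / MSE). The SSIM shown is a simplified global variant computed once over the whole image, whereas the usual SSIM averages the same formula over local windows, so its numbers will not match published benchmark tables. The function names and pixel values are made up for illustration.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(reference, estimate, max_value=255.0):
    """SSIM computed once over the whole image (no sliding window)."""
    n = len(reference)
    mu_x = sum(reference) / n
    mu_y = sum(estimate) / n
    var_x = sum((r - mu_x) ** 2 for r in reference) / n
    var_y = sum((e - mu_y) ** 2 for e in estimate) / n
    cov = sum((r - mu_x) * (e - mu_y) for r, e in zip(reference, estimate)) / n
    c1 = (0.01 * max_value) ** 2  # stabilizing constants from the SSIM definition
    c2 = (0.03 * max_value) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


hr = [52, 55, 61, 59, 79, 61, 76, 61]  # made-up grayscale ground-truth pixels
sr = [54, 55, 60, 59, 77, 63, 76, 60]  # made-up upscaled result
print(round(psnr(hr, sr), 2), round(global_ssim(hr, sr), 4))
```

For real evaluations, a tested implementation such as the one in scikit-image is a safer choice than hand-rolled code.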

Troubleshooting Ideas

If you encounter any issues while using CARN, consider the following troubleshooting steps:

- Ensure that your installation of the required libraries is successful.

- Double-check the URL for the images you wish to upscale to confirm they are valid.

- If the model fails to load, confirm you have the correct model path or name.

- Validate your GPU setup if training, as insufficient GPU resources may lead to errors.
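The checks above can be partly automated. The following is a rough sketch using only the standard library, with an optional PyTorch check. It assumes that `super_image` is the import name of the installed package (as in the usage code earlier) and that training runs on PyTorch. The helper names are hypothetical, and the URL check is purely syntactic, so it does not confirm that the image is actually reachable.

```python
import importlib.util
from urllib.parse import urlparse


def is_installed(module_name):
    """True if a top-level module can be found, without importing it."""
    return importlib.util.find_spec(module_name) is not None


def looks_like_http_url(url):
    """Cheap syntactic check; it does not confirm the URL is reachable."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def gpu_available():
    """Report CUDA availability, or None if PyTorch is not installed."""
    if not is_installed("torch"):
        return None
    import torch  # imported lazily so the script still runs without PyTorch
    return torch.cuda.is_available()


print("super_image installed:", is_installed("super_image"))
print("URL looks valid:", looks_like_http_url("https://example.com/image.png"))
print("GPU available:", gpu_available())
```

If `gpu_available()` returns `False`, training may still work on the CPU but will likely be very slow for 1000 epochs.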
