Welcome to the world of natural language processing! Today, we will explore how to make the most of the miniature version of the [alpindale/magnum-72b-v1](https://huggingface.co/alpindale/magnum-72b-v1) model. This model aims to replicate the prose quality of the Claude 3 models and is fine-tuned for conversational, instruction-following use. Let's dive into its prompt format, how to use it, and some handy troubleshooting tips.

Understanding the Model

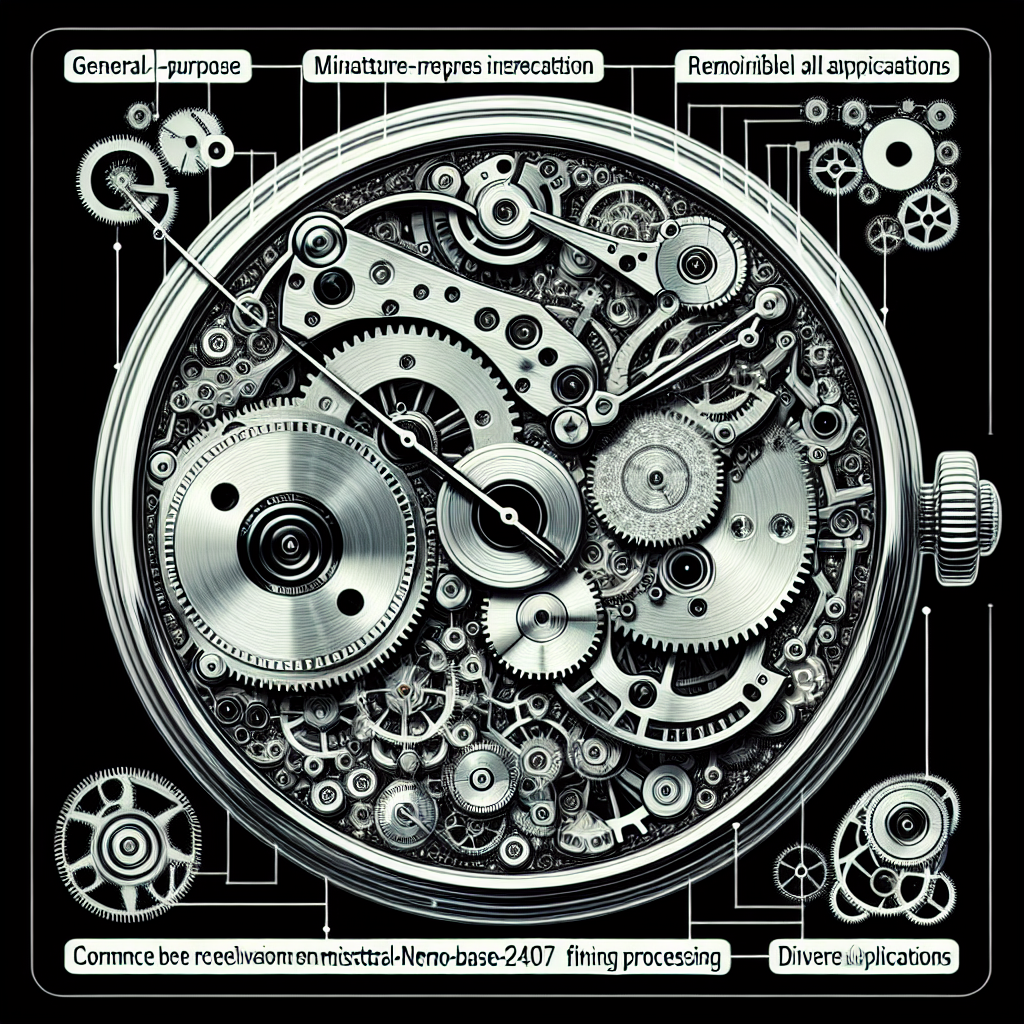

The miniature Magnum model is like a finely crafted Swiss watch. Just as Swiss watchmakers meticulously refine every cog and wheel, this model was fine-tuned on top of Mistral-Nemo-Base-2407, with a general-purpose instruction dataset included to improve coherence and alignment. The result is a model that produces clear, context-aware responses across a range of conversational tasks.

Prompting the Model

To use this model effectively, wrap your input in the Mistral-style instruction format it was trained on. Think of this as sending a text message to a well-trained assistant. Here's how to format a two-turn conversation:

"""[INST] Hi there! [/INST]Nice to meet you![INST] Can I ask a question? [/INST]"""

– The `[INST]` and `[/INST]` tags wrap each of your messages, like an envelope around a letter; the model writes its reply right after the closing `[/INST]`.

– The `</s>` token marks the end of the model's previous reply, and `<s>` marks the start of the whole conversation, so the model can tell where one turn stops and the next begins.

This simple format allows you to ask complex questions or give instructions easily.
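Building these strings by hand gets error-prone once a conversation has several turns. Below is a minimal sketch of a prompt builder that reproduces the format above from a list of completed exchanges; `build_prompt` is a hypothetical helper, not part of any library, and it assumes the literal token strings `<s>` and `</s>` shown in the example.

```python
from typing import List, Tuple

# Assumed token strings, taken from the example prompt format above.
BOS = "<s>"
EOS = "</s>"


def build_prompt(history: List[Tuple[str, str]], next_user_msg: str) -> str:
    """Hypothetical helper: build a Mistral-style prompt ending in an open [/INST].

    history: completed (user, assistant) exchanges, oldest first.
    next_user_msg: the new user message that the model should answer.
    """
    parts = [BOS]
    for user_msg, assistant_msg in history:
        # A finished exchange: the wrapped user turn, then the reply closed by EOS.
        parts.append(f"[INST] {user_msg} [/INST]{assistant_msg}{EOS}")
    # The final user turn is left open so generation continues after [/INST].
    parts.append(f"[INST] {next_user_msg} [/INST]")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_prompt([("Hi there!", "Nice to meet you!")], "Can I ask a question?")
    print(prompt)
```

Running it prints the same two-turn prompt as the example above. If your tokenizer already prepends the beginning-of-sequence token on its own, you may want to drop the leading `<s>` here so it is not duplicated.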

Troubleshooting Tips

Sometimes, you might encounter hurdles while using the model. Fear not! Here are some troubleshooting ideas to keep in mind:

– Model Doesn't Respond: Ensure that every message is wrapped in `[INST]` and `[/INST]` tags and that the prompt ends with `[/INST]`. If the model still seems unresponsive, start a fresh session; a small formatting error early in a conversation can throw off later turns.

– Responses Don’t Make Sense: If the output is incoherent or off-topic, double-check that your input provides enough context. Consider rephrasing your questions or giving clearer instructions. More specific prompts yield better responses!

– Technical Glitches: If you consistently face issues that can’t be resolved with formatting or context improvements, there might be a temporary server issue or a bug. Reboot your environment and try again, or check online for any updates related to the model.
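For the first tip, a quick automated check can catch unbalanced tags before you send a prompt. The sketch below is a hypothetical helper, `check_prompt`, that assumes the `[INST]`/`[/INST]` format shown earlier; it only verifies tag pairing and that the prompt ends with `[/INST]`, not the content of your messages.

```python
import re
from typing import List


def check_prompt(prompt: str) -> List[str]:
    """Hypothetical helper: return formatting problems found in a prompt (empty list if none)."""
    problems: List[str] = []
    open_turn = False  # True while inside an [INST] ... [/INST] pair
    # Walk through every [INST] or [/INST] tag in order of appearance.
    for match in re.finditer(r"\[/?INST\]", prompt):
        if match.group() == "[INST]":
            if open_turn:
                problems.append(f"[INST] at index {match.start()} opens a turn before the previous one is closed")
            open_turn = True
        else:  # "[/INST]"
            if not open_turn:
                problems.append(f"[/INST] at index {match.start()} has no matching [INST]")
            open_turn = False
    if open_turn:
        problems.append("last [INST] is never closed with [/INST]")
    # The model should be asked to speak next, so the prompt ends with a closing tag.
    if not prompt.rstrip().endswith("[/INST]"):
        problems.append("prompt should end with [/INST] so the model knows it is its turn")
    return problems


if __name__ == "__main__":
    print(check_prompt("<s>[INST] Hi there! [/INST]Nice to meet you!</s>[INST] Can I ask a question?"))
```

An empty list means the tags are balanced; any entries point to where the structure breaks, which is usually the first thing to fix when the model stalls or rambles.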


Conclusion

The miniature Magnum model opens up new avenues for natural language interaction, providing users with a powerful tool for various applications. By following the proper prompting techniques and keeping these troubleshooting tips in mind, you can maximize your experience with this innovative model. Happy chatting!