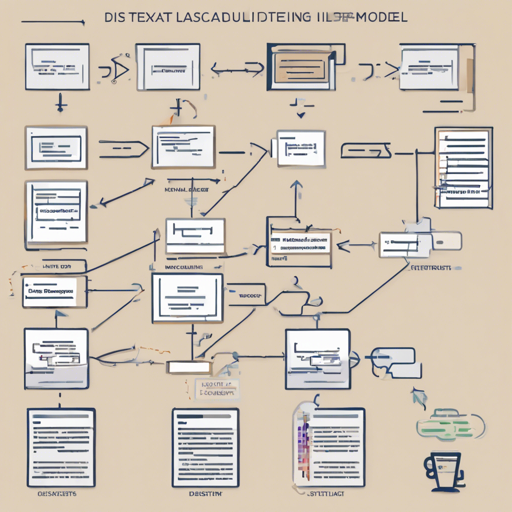

DistilBERT is a compact, fast model that works well for sequence classification tasks. This article shows how to use it with TextAttack, a Python framework for adversarial attacks, data augmentation, and model training in NLP.

Understanding the DistilBERT Model and TextAttack

DistilBERT is a distilled version of BERT that keeps most of BERT's accuracy at a fraction of the size: its authors report a model about 40% smaller and 60% faster that retains roughly 97% of BERT's language-understanding performance. That makes it a good fit for text classification tasks where speed matters, such as sentiment analysis and topic categorization. TextAttack complements it with tools for training models, augmenting data, and testing robustness through adversarial attacks.

Model Training Overview

This specific model was fine-tuned on data from the GLUE benchmark, a collection of tasks commonly used for evaluating NLP models. The training settings were:

- Epochs: 5

- Batch Size: 128

- Learning Rate: 2e-05

- Maximum Sequence Length: 256
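As a minimal sketch, the settings above can be collected in a small configuration object. The `TrainingConfig` class and its `total_steps` helper are hypothetical names introduced for illustration and are not part of TextAttack; the dataset size in the usage line is also made up.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container for the fine-tuning settings listed above."""

    num_epochs: int = 5
    batch_size: int = 128
    learning_rate: float = 2e-5
    max_seq_length: int = 256

    def total_steps(self, num_examples: int) -> int:
        """Number of optimizer steps across all epochs (last partial batch included)."""
        steps_per_epoch = math.ceil(num_examples / self.batch_size)
        return self.num_epochs * steps_per_epoch


config = TrainingConfig()
# Assumed dataset size of 1,000 examples, for illustration only.
print(config.total_steps(num_examples=1000))  # 5 epochs * 8 batches = 40
```

Keeping the settings in one immutable object makes it easier to log them alongside results and to change a single value when troubleshooting.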

Because this is a classification task, the model was trained with a cross-entropy loss. After its first epoch, it reached an accuracy of approximately 0.563. For a two-label task like the one in the code below, that is only modestly above the 0.5 chance level, so treat it as a baseline to improve on rather than a final result.
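To make the loss concrete, the following sketch computes cross-entropy for a single example from raw model scores (logits), using only the standard library. The function name and the example logits are illustrative assumptions, not values taken from the model described here. With two classes, a model that cannot tell them apart scores ln 2 (about 0.693) per example, which is a useful reference point when reading early-epoch numbers.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Negative log-probability of the true class under a softmax over the logits."""
    # Subtract the largest logit before exponentiating for numerical stability.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum_exp - logits[label]


# Equal logits: the model is undecided between two classes, so the loss is ln(2).
uninformed = cross_entropy([0.0, 0.0], label=1)
# A confident, correct prediction gives a much smaller loss.
confident = cross_entropy([3.0, -1.0], label=0)
print(f"undecided: {uninformed:.4f}, confident: {confident:.4f}")
```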

Implementing the Model

To start implementing the DistilBERT model for your text classification tasks using TextAttack, follow these steps:

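import transformers

from textattack import Attacker, AttackArgs

from textattack.attack_recipes import TextFoolerJin2019

from textattack.datasets import HuggingFaceDataset

from textattack.models.wrappers import HuggingFaceModelWrapper

# Load your dataset (the validation split of GLUE SST-2)

dataset = HuggingFaceDataset('glue', 'sst2', split='validation')

# Load the pre-trained model and tokenizer; the classification head is randomly initialized until the model is fine-tuned

model = transformers.AutoModelForSequenceClassification.from_pretrained('distilbert-base-uncased', num_labels=2)

tokenizer = transformers.AutoTokenizer.from_pretrained('distilbert-base-uncased')

# Wrap the model so TextAttack can query it

model_wrapper = HuggingFaceModelWrapper(model, tokenizer)

# Define your attack (TextFooler is one of TextAttack's built-in recipes, used here as an example)

attack = TextFoolerJin2019.build(model_wrapper)

# Define the attacker, limiting the run to 10 examples

attacker = Attacker(attack, dataset, AttackArgs(num_examples=10))

# Perform the attack

results = attacker.attack_dataset()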

This snippet illustrates three steps: loading the dataset, loading the model, and running an attack against it. Think of it as assembling a jigsaw puzzle: each piece has its place, and together they show how the model classifies text and how well that classification holds up under adversarial input.
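To show what an adversarial attack does conceptually, here is a toy sketch that runs without TextAttack or any model download. It is not how TextAttack implements its attacks: the keyword classifier, the synonym table, and every name below are hypothetical and exist only for illustration. The idea it demonstrates is the same, though: make a small, meaning-preserving change to the input and check whether the prediction flips.

```python
from typing import Optional

# Toy keyword lists standing in for a real classifier (illustrative only).
POSITIVE_WORDS = {"good", "great", "enjoyable"}
NEGATIVE_WORDS = {"bad", "boring", "awful"}

# Hypothetical synonym table; a real attack would draw candidates from
# word embeddings or a thesaurus.
SYNONYMS = {"good": ["decent", "fine"], "great": ["terrific"], "boring": ["slow"]}


def classify(text: str) -> int:
    """Return 1 (positive) if positive keywords outnumber negative ones, else 0."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    return 1 if score > 0 else 0


def single_swap_attack(text: str) -> Optional[str]:
    """Try each single-word synonym swap; return the first text whose label changes."""
    original_label = classify(text)
    words = text.split()
    for i, word in enumerate(words):
        for candidate in SYNONYMS.get(word.lower(), []):
            perturbed = " ".join(words[:i] + [candidate] + words[i + 1:])
            if classify(perturbed) != original_label:
                return perturbed
    return None  # No single swap changed the prediction.


print(single_swap_attack("a good movie"))            # a decent movie
print(single_swap_attack("a good and great movie"))  # None
```

The first input is fooled by one swap because the toy classifier relies on a single keyword, while the second survives because two keywords support the prediction. Real attack recipes add constraints so that the perturbed text stays fluent and close in meaning to the original.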

Troubleshooting Common Issues

While working with the DistilBERT model through TextAttack, you may encounter some common issues. Here are a few troubleshooting tips:

- **Problem:** Model fails to converge.

  **Solution:** Experiment with adjusting the learning rate or increasing the batch size.

- **Problem:** Low accuracy.

  **Solution:** Ensure your dataset is properly preprocessed and consider fine-tuning the model with more epochs.

- **Problem:** Incompatibility issues.

  **Solution:** Verify that all dependencies are properly installed and compatible versions are being used.
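For the dependency check, one possible starting point is to print the versions that are actually installed. This sketch uses only the standard library (`importlib.metadata`); the helper name `installed_versions` is hypothetical, and the package list is an assumption about what a typical TextAttack setup depends on.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    report: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            report[name] = version(name)
        except PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    # Assumed package list; adjust it to match your environment.
    for name, found in installed_versions(["textattack", "transformers", "torch"]).items():
        print(f"{name}: {found or 'not installed'}")
```

Including this output when you ask for help makes version mismatches much easier to spot.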

If problems persist, consult the TextAttack repository on GitHub, which includes documentation and community discussions.

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

The Path Ahead

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.