In Natural Language Processing (NLP), understanding the structure and meaning of text is crucial, and part-of-speech (POS) tagging and dependency parsing are two of the core techniques for doing so. Today, we’ll explore how to use the Chinese RoBERTa Large UPOS model, which was pre-trained on Chinese Wikipedia texts. By the end of this article, you will be able to load this model and apply it to your own POS-tagging tasks!

Model Overview

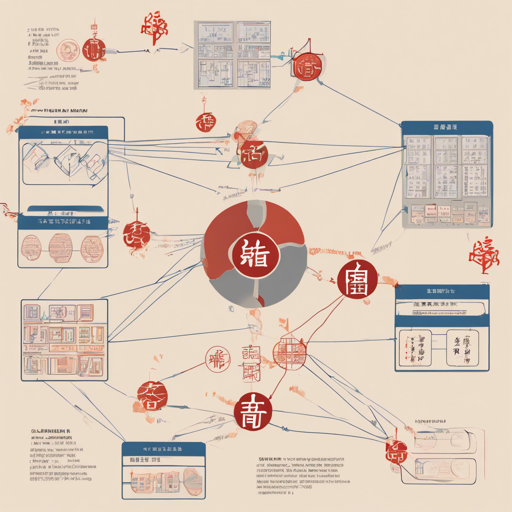

The Chinese RoBERTa Large UPOS model is a BERT-based model specifically engineered for token classification tasks, such as POS-tagging and dependency-parsing. It has been pre-trained on a rich dataset consisting of both simplified and traditional Chinese texts sourced from Chinese Wikipedia. The model assigns a Universal Part-Of-Speech (UPOS) tag to each word in a sentence, which helps in understanding its grammatical role.

Installation

Before jumping into how to use the model, make sure you have the necessary libraries installed. You can do this using pip:

pip install transformers esupar

How to Use the Model

Now that you have everything set up, let’s dive into the code to see how to deploy the model.

1. Using Transformers Library

The following code demonstrates how to use the model with the Transformers library:

from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("KoichiYasuoka/chinese-roberta-large-upos")

model = AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/chinese-roberta-large-upos")

2. Using esupar Library

If you prefer another approach, you can easily employ the esupar library:

import esupar

nlp = esupar.load("KoichiYasuoka/chinese-roberta-large-upos")

Understanding the Code with an Analogy

Imagine you are a translator in a foreign country, trying to understand the roles of different words in sentences written in Chinese. The Chinese RoBERTa Large UPOS model acts like your trusty bilingual dictionary that gives insights into the grammatical roles of words (nouns, verbs, adjectives, etc.) in context. Using the library functions is akin to opening this dictionary with the specific phrase you want to understand. Each time you call the tokenizer or model, it offers you a clarified understanding of your chosen text, allowing you to interact with the subtleties of the language.
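To make the tokenizer and model calls more concrete, here is a minimal sketch of how a sentence might be tagged once both objects are loaded. The helper names `pick_labels` and `tag_sentence` are hypothetical and not part of any library, and the sketch assumes PyTorch is installed alongside transformers. The label strings come straight from the model's own `id2label` mapping, so the sketch makes no assumption about their exact format.

```python
from typing import Dict, List, Sequence, Tuple

MODEL_NAME = "KoichiYasuoka/chinese-roberta-large-upos"


def pick_labels(logits: Sequence[Sequence[float]], id2label: Dict[int, str]) -> List[str]:
    """Return the highest-scoring label name for each token position."""
    labels = []
    for row in logits:
        best = max(range(len(row)), key=row.__getitem__)
        labels.append(id2label[best])
    return labels


def tag_sentence(text: str, model_name: str = MODEL_NAME) -> List[Tuple[str, str]]:
    """Load the model (downloads it on first use) and return (token, tag) pairs."""
    # Imported here so the pure helper above works without these packages.
    import torch
    from transformers import AutoModelForTokenClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForTokenClassification.from_pretrained(model_name)
    model.eval()

    encoded = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        # Shape of logits: (batch, sequence_length, num_labels); take the single batch item.
        logits = model(**encoded).logits[0]

    tokens = tokenizer.convert_ids_to_tokens(encoded["input_ids"][0].tolist())
    tags = pick_labels(logits.tolist(), model.config.id2label)

    # Skip the sentence-boundary tokens the tokenizer adds around the input.
    boundary = {tokenizer.cls_token, tokenizer.sep_token}
    return [(tok, tag) for tok, tag in zip(tokens, tags) if tok not in boundary]
```

Calling `tag_sentence("我把这本书看完了")` should return one pair per token. Because the model is large, the first call may take a while to download the weights.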

Troubleshooting

If you encounter issues while using the Chinese RoBERTa model, here are some troubleshooting tips:

- Ensure that you have installed the transformers and esupar libraries correctly.

- Check for internet connectivity issues if your model fails to download or load.

- For incompatible model versions, verify the names and versions of the models you are trying to load.
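The first and last tips can be checked quickly from Python. The sketch below uses only the standard library to report which versions of the two packages are installed. The function name `installed_versions` is hypothetical, chosen just for this illustration.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(["transformers", "esupar"]).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

If either package shows up as not installed, rerunning the pip command from the Installation section is the first thing to try.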


Additional Resources

If you’re looking for more capabilities such as advanced dependency parsing and tokenization, you might want to explore the esupar library, which works hand in hand with the Chinese RoBERTa model.
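As a rough sketch of what that looks like in practice: the object returned by `esupar.load` can be called on a sentence, and printing the result gives the parse in CoNLL-U format, as the esupar README shows. The helper names `conllu_to_pairs` and `parse_with_esupar` below are hypothetical, and the sketch assumes that `str(doc)` yields the same CoNLL-U text that `print(doc)` displays.

```python
from typing import List, Tuple

MODEL_NAME = "KoichiYasuoka/chinese-roberta-large-upos"


def conllu_to_pairs(conllu: str) -> List[Tuple[str, str]]:
    """Extract (FORM, UPOS) pairs from CoNLL-U formatted text."""
    pairs = []
    for line in conllu.splitlines():
        # Skip blank separator lines and "# ..." comment lines.
        if not line.strip() or line.startswith("#"):
            continue
        cols = line.split("\t")
        # Word rows have tab-separated columns: ID, FORM, LEMMA, UPOS, ...
        # Rows whose ID is a range (1-2) or decimal (1.1) are not plain words.
        if len(cols) >= 4 and cols[0].isdigit():
            pairs.append((cols[1], cols[3]))
    return pairs


def parse_with_esupar(text: str, model_name: str = MODEL_NAME) -> List[Tuple[str, str]]:
    """Parse text with esupar (downloads the model on first use)."""
    import esupar  # imported here so the helper above works without it

    nlp = esupar.load(model_name)
    doc = nlp(text)
    # Assumption: str(doc) is the CoNLL-U text shown by print(doc).
    return conllu_to_pairs(str(doc))
```

The CoNLL-U text also carries the head index and dependency relation for each word, so the same parsing approach can be extended to read the dependency tree.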
