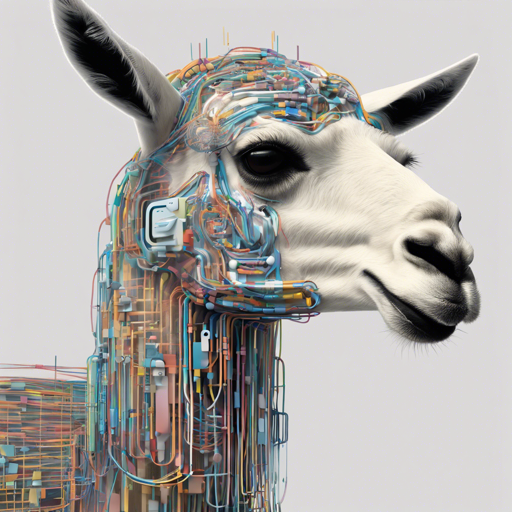

Welcome to the wonderful world of image-to-text models! Today, we’re diving deep into an experimental model called Llama-3-EvoVLM-JP-v2. This general-purpose Japanese Vision-Language Model (VLM) takes interleaved text and images as input and generates text responses. This guide walks you through installing the dependencies, loading the model, and holding single-turn and multi-turn conversations with it. Let’s roll up our sleeves and get started!

What is Llama-3-EvoVLM-JP-v2?

Llama-3-EvoVLM-JP-v2 is a fascinating model developed by Sakana AI. Think of it as a conversation partner that speaks fluent Japanese and can reason over both text and images to answer your questions. It was created with Sakana AI’s Evolutionary Model Merge method, which uses evolutionary search to combine components of several existing models into a new one. For a deeper understanding, refer to the original paper; Sakana AI’s blog has more details.
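To make the idea of merging a little more concrete, here is a deliberately tiny sketch of parameter-space merging driven by an evolutionary-style search. It is not Sakana AI’s implementation: the "models" are plain lists of numbers, the merge is a linear interpolation controlled by a single weight, and the fitness function is a hypothetical stand-in for a benchmark score. The names `merge`, `evolve_merge_weight`, and `closeness_to_target` are illustrative only.

```python
import random
from typing import Callable, List, Tuple

Params = List[float]


def merge(params_a: Params, params_b: Params, w: float) -> Params:
    """Linearly interpolate two parameter vectors: w * a + (1 - w) * b."""
    return [w * a + (1.0 - w) * b for a, b in zip(params_a, params_b)]


def evolve_merge_weight(
    params_a: Params,
    params_b: Params,
    fitness: Callable[[Params], float],
    iterations: int = 400,
    sigma: float = 0.1,
    seed: int = 0,
) -> Tuple[float, float]:
    """Toy (1+1) evolution strategy searching one merge weight in [0, 1]."""
    rng = random.Random(seed)
    best_w = 0.5
    best_score = fitness(merge(params_a, params_b, best_w))
    for _ in range(iterations):
        # Mutate the current best weight with Gaussian noise, clipped to [0, 1]
        candidate = min(1.0, max(0.0, best_w + rng.gauss(0.0, sigma)))
        score = fitness(merge(params_a, params_b, candidate))
        # Keep the mutation only if the merged "model" scores higher
        if score > best_score:
            best_w, best_score = candidate, score
    return best_w, best_score


# Hypothetical fitness: negative squared distance to a target vector
TARGET = [0.3, 0.3, 0.3]


def closeness_to_target(params: Params) -> float:
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))


w, score = evolve_merge_weight([1.0, 1.0, 1.0], [0.0, 0.0, 0.0], closeness_to_target)
print(f"best merge weight: {w:.3f}")  # close to 0.3
```

The actual Evolutionary Model Merge method is considerably more elaborate (see the original paper), but the basic loop of mutating a merge recipe, evaluating the merged model, and keeping the better candidate is the intuition behind it.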

Setting Up the Model

To set this model in motion, follow these simplified steps:

Step 1: Install Necessary Packages

First, install Mantis, the library that provides the inference code used with this model:

```bash
pip install git+https://github.com/TIGER-AI-Lab/Mantis.git
```
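Before moving on, you can quickly confirm that the packages used in this tutorial are importable. This is a small helper of our own (the name `check_packages` is illustrative); it relies only on the standard library’s `importlib.util.find_spec`, which returns `None` when a top-level module cannot be found.

```python
import importlib.util
from typing import Dict, Iterable


def check_packages(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be found for import."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# Module names used in this tutorial (PIL is provided by the Pillow package)
status = check_packages(["mantis", "torch", "transformers", "PIL", "requests"])
for name, ok in status.items():
    print(f"{name:<12} {'OK' if ok else 'MISSING'}")
```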

Step 2: Import Required Libraries

Next, you need to import several libraries to help with image processing and model interactions:

```python
import requests        # download images from URLs
from PIL import Image  # open and convert images

import torch
from mantis.models.conversation import Conversation, SeparatorStyle
from mantis.models.mllava import chat_mllava, LlavaForConditionalGeneration, MLlavaProcessor
from mantis.models.mllava.utils import conv_templates
from transformers import AutoTokenizer
```

Step 3: Setting up the Model

Just like a chef preparing the ingredients before cooking, we first define a Llama 3 conversation template with a Japanese system prompt, then load the processor and the model:

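```python
# Llama 3 conversation template with a Japanese system prompt:
# "You are a sincere and excellent Japanese assistant.
#  Unless otherwise instructed, always answer in Japanese."
conv_llama_3_elyza = Conversation(
    system='<|start_header_id|>system<|end_header_id|>\n\nあなたは誠実で優秀な日本人のアシスタントです。特に指示が無い場合は、常に日本語で回答してください。',
    roles=('user', 'assistant'),
    messages=(),
    offset=0,
    sep_style=SeparatorStyle.LLAMA_3,
    sep='<|eot_id|>',
)
# Register the template under the 'llama_3' key so chat_mllava picks it up
conv_templates['llama_3'] = conv_llama_3_elyza

# Use the GPU when available
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model_id = 'SakanaAI/Llama-3-EvoVLM-JP-v2'

# The processor (tokenizer + image preprocessing) comes from the Mantis base model
processor = MLlavaProcessor.from_pretrained('TIGER-Lab/Mantis-8B-siglip-llama3')
processor.tokenizer.pad_token = processor.tokenizer.eos_token

# Load the merged weights in half precision and switch to inference mode
model = LlavaForConditionalGeneration.from_pretrained(
    model_id, torch_dtype=torch.float16, device_map=device
).eval()
```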
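The conversation template stores the pieces of a Llama 3 style prompt: a system header, the `user`/`assistant` roles, and `<|eot_id|>` as the end-of-turn separator. The sketch below shows, in plain Python, roughly how such pieces combine into one prompt string under the standard Llama 3 chat format. It is an assumption-based illustration, not Mantis’s actual `SeparatorStyle.LLAMA_3` code; the helpers `header` and `build_llama3_prompt` are hypothetical, and any beginning-of-text token is omitted.

```python
from typing import List, Optional, Tuple

EOT = "<|eot_id|>"


def header(role: str) -> str:
    """Llama 3 role header, followed by a blank line."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama3_prompt(system: str, turns: List[Tuple[str, Optional[str]]]) -> str:
    """Join a system prompt and (role, message) turns in Llama 3 chat style.

    A turn whose message is None is left open so generation continues from it.
    """
    parts = [header("system") + system + EOT]
    for role, message in turns:
        if message is None:
            parts.append(header(role))  # open header: the model writes this turn
        else:
            parts.append(header(role) + message + EOT)
    return "".join(parts)


prompt = build_llama3_prompt(
    "あなたは誠実で優秀な日本人のアシスタントです。特に指示が無い場合は、常に日本語で回答してください。",
    [("user", "<image>の信号は何色ですか?"), ("assistant", None)],
)
print(prompt)
```

The open `assistant` header at the end is what cues the model to write its reply, and each completed turn is closed with `<|eot_id|>`.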

Step 4: Configuring Generation

Next, set the decoding options used to generate responses:

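```python
generation_kwargs = {
    'max_new_tokens': 128,      # cap the length of each reply
    'num_beams': 1,             # no beam search...
    'do_sample': False,         # ...and no sampling, i.e. greedy decoding
    'no_repeat_ngram_size': 3,  # never repeat any 3-token sequence
}
```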
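With `num_beams=1` and `do_sample=False`, generation is greedy: at each step the highest-scoring token is chosen. `no_repeat_ngram_size=3` adds the constraint that no 3-token sequence may occur twice. The sketch below re-implements that rule on toy integer token IDs to show the idea; it is our own illustrative code meant to mirror the behavior of the transformers option, and the names `banned_next_tokens` and `greedy_step` are hypothetical.

```python
from typing import Dict, List, Set


def banned_next_tokens(tokens: List[int], n: int) -> Set[int]:
    """Tokens that would complete an n-gram already present in `tokens`."""
    if n <= 0 or len(tokens) + 1 < n:
        return set()  # too little context to form a full n-gram
    prefix = tuple(tokens[len(tokens) - n + 1:])  # the last n - 1 tokens
    banned = set()
    for i in range(len(tokens) - n + 1):
        # Wherever the same (n - 1)-token prefix occurred before,
        # the token that followed it may not be generated again.
        if tuple(tokens[i:i + n - 1]) == prefix:
            banned.add(tokens[i + n - 1])
    return banned


def greedy_step(scores: Dict[int, float], tokens: List[int], n: int) -> int:
    """Pick the highest-scoring allowed token (ValueError if all are banned)."""
    banned = banned_next_tokens(tokens, n)
    allowed = {tok: s for tok, s in scores.items() if tok not in banned}
    return max(allowed, key=allowed.get)


generated = [5, 7, 9, 5, 7]
print(banned_next_tokens(generated, 3))  # {9}: "5 7 9" already occurred
print(greedy_step({9: 2.0, 4: 1.5}, generated, 3))  # 4, because 9 is banned
```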

Step 5: Generate Responses

Now let’s have a conversation with the model!

```python
# Prompt: "What color is the traffic light in <image>?"
text = '<image>の信号は何色ですか?'

url_list = [
    'https://images.unsplash.com/photo-1694831404826-3400c48c188d?q=80&w=2070&auto=format&fit=crop&ixlib=rb-4.0.3&ixid=M3wxMjA3fDB8MHxwaG90by1wYWdlfHx8fGVufDB8fHx8fA%3D%3D',
    'https://images.unsplash.com/photo-1693240876439-473af88b4ed7?q=80&w=1974&auto=format&fit=crop&ixlib=rb-4.0.3&ixid=M3wxMjA3fDB8MHxwaG90by1wYWdlfHx8fGVufDB8fHx8fA%3D%3D',
]

# Pass the images as a list, one entry per <image> tag
images = [Image.open(requests.get(url_list[0], stream=True).raw).convert('RGB')]

response, history = chat_mllava(text, images, model, processor, **generation_kwargs)

print(response)  # 信号の色は、青色です。 ("The light is blue," i.e. a green light)
```
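The example downloads its images from Unsplash at run time, so a single broken link stops it. One way to make this more robust is to try several sources in order and keep the first one that loads. The helper `load_first_available` below is a hypothetical sketch: it takes the loading function as a parameter, so in practice you could pass something like `lambda u: Image.open(requests.get(u, stream=True, timeout=30).raw).convert('RGB')`. Here it is demonstrated with a fake loader so it runs without network access.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def load_first_available(
    sources: Iterable[str], load: Callable[[str], T]
) -> Tuple[str, T]:
    """Return (source, result) for the first source that `load` handles without error."""
    errors: List[str] = []
    for source in sources:
        try:
            return source, load(source)
        except Exception as exc:  # e.g. network errors or unreadable image data
            errors.append(f"{source}: {exc!r}")
    raise RuntimeError("no source could be loaded:\n" + "\n".join(errors))


# Demo with a fake loader that fails on the first "URL"
def fake_load(url: str) -> str:
    if "broken" in url:
        raise OSError("404 Not Found")
    return f"image from {url}"


source, image = load_first_available(
    ["https://example.com/broken.jpg", "https://example.com/ok.jpg"], fake_load
)
print(source)  # https://example.com/ok.jpg
```

With a real loader, you would then pass `[image]` as the `images` argument of `chat_mllava`. Catching every exception keeps the sketch short; in your own code you may prefer to catch only the network and image-decoding errors you expect.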

Step 6: Engaging in Multi-Turn Conversations

Continue the dialogue one image at a time by appending the next image and passing the returned history back in:

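```python
# Follow-up prompt: "Then, what about the traffic light in <image>?"
text = 'では、<image>の信号は?'

# Append the second image; the list now holds one image per <image> tag in the conversation
images += [Image.open(requests.get(url_list[1], stream=True).raw).convert('RGB')]

# Pass the previous history so the model keeps the context of the first turn
response, history = chat_mllava(text, images, model, processor, history=history, **generation_kwargs)

print(response)  # 赤色 ("Red")
```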
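In this example, every `<image>` tag in the conversation, across all turns, is paired with one entry in `images`, in order: one tag and one image after the first turn, two of each after the second. A hypothetical pre-flight check such as `check_image_count` can catch a forgotten image before calling `chat_mllava`. It only counts tags in plain strings and assumes this one-tag-one-image pairing; it does not inspect Mantis internals.

```python
from typing import Sequence

IMAGE_TAG = "<image>"


def check_image_count(turn_texts: Sequence[str], images: Sequence[object]) -> int:
    """Return the number of <image> tags, raising ValueError if it differs from len(images)."""
    n_tags = sum(text.count(IMAGE_TAG) for text in turn_texts)
    if n_tags != len(images):
        raise ValueError(
            f"{n_tags} {IMAGE_TAG} tag(s) in the conversation but {len(images)} image(s) supplied"
        )
    return n_tags


# User prompts from both turns of the example, with placeholder objects for the images
turns = ["<image>の信号は何色ですか?", "では、<image>の信号は?"]
check_image_count(turns, ["first image", "second image"])  # passes

try:
    check_image_count(turns, ["first image"])
except ValueError as err:
    print(err)  # 2 <image> tag(s) in the conversation but 1 image(s) supplied
```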

Troubleshooting Ideas

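- Installation Errors: Double-check the installation command and make sure you have the necessary permissions. Because the package is installed directly from GitHub, git must also be installed on your system. Running the command inside a virtual environment often resolves these issues.

- Model Not Loading: Make sure your PyTorch version is compatible with your transformers and Mantis installation, and that your hardware has enough memory: for a model of roughly 8 billion parameters, the float16 weights alone take about 16 GB. If the GPU runs out of memory, switching from 'cuda' to 'cpu' can get the model to load, although inference will be much slower.

- Image Loading Issues: Make sure the URLs are correct and reachable from your machine. If an image doesn’t load, try a different link or open a local file with Image.open instead.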
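For the "Model Not Loading" case, a back-of-the-envelope estimate of the weight memory helps decide whether the model fits on your GPU. The sketch below simply multiplies the parameter count by the bytes per parameter of the chosen dtype. The figure of about 8 billion parameters is an assumption based on the model’s Llama-3-8B lineage, and activations and framework overhead come on top, so treat the result as a lower bound. The helper name `weight_memory_gb` is illustrative.

```python
# Bytes used to store one parameter in each dtype
BYTES_PER_PARAM = {
    "float32": 4,
    "float16": 2,
    "bfloat16": 2,
}


def weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: roughly 8 billion parameters
for dtype in ("float32", "float16"):
    print(f"{dtype}: ~{weight_memory_gb(8e9, dtype):.0f} GB")
```

This is also why the tutorial loads the model with `torch_dtype=torch.float16`: it halves the weight memory compared with float32.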

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

In Conclusion

At fxis.ai, we believe that advancements such as Llama-3-EvoVLM-JP-v2 are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.