In the era of information overload, text summarization has emerged as an essential tool for distilling crucial insights from vast amounts of text. This guide walks you through implementing a Transformer model for abstractive text summarization, based on the architecture introduced in the seminal paper "Attention Is All You Need" by Vaswani et al. (2017). Ready to enhance your understanding of this technology? Let's dive in!

What is Transformer-based Abstractive Summarization?

Abstractive summarization is all about generating concise summaries that capture the underlying meaning of the original text in new wording, rather than merely extracting sentences verbatim. Transformers, especially the architecture introduced by Vaswani et al., have revolutionized this field with their ability to capture long-range dependencies: self-attention lets every word attend directly to every other word, instead of passing information step by step as recurrent networks do!

Key Components Required

- Inshorts Dataset: A collection of short news articles paired with headlines, which can serve as target summaries when training your model. You can find it on Kaggle.

- Transformer Model: The backbone of your summarization pipeline. Its encoder reads the article and its decoder writes the summary, and both use self-attention to weigh how relevant every other word in the sequence is to each word.
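To make "weighing the significance of words" concrete, here is a minimal sketch of scaled dot-product self-attention, softmax(QK^T / sqrt(d_k))V, in plain Python with no framework. It is a single-head illustration under simplifying assumptions: the toy embeddings are made up, and the learned query/key/value projection matrices, multiple heads, and masking that a real Transformer layer uses are left out.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                       # similarity of every pair of tokens
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]             # each row sums to 1
    return matmul(weights, v), weights                     # weighted mix of value vectors


# Toy example: 3 tokens with 2-dimensional embeddings. In self-attention,
# Q, K and V all come from the same sequence; here the embeddings are
# reused directly instead of passing through learned projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every weight is positive and each row sums to 1, so each token's output is a blend of all tokens, dominated by the ones most similar to it.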

Implementation Steps

Here’s a high-level overview of the steps you’ll take to implement the Transformer-based summarization model:

- Prepare your data: Clean and tokenize the Inshorts dataset, and wrap each target summary in start and end markers so the decoder learns where a summary begins and ends.

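- Configure your Transformer model: Choose hyperparameters such as the number of layers, the number of attention heads, and the embedding size. The embedding size must be divisible by the number of heads, because multi-head attention splits it evenly across them.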

- Train the model: Utilize libraries like TensorFlow or PyTorch for building and training your model.

- Generate summaries: Once the model is trained, feed it new articles and let the decoder produce a summary one token at a time.
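The first two steps can be sketched in plain Python as follows. This is a minimal illustration rather than a complete pipeline: the cleaning rules, the `<start>`/`<end>` marker names, the `clean`/`build_vocab`/`encode` helpers, and the `TransformerConfig` class with its default values are all hypothetical choices for this example, and the sample article is made up rather than taken from Inshorts. For comparison, the base model in Vaswani et al. used 6 layers, a model size of 512, and 8 heads.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical special tokens; ids 0-3 are reserved for them in build_vocab.
PAD, UNK, START, END = "<pad>", "<unk>", "<start>", "<end>"


def clean(text: str) -> str:
    """Lowercase, replace anything but letters, digits and apostrophes with spaces, collapse whitespace."""
    text = re.sub(r"[^a-z0-9' ]+", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def build_vocab(texts: List[str], min_count: int = 1) -> Dict[str, int]:
    """Map each word to an integer id, most frequent words first."""
    counts = Counter(word for t in texts for word in t.split())
    vocab = {PAD: 0, UNK: 1, START: 2, END: 3}
    for word, n in counts.most_common():
        if n >= min_count and word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Convert words to ids, truncate to max_len, and right-pad with PAD."""
    ids = [vocab.get(w, vocab[UNK]) for w in text.split()][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))


@dataclass
class TransformerConfig:
    # Hypothetical starting values; tune them for your data and hardware.
    num_layers: int = 4
    d_model: int = 128      # embedding size
    num_heads: int = 8
    dff: int = 512          # inner size of the feed-forward sublayer
    dropout: float = 0.1

    def __post_init__(self):
        # Multi-head attention splits d_model evenly across the heads.
        if self.d_model % self.num_heads != 0:
            raise ValueError("d_model must be divisible by num_heads")


articles = ["Scientists discover a new species of frog in the Amazon rainforest!"]
summaries = ["New frog species found in Amazon"]

clean_articles = [clean(a) for a in articles]
# Wrap targets so the decoder learns where a summary starts and stops.
clean_summaries = [f"{START} {clean(s)} {END}" for s in summaries]

vocab = build_vocab(clean_articles + clean_summaries)
x = [encode(a, vocab, max_len=16) for a in clean_articles]
y = [encode(s, vocab, max_len=10) for s in clean_summaries]
config = TransformerConfig()
print(y[0])
```

A real project would typically swap these helpers for the tokenizer utilities of TensorFlow or PyTorch and would also mask the padding positions so attention ignores them.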

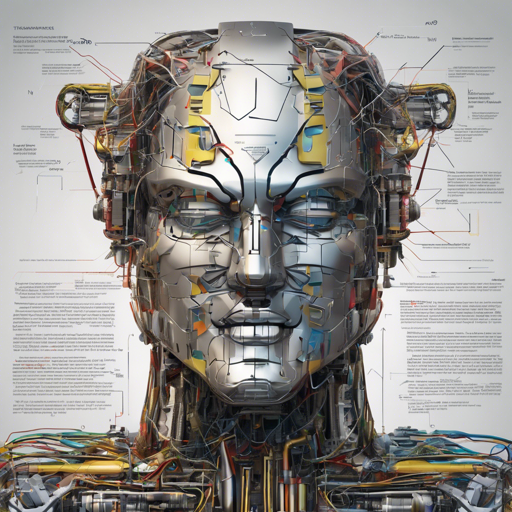

A Closer Look: Understanding the Transformer Model

The Transformer architecture can be likened to an expert chef preparing a gourmet dish. The ingredients (words) are collected, but each one must be judged in light of the others (context). The self-attention mechanism lets the chef weigh every ingredient against every other one and focus on those that add the most zest to the dish. The most relevant flavors are emphasized, while less significant ones are downplayed: they receive small attention weights, but they are never discarded entirely. Through this process, a well-structured, delicious summary emerges, just like a finely balanced dish!

Troubleshooting Common Issues

As with any implementation, you might run into challenges along the way. Here are some troubleshooting tips:

- Issue: Model not converging during training.

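- Solution: Double-check your data preprocessing for inconsistencies, such as misaligned article/summary pairs or missing start and end markers. Lowering the learning rate or using a learning-rate warmup, as Vaswani et al. did, can also help.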

- Issue: Generated summaries are too long or incoherent.

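- Solution: Cap the maximum output length during decoding, and experiment with hyperparameters such as model size, dropout, and the number of training epochs. Beam search often produces more coherent output than greedy decoding.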

- Issue: Resource limitations impacting training.

- Solution: Consider cloud GPUs, or reduce memory use by lowering the batch size or the maximum input length.
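For summaries that run too long, one simple safeguard is to cap the number of generated tokens and stop as soon as the model emits the end marker. The sketch below shows greedy decoding under that cap. `greedy_decode`, the special-token ids, and the two toy scoring functions are hypothetical stand-ins; with a trained model, `next_token_scores` would run the decoder on the source and the current prefix and return a score for every vocabulary token.

```python
from typing import Callable, List, Sequence

START_ID, END_ID = 2, 3  # hypothetical ids for the <start> and <end> markers


def greedy_decode(
    source_ids: Sequence[int],
    next_token_scores: Callable[[Sequence[int], List[int]], List[float]],
    max_len: int = 20,
) -> List[int]:
    """Pick the highest-scoring token at each step until <end> or max_len tokens."""
    output = [START_ID]
    for _ in range(max_len):               # hard cap on summary length
        scores = next_token_scores(source_ids, output)
        best = max(range(len(scores)), key=scores.__getitem__)
        if best == END_ID:                 # the model decided the summary is finished
            break
        output.append(best)
    return output[1:]                      # drop the <start> marker


def never_stops(source_ids, prefix):
    # Toy scorer that always prefers token 5, so only max_len ends generation.
    scores = [0.0] * 10
    scores[5] = 1.0
    return scores


def copy_then_stop(source_ids, prefix):
    # Toy scorer that copies the source token by token, then predicts <end>.
    scores = [0.0] * 10
    step = len(prefix) - 1
    scores[source_ids[step] if step < len(source_ids) else END_ID] = 1.0
    return scores


print(greedy_decode([7, 8, 9], never_stops, max_len=4))  # [5, 5, 5, 5]
print(greedy_decode([7, 8, 9], copy_then_stop))          # [7, 8, 9]
```

Beam search follows the same loop structure but keeps several candidate prefixes per step instead of only the single best token.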

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.


Final Thoughts

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Conclusion

Implementing an abstractive summarization model using the Transformer architecture opens up new avenues for understanding and processing information. With patience and practice, you can master the skill of summarization and contribute significantly to the field. Happy coding!