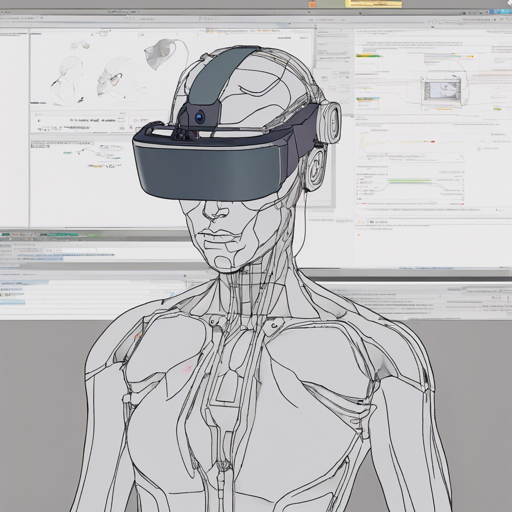

OpenVHead is an ongoing project for VTuber animation: a vision-based head motion capture system that makes virtual characters move more realistically. This article walks through the setup process, usage, and some troubleshooting tips.

1. Introduction

OpenVHead is designed to capture head motion and facial expressions, enhancing the animation of VTubers. Using a combination of C# and Python within a Unity3D environment, this project employs various filters and control methods to ensure smooth and robust performance. If you are eager to try it out and even contribute to its development, you’re encouraged to do so!
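To make the idea of filtering concrete, here is a minimal sketch of one common smoothing technique, an exponential moving average. It is an illustration only: the article does not say which filters OpenVHead uses, and the class name `ExponentialSmoother` and the parameter `alpha` are hypothetical.

```python
class ExponentialSmoother:
    """First-order low-pass filter for a noisy scalar signal (e.g., a head angle).

    Illustrative only; not taken from the OpenVHead code base.
    """

    def __init__(self, alpha=0.5):
        # alpha close to 1 follows the raw signal; alpha close to 0 smooths heavily.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None

    def update(self, measurement):
        """Blend the new measurement into the running estimate and return it."""
        if self.state is None:
            # The first sample initializes the filter.
            self.state = float(measurement)
        else:
            self.state = self.alpha * measurement + (1.0 - self.alpha) * self.state
        return self.state


if __name__ == "__main__":
    noisy_yaw = [10.0, 12.0, 9.0, 30.0, 11.0]  # made-up samples with one outlier
    smoother = ExponentialSmoother(alpha=0.3)
    print([round(smoother.update(v), 2) for v in noisy_yaw])
```

A smaller `alpha` suppresses jitter at the cost of lag, which is the usual trade-off when smoothing motion capture signals.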

2. Prerequisites

2.1 Hardware

- PC

- RGB camera (can be a built-in webcam or an external USB camera)

2.2 Software

2.2.1 Environment

- Windows System

- Python 3.6.x

- Unity 2018.4.x

2.2.2 Package Dependencies

- opencv-python (tested with version 3.4.0)

- dlib (tested with version 19.7.0)
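Before opening the Unity project, it can help to confirm which versions are actually installed. The following is a small sketch, not part of OpenVHead; the helper names are hypothetical. It assumes the packages expose a `__version__` attribute, which `cv2` (the import name of opencv-python) and `dlib` do.

```python
import importlib
import sys

# Versions listed as tested in this article, keyed by import name.
TESTED = {"cv2": "3.4.0", "dlib": "19.7.0"}


def installed_version(module_name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def matches_tested(installed, tested):
    """True when the installed version begins with the tested version's components."""
    if installed is None:
        return False
    tested_parts = tested.split(".")
    return installed.split(".")[:len(tested_parts)] == tested_parts


def report():
    """Build one status line per dependency, plus the Python version."""
    lines = ["Python {}.{}".format(*sys.version_info[:2])]
    for name, tested in TESTED.items():
        found = installed_version(name)
        status = "ok" if matches_tested(found, tested) else "differs from tested version"
        lines.append("{}: found {}, tested {} ({})".format(name, found, tested, status))
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```

A version that differs from the tested one is not necessarily a problem, but it is worth noting when reporting an issue.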

3. Usage

3.1 Quick Start

To get started with OpenVHead, follow these steps:

- Configure your environment by installing the required packages using pip. The .whl files for opencv-python and dlib can be retrieved from here and here.

- Clone the OpenVHead repository to your workspace. You could also download the .zip file of the latest release.

- Open the folder in Unity as a project.

- Drag the MainScene from Assets – scenes to the Hierarchy and remove the Untitled scene.

- Press the Play button to run the project.

- Press the Start Thread button to initiate the C# socket server. This will enable communication between the Unity environment and the Python script.

- Once everything is set up, your virtual character comes to life! You can stop the program by pressing the Play button again; avoid using the Stop Thread button to prevent bugs.

3.2 Model Selection

The system supports two character models. To switch models, simply select the desired GameObject in the Scene hierarchy and use the toggle in the inspector to unhide it.

3.3 Adding New Models

If you’re interested in adding your own character model, ensure that it has blend shapes set up in a 3D modeling application. First, export it as an FBX file, then follow these steps:

- Import your FBX into Unity.

- Drag the imported model into the main scene.

- Copy and paste the ParameterServer and HeadController GameObjects from the existing models to your model.

- Add the Blend Shapes Controller script to control the shapes.

- Unhide your model and enjoy the customization!
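When wiring a new model's blend shapes, a measured facial metric usually has to be rescaled to a blend shape weight, which in Unity conventionally runs from 0 to 100. The sketch below shows that mapping in Python for illustration only. The function name and the calibration range are hypothetical, and OpenVHead's own Blend Shapes Controller script may do this differently.

```python
def to_blend_weight(value, low, high):
    """Linearly map value from [low, high] to [0, 100], clamping outside the range.

    low and high are calibration bounds for the metric (hypothetical values,
    e.g. mouth openness when closed and when fully open).
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    ratio = (value - low) / (high - low)
    return 100.0 * min(1.0, max(0.0, ratio))


if __name__ == "__main__":
    # Made-up mouth-openness readings against a calibration range of 0.0 to 1.0.
    for reading in (-0.2, 0.0, 0.5, 1.0, 1.4):
        print(reading, to_blend_weight(reading, 0.0, 1.0))
```

Clamping keeps a noisy or out-of-range reading from pushing the mesh past the shape the modeler authored.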

3.4 Enabling Green Screen Mode

To use your virtual character in other contexts (like live streaming), select the Main Camera GameObject, and set the Clear Flags option from Skybox to Solid Color with RGB value (0, 255, 0).

3.5 Debug Mode

A debug mode is available to visualize control parameters. Enable this by unhiding the RightData and LeftData child GameObjects of Canvas to see the live plotting of metric values.

4. Understanding the Code

Imagine you are a chef in a bustling kitchen. Your recipe involves multiple ingredients (data) coming from various suppliers (components). Each ingredient must be perfectly measured and blended to create a delicious dish (the virtual character’s motion). In our system, Python serves as the chef who prepares the ingredients, ensuring each piece of data is just right, while Unity acts as the kitchen where the dish is cooked. They communicate using a socket that resembles a delivery service making sure the ingredients arrive on time.
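In concrete terms, the "delivery service" is a TCP socket between the Python script and the C# socket server in Unity. The sketch below imitates that exchange entirely in Python so it can run on its own: a background thread stands in for the Unity server. The message format (space-separated floats ending in a newline), the use of localhost, and all function names are assumptions for illustration, not OpenVHead's actual protocol.

```python
import socket
import threading


def encode_parameters(values):
    """Pack floats into one space-separated ASCII line (hypothetical format)."""
    return (" ".join("{:.4f}".format(v) for v in values) + "\n").encode("ascii")


def decode_parameters(payload):
    """Inverse of encode_parameters: parse the floats back out of the line."""
    return [float(token) for token in payload.decode("ascii").split()]


def run_loopback_demo(values):
    """Send one parameter message over localhost TCP; return what the server decoded."""
    received = []
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    def serve_once():
        # Stand-in for the Unity side: accept one client and read one line.
        conn, _ = server.accept()
        with conn:
            data = b""
            while not data.endswith(b"\n"):
                chunk = conn.recv(1024)
                if not chunk:
                    break
                data += chunk
            received.extend(decode_parameters(data))

    worker = threading.Thread(target=serve_once)
    worker.start()
    # Stand-in for the Python side: connect and send the parameters.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(encode_parameters(values))
    worker.join()
    server.close()
    return received


if __name__ == "__main__":
    # Made-up parameters, e.g. three head angles and a mouth-openness value.
    print(run_loopback_demo([12.5, -3.0, 0.75, 0.2]))
```

Terminating each message with a newline gives the receiver an unambiguous boundary, since TCP delivers a byte stream rather than discrete messages.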

5. Known Issues

- Occasionally, the Python script may fail to terminate after you stop the project with the Play button. If this happens, press ESC in the Output window to stop the thread manually.

- Performance may vary with your hardware setup. If you encounter issues, please describe your hardware configuration and open an issue.

Troubleshooting Advice

If you run into problems, first check that your hardware and software versions match the prerequisites above, and make sure the correct package dependencies are installed. When debugging in Unity, also keep an eye on the output logs from the Python client.
