In the realm of Natural Language Processing (NLP), understanding text diversity is crucial for improving the quality of generated language. Today, we will delve into how to analyze text diversity metrics using the QCPG++ dataset paired with specific learning rates and...
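The excerpt does not say which diversity metrics the article covers. As an illustration, here is a minimal sketch of one widely used measure, distinct-n: the ratio of unique n-grams to total n-grams across a set of generated texts. The function name `distinct_n` and the naive whitespace tokenization are assumptions made for this example. It is not presented as the article's own method.

```python
from typing import Iterable, List


def distinct_n(texts: Iterable[str], n: int = 2) -> float:
    """Return the fraction of n-grams across `texts` that are unique.

    Hypothetical helper: tokenization is naive lowercased whitespace
    splitting (an assumption); a real pipeline would use a proper tokenizer.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    total = 0
    unique = set()
    for text in texts:
        tokens: List[str] = text.lower().split()
        # Slide a window of size n over the tokens and record each n-gram.
        for i in range(len(tokens) - n + 1):
            unique.add(tuple(tokens[i : i + n]))
            total += 1
    # Return 0.0 when no text is long enough to form a single n-gram.
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    paraphrases = [
        "the cat sat on the mat",
        "the cat sat on a rug",
        "a feline rested on the mat",
    ]
    print(f"distinct-1: {distinct_n(paraphrases, 1):.3f}")
    print(f"distinct-2: {distinct_n(paraphrases, 2):.3f}")
```

Higher values mean less repetition across the outputs. Because distinct-n only counts surface n-grams, it is usually reported together with semantic or reference-based metrics rather than used alone.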

Understanding the Quants of Qwen1.5 110B Chat

With the release of Qwen1.5 110B Chat, the AI community has taken note of the advancements in quantization, specifically regarding how much information can be stored in a model's weights. In this article, we'll delve into the intricacies of quants, their implications,...
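A quick way to see why quantization matters at this scale is to estimate how much memory the weights alone occupy at different bit widths. The sketch below assumes a round figure of exactly 110 billion parameters. It ignores activations, the KV cache, and the per-block scale and metadata overhead that real quantization formats add. Treat the results as lower bounds, not as the file sizes of any published quant. The helper name `weight_memory_gib` is hypothetical.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in GiB (2**30 bytes).

    Hypothetical helper: ignores quantization scales, metadata, and
    runtime memory such as activations and the KV cache.
    """
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 2**30


if __name__ == "__main__":
    params = 110e9  # assumed round figure for a "110B" model
    for bits in (16, 8, 4, 2):
        print(f"{bits:>2}-bit: {weight_memory_gib(params, bits):7.1f} GiB")
```

At 16 bits this comes to roughly 205 GiB. At 4 bits it drops to about 51 GiB. This is why lower-bit quants are what make a model of this size practical on more modest hardware, at the cost of storing less information per weight.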

How to Generate Instructions with T5 and PyTorch

In this blog post, we will explore how to use the T5 model from Hugging Face Transformers to generate paraphrased instructions dynamically. This powerful technique can be handy in situations like responding to user queries in applications. Let’s dive into the code and...

How to Configure Your Neural Network: A Step-by-Step Guide

In the ever-evolving world of artificial intelligence, fine-tuning your neural network is akin to adjusting the dials on a sophisticated machine. Each parameter plays a crucial role in ensuring that your model learns accurately. In this article, we will delve into how...

How to Train an Experimental LoRA Model Using Rogan as a Viking

In this article, we will take you through the process of training an experimental LoRA model that utilizes a unique and exciting dataset based on the image of Rogan styled as a Viking. This tutorial is designed to be user-friendly, guiding you step by step through the...

Unlocking the Power of MathBERT: A Guide to Using a Pre-trained Mathematical Language Model

Welcome to the fascinating world of MathBERT! This cutting-edge model is designed to understand the intricate language of mathematics, making it easier for developers and researchers to harness the power of AI for math-related tasks. In this blog, we'll explore how to...

How to Get Started with SpeechGPT: Empowering Conversations Across Modalities

Welcome to the future of conversational AI! SpeechGPT is a revolutionary large language model that effortlessly bridges the gap between text and spoken dialogue using its intrinsic cross-modal abilities. With SpeechGPT, you can interact in multiple ways—be it through...

How to Fine-Tune the CoMet-based OCR System Using TrOCR

Are you ready to step into the world of Optical Character Recognition (OCR) with the power of TrOCR (Transformer OCR)? In this guide, we will walk you through the process of setting up and fine-tuning a specificity-driven OCR model using the BEiT+RoBERTa architecture....

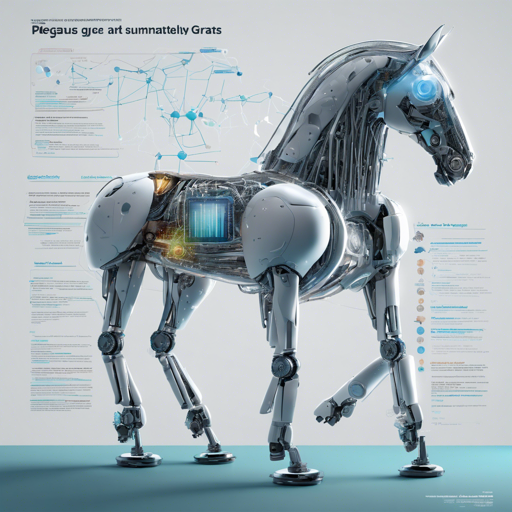

Pegasus for COVID-19 Literature Summarization: A Step-by-Step Guide

In the wake of the COVID-19 pandemic, researchers have flooded the field with scholarly articles, leading to an overwhelming amount of literature. Enter Pegasus—a natural language processing model finely tuned to effectively summarize COVID-19 literature. This guide...