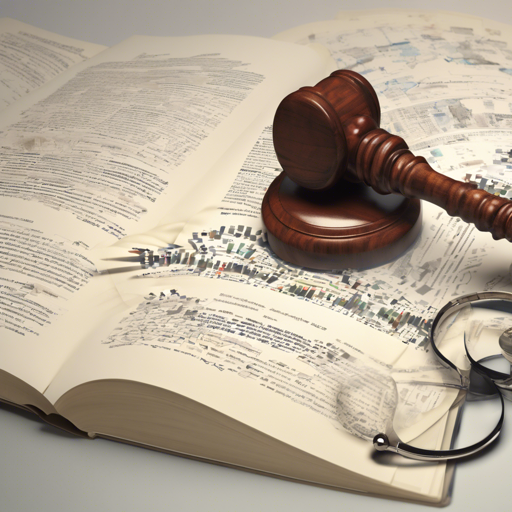

Welcome to our exploration of the groundbreaking work by Brugger, Stürmer, and Niklaus, presented in the paper titled "MultiLegalSBD: A Multilingual Legal Sentence Boundary Detection Dataset." In the realm of Natural Language Processing (NLP), the ability to...

BERT-Based Temporal Tagged Token Classifier Using German GELECTRA Model

In today’s tutorial, we will explore how to build a BERT-based temporal tagged token classifier using the German GELECTRA model. This innovative model allows us to tag specific tokens in plain text with temporal classifications, making it incredibly useful for various...

Exploring the BERT-mini Model Finetuned with M-FAC

In today's AI landscape, fine-tuning language models has become essential for achieving high performance on various tasks. One such model is BERT-mini, which has been fine-tuned using the M-FAC optimizer on the QQP (Quora Question Pairs) dataset. This article...

How to Fine-Tune the ALBERT Model for Sequence Classification Using TextAttack

In the realm of Natural Language Processing (NLP), model fine-tuning is akin to training a young athlete to excel in their sport. Just as a coach tailors their training regimen to improve specific skills, we can customize pre-trained models to achieve higher accuracy...

How to Generate Poetry Lines Using Nextline: A Guide

In the world of artificial intelligence and natural language processing, creating poetry can be a fascinating challenge. Today, we'll explore how to use the Nextline tool, built upon the robust model, to generate lines of poetry based on...

How to Set Up a Transformer-VAE Model in PyTorch

Welcome to the world of advanced deep learning! Today, we'll guide you through the steps to set up a Transformer-VAE (Variational Autoencoder) model using PyTorch. This model not only utilizes transformers for enhanced capabilities but also incorporates an MMD...

How to Utilize RoBERT-base for Romanian Language Processing

In this article, we will walk through the process of using the RoBERT-base model, a powerful tool for natural language processing specifically tailored for the Romanian language. With its unique architecture and extensive training data, it is a fantastic choice for...

Understanding MultiLegalSBD: A Multilingual Legal Sentence Boundary Detection Dataset

In the realm of Natural Language Processing (NLP), the need for accurate Sentence Boundary Detection (SBD) cannot be overstated, especially in complex domains like law. With the advent of MultiLegalSBD, we now have a robust multilingual dataset designed...

How to Retrain the CLIP Model on a Subset of the DPC Dataset

The CLIP model, a powerful tool for understanding images and text, can be retrained to optimize its performance on specific datasets. In this guide, you will learn the first steps in retraining the CLIP model on a subset of the DPC dataset, making it...