Welcome to the future of artificial intelligence in database queries! In this article, we'll explore how to use a T5 model fine-tuned on the Spider text-to-SQL dataset, with the database schema serialized into the input so the model has more context about the tables it is querying. If you've ever wanted...

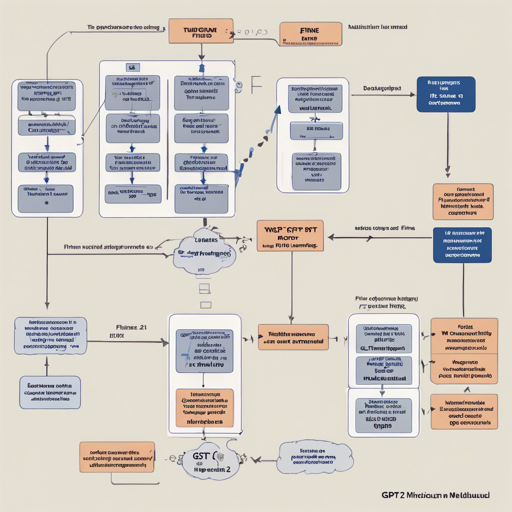

How to Fine-Tune the GPT-2 Medium Model on MultiWOZ21

Fine-tuning a pre-trained model adapts its general language knowledge to a specific task or domain. In this article, we'll walk you through fine-tuning the GPT-2 Medium model on the MultiWOZ21 dataset. If you've been curious about enhancing your natural language...

Multilingual Joint Fine-tuning for Identifying Trolling, Aggression, and Cyberbullying

In an age driven by online communication, understanding user behavior has become crucial. This guide will walk you through implementing multilingual joint fine-tuning of transformer models that identify various forms of online misconduct, such as trolling, aggression,...

Automatic Diacritics Restoration for the Yorùbá Language with mT5_base_yoruba_adr

Welcome to the world of natural language processing, where we explore models that can understand and improve human-written text. In this article, we will dive deep into mT5_base_yoruba_adr, a model for automatic diacritics...

How to Use the Pre-trained BERT Model for MNLI Tasks

If you are venturing into Natural Language Processing (NLP), you have probably heard of BERT (Bidirectional Encoder Representations from Transformers), a model that substantially improved performance across a wide range of NLP tasks. In this guide, we will...

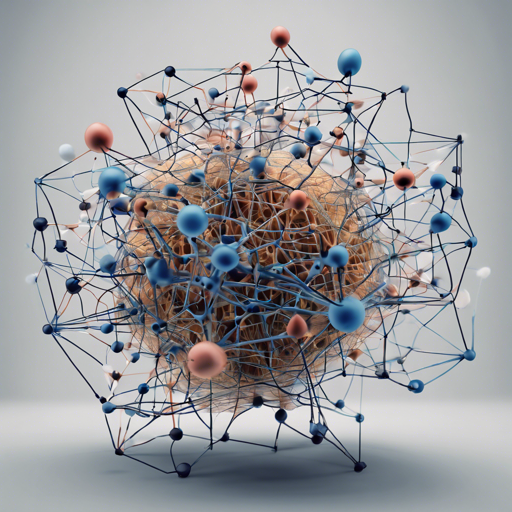

How to Train Your Own Random RoBERTa Mini Model

Welcome to the fascinating world of natural language processing! In this guide, we'll walk through how to use random-roberta-mini, a randomly initialized (not pre-trained) mini RoBERTa model that gives you a blank starting point for your own experiments. Understanding the Random...

How to Transform Informal Text into Formal Style Using AI

Language styles evolve constantly, and with the help of artificial intelligence, we can bridge the gap between informal and formal writing. This tutorial will guide you through using the `BigSalmon Informal to Formal Dataset` for text...

How to Implement RoBERTa-Large for Natural Language Inference (NLI)

Natural Language Inference (NLI) is a core task in Natural Language Processing (NLP): given a pair of sentences, a premise and a hypothesis, the model decides whether the premise entails the hypothesis, contradicts it, or is neutral toward it. If you're looking to dive deeper into this fascinating domain, you can utilize the...

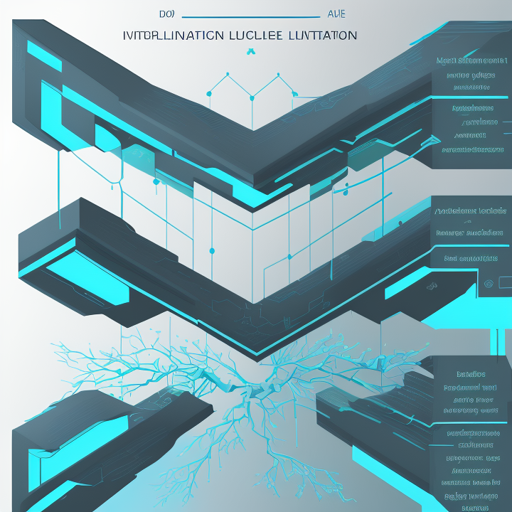

How to Access the BigCode Star Encoder Model

Welcome to your comprehensive guide on navigating the access restrictions of the BigCode Star Encoder model! This model holds immense potential for various AI applications, but unfortunately, access to it is limited to an authorized list of users. Understanding Access...