In AI and machine learning, particularly in natural language processing (NLP), cross-lingual capabilities are gaining traction. One notable model is CLSRIL-23 (Cross Lingual Speech Representations on Indic...

How to Utilize Pre-trained Models for the Khmer Language

In this article, we explore how to use pre-trained models designed specifically for the Khmer language. These models can improve a range of natural language processing (NLP) tasks for Khmer. Let’s dive into the details!...

Understanding the Fine-Tuning of a KlueRoberta Model

In natural language processing (NLP), fine-tuning a large pre-trained model is the standard way to get strong performance on a specific task. In this article, we walk you through fine-tuning the KlueRoberta model using some specific hyperparameters...

How to Utilize Pretrained Models for the Khmer Language

In artificial intelligence and natural language processing, pretrained models are a strong starting point for many applications. If you're working on Khmer language processing, you're in luck! The pretrained models created by the...

How to Utilize T2I-Adapter for Enhanced Text-to-Image Generation

Welcome to our guide on T2I-Adapter! This adapter works alongside text-to-image diffusion models to give you more control over the image generation process. Let’s dive into how to implement this model effectively, troubleshoot common...

How to Fine-Tune Sparse BERT Models for SQuADv1

Natural language processing has changed rapidly since the arrival of transformer-based models like BERT. In this article, we explore how to fine-tune a set of unstructured sparse BERT-base-uncased models for the SQuADv1...

How to Request Access to the CohereForAI/c4ai-command-r-plus-4bit Model

If you need access to the CohereForAI/c4ai-command-r-plus-4bit model, you've come to the right place! This article walks you through requesting access to this model so that you can start...

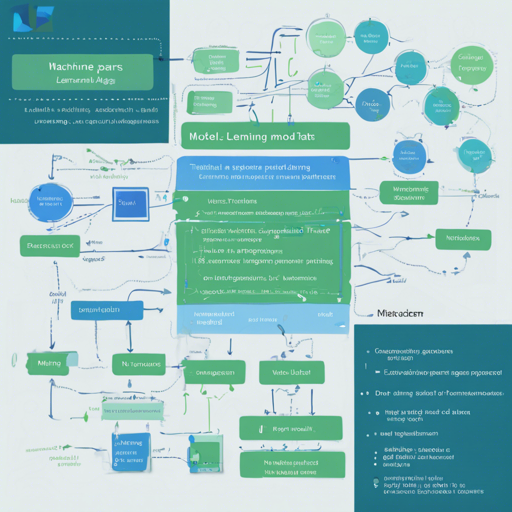

Understanding Naming Patterns in Model Training

This guide takes a close look at the naming patterns used in model training, specifically in the context of DistilBERT and the Microsoft Machine Reading Comprehension (MS MARCO) dataset. It covers the various naming conventions employed,...

Getting Started with RuCLIP: The Russian Contrastive Language-Image Pretraining Model

Welcome to this guide on RuCLIP, a model that combines language and images to measure image-text similarity and rearrange (rank) captions and pictures. Whether you're starting a project in computer vision or natural language processing, RuCLIP might just be what you...