In the realm of artificial intelligence, utilizing robust models is essential for delivering insightful and interactive experiences. This guide walks you through the process of employing the Yanolja/EEVE-Korean-Instruct-10.8B model, especially when it is quantized...

How to Use the GPT-2 Indonesia Model for Text Generation

In this blog post, we will explore how to utilize the GPT-2 Indonesia model for generating text in Indonesian. This powerful model allows you to create sentences from an initial prompt, showcasing the richness of the Indonesian language. Getting Started: Before we dive...

How to Utilize Useful Scripts for Confidence Scoring and Mass PR Updates

Welcome to a user-friendly guide on how to leverage some useful scripts contained in this repository. Whether you're looking to create confidence scores or streamline mass pull requests to update your model card, this article will provide comprehensive insights and...

Unlocking Behavior Change Conversations with Bert-Base-German-Cased

In the realm of artificial intelligence and natural language processing, understanding human behavior and communication is pivotal, especially in the context of health and lifestyle changes. This article will walk you through utilizing the bert-base-german-cased...

How to Run Megatron BERT Using Transformers

Are you ready to harness the power of large transformer models? Today, we will journey through how to run the Megatron BERT model, developed by the brilliant minds at NVIDIA. This model, packed with 345 million parameters, is efficient for various NLP tasks. Let's...

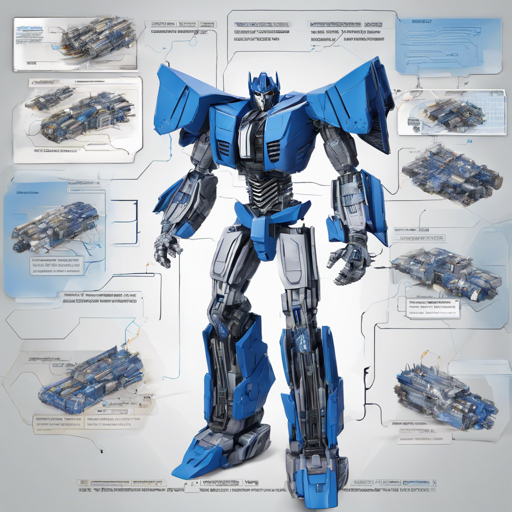

How to Use Graphcore’s RoBERTa-Large IPU Model with Optimum

Graphcore's new open-source library and toolkit offers developers a powerful way to harness IPU-optimized models certified by Hugging Face. This blog will walk you through using the Graphcore/roberta-large-ipu model efficiently and address any hiccups you might...

How to Use the M2M100 Translation Model for English to Yorùbá

The world is becoming increasingly interconnected, and the ability to communicate across languages is more essential than ever. If you're looking to translate texts from English to Yorùbá, look no further than the **m2m100_418M-eng-yor-mt** model. This guide will walk...

How to Utilize the m2m100_418M Model for Yorùbá to English Translation

Welcome to the world of machine translation where technology bridges the gap between languages! In this article, we'll explore how to use the m2m100_418M model, a robust machine translation tool specifically designed to translate texts from the Yorùbá language to...

How to Use Pretrained Models for Khmer Language Processing

Welcome to your go-to guide on utilizing pretrained models designed specifically for Khmer language processing! These models can significantly enhance your natural language processing tasks, providing a strong foundation for various applications. What Are Pretrained...