In this article, we'll take a journey through the Kinyarwanda RoBERTa model, known as xlm-roberta-base-finetuned-kinyarwanda. We’ll dive into its capabilities, how to effectively use it, and how to troubleshoot any potential issues along the way. What is...

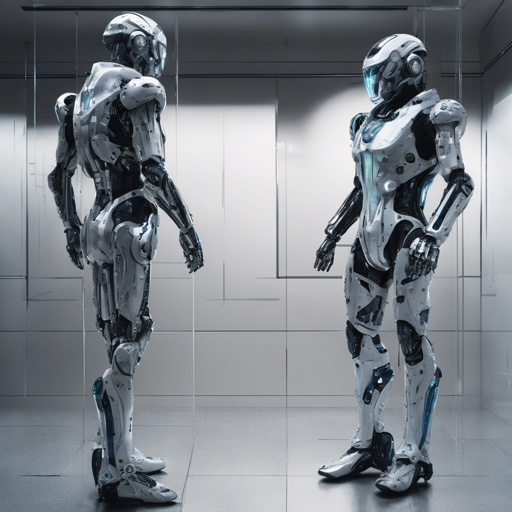

How to Utilize the Mech Cybersuit Embeddings for Stable Diffusion 2.0

Welcome to the future of image generation where machine learning meets captivating aesthetics! Today, we’re diving into how to leverage newly trained embeddings specifically built for Stable Diffusion 2.0, featuring mech cybersuits. Buckle up as we explore the power...

How to Optimize Your AI Models with Graphcore’s ViT on IPUs

In the world of artificial intelligence, optimizing models for speed and efficiency is paramount, especially when dealing with complex architectures like Vision Transformers (ViT). Graphcore's new open-source library, in conjunction with Hugging Face, enables...

Semi-Supervised Knowledge-Grounded Pre-training for Task-Oriented Dialog Systems

In the ever-evolving field of artificial intelligence, the development of effective dialog systems is crucial for a seamless interaction experience. In our latest endeavor, we present models crafted for Track 2 of the SereTOD 2022 challenge, focusing on building...

How to Use ByT5 Base Portuguese Product Reviews for Sentiment Analysis

Welcome to your guide to harnessing the ByT5 Base Portuguese model for sentiment analysis of product reviews! In this blog, we will walk through how to set up the model, use it, and troubleshoot common issues. Let’s get started!...

Mastering Multilingual Legal Sentence Boundary Detection with MultiLegalSBD

In the realm of Natural Language Processing (NLP), accuracy is paramount, especially when dealing with the intricacies of legal texts. An essential aspect of NLP is Sentence Boundary Detection (SBD), which acts as a critical gateway to deciphering and processing text...

How to Fine-Tune RoBERTa on Winograd Schema Challenge Data

In the world of AI and natural language processing, fine-tuning models like RoBERTa on specific datasets can lead to significant improvements in performance. This article will guide you through the fine-tuning process of the RoBERTa model on the Winograd Schema...

Generating Negative Movie Reviews with GPT-2: A User-Friendly Guide

Welcome to the exciting world of AI and natural language processing! In this blog post, we will explore how to set up a language model, specifically a GPT-2 model fine-tuned to produce negative movie reviews. This model utilizes the IMDB dataset, a popular collection...

Mastering Multilingual Joint Fine-Tuning of Transformer Models for Trolling Detection

Welcome to the world of Natural Language Processing (NLP)! In this article, we will walk you through the process of fine-tuning transformer models for identifying trolling, aggression, and cyberbullying across multiple languages, as demonstrated at the TRAC 2020...