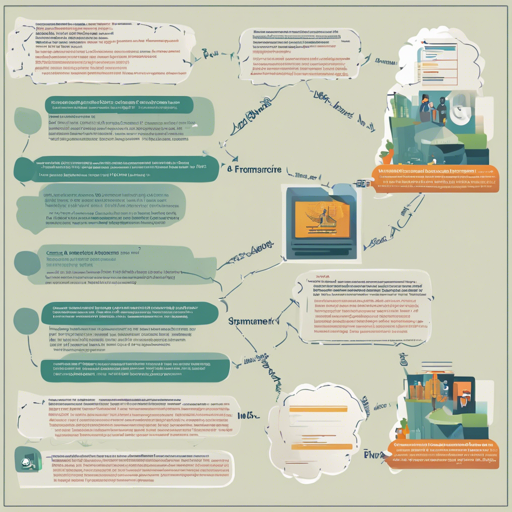

In the landscape of artificial intelligence, model fine-tuning is akin to teaching a student how to ace an exam after they've grasped the fundamentals. In this article, we will explore how to fine-tune the BART-base model specifically for the temporal definition...

How to Leverage AI for Creative Content Generation

In today's digital age, artificial intelligence (AI) plays an essential role in generating content that resonates with audiences. Utilizing AI tools like transformers can enhance your storytelling capabilities significantly. In this guide, we will explore how to...

How to Enhance Sentence Boundary Detection in Natural Language Processing

In Natural Language Processing (NLP), identifying sentences correctly is vital. This task, known as Sentence Boundary Detection (SBD), is a foundational step that significantly influences the quality of various...

Implementing RoBERTa on Hugging Face: A Comprehensive Guide

Are you ready to dive deep into the world of natural language processing with RoBERTa? This guide will walk you through implementing a state-of-the-art RoBERTa transformer model on the Hugging Face platform. Let’s unlock the door to advanced AI...

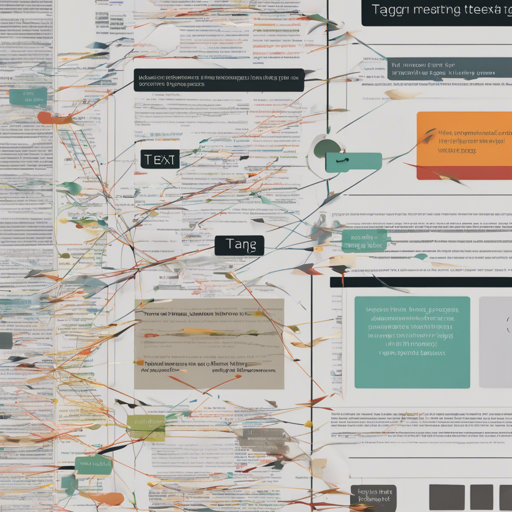

How to Use Transformers for Formalizing Informal English

If you've ever wanted to transform informal English into a more formal style, much like the eloquent words of Abraham Lincoln, you're in the right place. Here’s a guide to achieving this transformation using the Transformers library from Hugging Face. Getting Started To...

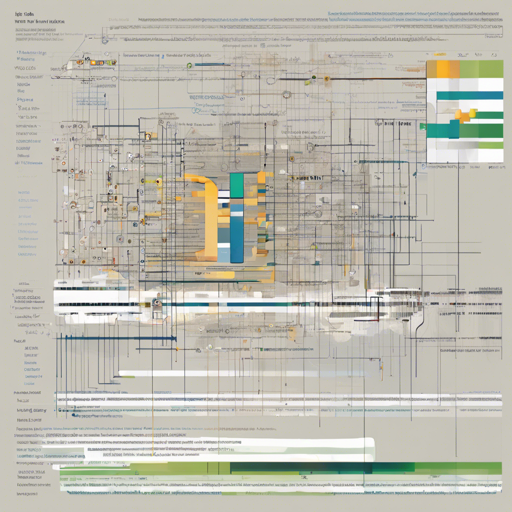

How to Utilize the BERT2BERT Temporal Tagger for Temporal Tagging of Text

In this article, we will explore how to employ the BERT2BERT temporal tagger, an innovative **Seq2Seq model** designed for temporal tagging of plain text using the BERT language model. This model is outlined in the paper "BERT got a Date: Introducing Transformers to...

Getting Started with MarkupLM for Question Answering

Welcome to your guide on using MarkupLM, an advanced model fine-tuned for Question Answering tasks! Derived from Microsoft’s MarkupLM and tuned specifically on a subset of the WebSRC dataset, this model aims to enhance your experience with visually rich document...

How to Fine-Tune BERT-Small on the CORD-19 QA Dataset

The BERT-Small model, when fine-tuned on the CORD-19 QA dataset, can effectively answer questions related to COVID-19 data. This blog post will guide you step by step through building and testing a fine-tuned BERT-Small model using the CORD-19 dataset. Understanding the...

How to Utilize the RDR Question Encoder Model

The RDR (Retriever-Distilled Reader) Question Encoder is a robust model that channels the strength of a reader while retaining the efficiency of a retriever. By leveraging knowledge distillation from its forebear, the DPR (Dense Passage Retrieval), the RDR boasts...