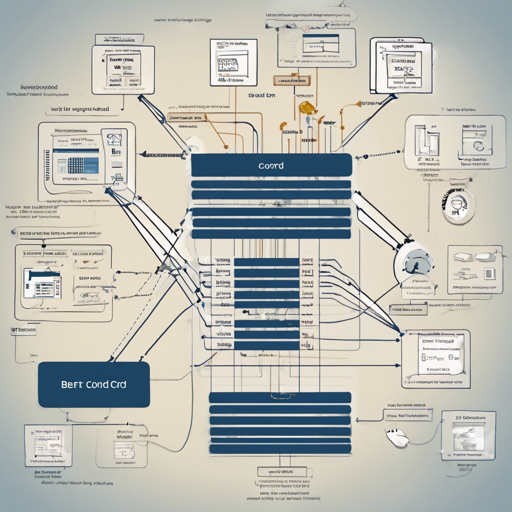

In the world of natural language processing (NLP), language models like BERT (Bidirectional Encoder Representations from Transformers) serve as powerful tools for a variety of applications. In this article, we will guide you through building a BERT-Small CORD-19 model...

How to Work with MS-BERT: A Guide

Welcome to your one-stop guide to navigating the intricacies of MS-BERT! This blog walks you through all the steps needed to use MS-BERT effectively. MS-BERT is a cutting-edge model pre-trained specifically to address the nuances of Multiple Sclerosis (MS)...

How to Utilize SPARTA for Efficient Open-Domain Question Answering

In the realm of artificial intelligence, question answering systems have become increasingly important. One such remarkable approach is the SPARTA model, designed to enhance open-domain question answering through sparse transformer matching retrieval. In this guide,...

How to Generate Educational Question Groups Using EQGG

Educational Question Group Generation (EQGG) is a cutting-edge tool designed to assist educators and learners by creating groups of questions based on specific topics or details. In this article, we will walk you through everything you need to know to get started with...

How to Use MiniCPM-MoE-8x2B: A Guide to Generative Language Modeling

Welcome to our exploration of the MiniCPM-MoE-8x2B, a powerful decoder-only transformer-based generative language model that employs a Mixture-of-Experts (MoE) architecture. This guide will walk you through using this cutting-edge model, ensuring that you can harness...

Fine-Tuning a CLOOB-Conditioned Latent Diffusion Model: A Step-by-Step Guide

Welcome to the world of advanced AI! In this article, we’ll delve into the intricate process of fine-tuning a CLOOB-conditioned latent diffusion model, a project that began during the exciting #huggan event. With a guide that breaks down the complexities, you’ll be up and...

Guide to Understanding Llama-3 8B Instruct: Measuring Performance

As AI models advance, understanding exactly how they perform matters more than ever. In this article, we'll dive deep into the Llama-3 8B Instruct model by examining different quantization levels, their implications, and how to troubleshoot any issues you...

How to Use BigSalmonGPTNeo1.3B for Language Transformation

In the ever-evolving world of artificial intelligence, fine-tuning language models to match desired styles can be a challenging yet rewarding venture. This article will walk you through using the BigSalmonGPTNeo1.3B model to transform informal English into formal...

Navigating Mental Health: A Comprehensive Guide

Mental health is a vital component of our overall well-being. In this blog, we will explore various factors that influence mental health, including anxiety, depression, self-care, resilience, and the importance of seeking help. Let's break them down into manageable...