iSTFTNet provides a powerful way to work with music data: its pre-trained models were specifically trained to handle audio at 22 kHz. In this guide, we'll explore how to use these pre-trained models, understand their structure, and troubleshoot any issues you may encounter....

How to Convert Fairseq Wav2Vec2 to Hugging Face Transformers

Converting a Fairseq Wav2Vec2 model to a Hugging Face Transformers model can seem daunting, but with the right guidance the process goes smoothly. Below, I'll break down the steps you need to follow, and...

How to Fine-tune a RoBERTa-base Model with TextAttack for Sequence Classification

Welcome to the world of Natural Language Processing (NLP)! In this article, we will explore how to fine-tune a RoBERTa-base model using TextAttack for sequence classification. We’ll also troubleshoot common issues you may encounter along the...

How to Create a Personalized Speech-to-Text Model

Understanding accents can be challenging for many speech-to-text models. If you've ever struggled with a system that just doesn’t get your voice right, you're not alone! In this article, we'll walk you through how to fine-tune an existing speech-to-text model using...

How to Use the random-albert-base-v2 Model for Your Language Processing Needs

In natural language processing, having the right tools can make all the difference. Today, we're diving into the random-albert-base-v2 model. This unpretrained version of the ALBERT model is specifically designed for scenarios where...

How to Use T5 for Paraphrasing Questions in Python

Welcome to the world of Natural Language Processing (NLP)! Today, we are going to delve into how to leverage the power of the T5 (Text-to-Text Transfer Transformer) model for paraphrasing questions. This article aims to guide you through the steps involved in setting...

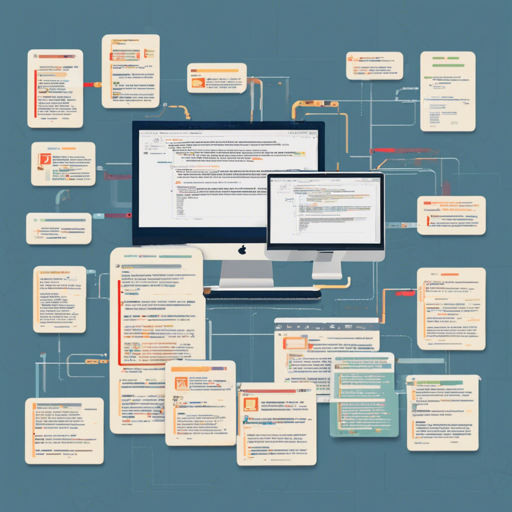

How to Run the RotoBART Script: A Step-by-Step Guide

RotoBART is a powerful tool designed to assist in various natural language processing tasks. In this article, we’ll break down how to run the RotoBART script effectively, cover its essential arguments, and provide troubleshooting tips to ensure a smooth...

Fine-Tuning the BART-Base Model for Temporal Definition Modelling: A How-To Guide

In the landscape of artificial intelligence, model fine-tuning is akin to teaching a student how to ace an exam after they've grasped the fundamentals. In this article, we will explore how to fine-tune the BART-base model specifically for the temporal definition...

How to Leverage AI for Creative Content Generation

In today's digital age, artificial intelligence (AI) plays an essential role in generating content that resonates with audiences. Utilizing AI tools like transformers can enhance your storytelling capabilities significantly. In this guide, we will explore how to...