In the intriguing world of AI and machine learning, the QCPG++ dataset presents a remarkable opportunity for researchers and developers alike. This blog post is designed to walk you through the various components of the QCPG++ dataset, providing a...

How to Use the k-Diffusion Latent Upscaler

In the world of image processing and AI, upscaling images while retaining high quality is a significant challenge. Fortunately, Katherine Crowson has made this easier for enthusiasts and developers alike by creating a temporary model repository for the...

How to Generate New Stories with MyModelNameBorges02

Welcome to the exciting world of AI-assisted storytelling! In this article, we will dive into using MyModelNameBorges02, a powerful model designed to generate new short stories inspired by the works of the renowned author Jorge Luis Borges. Whether you're a budding...

Unlocking DialogLED: A Comprehensive Guide to Long Dialogue Understanding and Summarization

Welcome to the world of DialogLED, a model designed specifically for the nuances of long dialogue understanding and summarization! With this model, working with lengthy conversations becomes far more practical. In this guide, we'll delve into the...

How to Preprocess Your Tweets for Tokenization

In the age of social media, understanding and processing tweets for various applications like sentiment analysis or trend detection is essential. One of the key steps in this process is text preprocessing, especially when using a tokenizer trained on specific...
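As a minimal sketch, assuming the tokenizer was trained on tweets in which user mentions were replaced by `@user` and links by `http` (a convention used by some Twitter-trained checkpoints, such as cardiffnlp's Twitter-RoBERTa models), preprocessing can be as simple as the function below. The name `preprocess_tweet` is hypothetical; adapt the placeholders to whatever your tokenizer actually saw during training.

```python
def preprocess_tweet(text: str) -> str:
    """Normalize a tweet before tokenization.

    Assumption: the target tokenizer was trained on tweets in which user
    mentions were replaced by '@user' and links by 'http'.
    """
    normalized = []
    for token in text.split(" "):
        # Replace any user mention (e.g. '@alice') with a generic placeholder;
        # a bare '@' is left unchanged.
        if token.startswith("@") and len(token) > 1:
            token = "@user"
        # Collapse every link to a single placeholder token.
        elif token.startswith("http"):
            token = "http"
        normalized.append(token)
    return " ".join(normalized)


print(preprocess_tweet("@alice loved this talk https://example.com/slides"))
# prints: @user loved this talk http
```

Splitting on single spaces keeps the original spacing intact, so only mention and link tokens change before the text reaches the tokenizer.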

How to Navigate and Utilize Profiles in the AFP Global Community

In our interconnected world, networking has never been more crucial. The AFP Global Community provides an excellent platform for professionals to connect, share knowledge, and strengthen their professional ties. In this guide, we will walk you through how to...

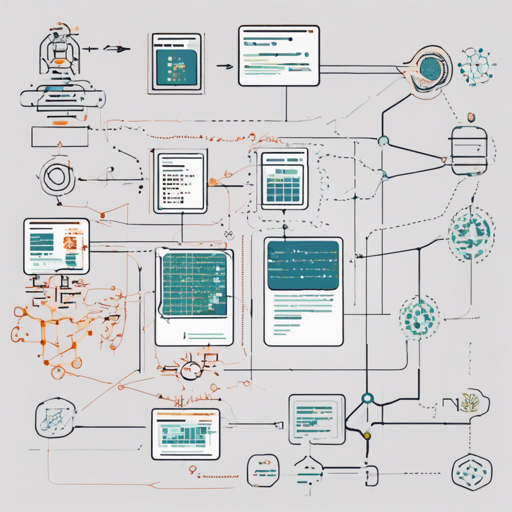

How to Use the Pretrained K-mHas Multi-label Model with koElectra-v3

In the age of artificial intelligence, leveraging pretrained models can significantly enhance your machine learning projects. This article will show you how to use the pretrained K-mHas multi-label model with koElectra-v3. With step-by-step...

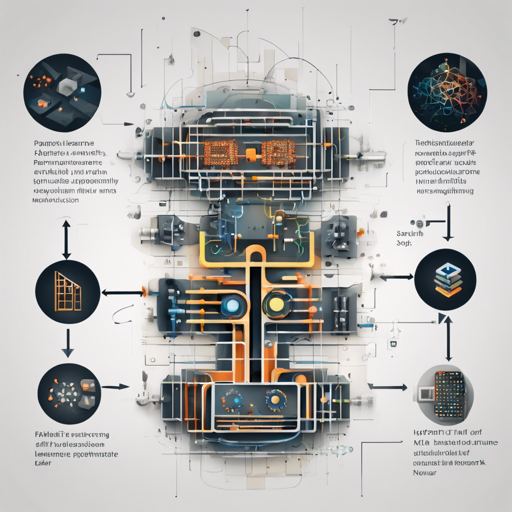

How to Fine-Tune the BERT-Mini Model with M-FAC Optimization

The BERT-mini model is a lightweight version of BERT that can efficiently handle tasks like natural language inference. In this article, we will guide you through the process of fine-tuning the BERT-mini model using the state-of-the-art second-order optimizer M-FAC,...

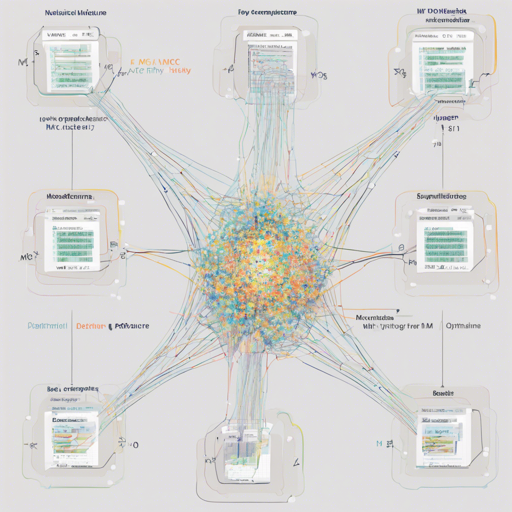

How to Fine-Tune BERT-Tiny with the M-FAC Optimizer

In this post, we will explore the step-by-step process of fine-tuning the BERT-tiny model using the M-FAC optimizer on the MNLI dataset. Whether you are a seasoned AI developer or just starting your journey in natural language processing, this guide aims to be...