Deep learning has ushered in a new era of natural language processing (NLP), and one remarkable player in this field is SqueezeBERT. It's not just another variant of BERT; this model is designed for efficiency without sacrificing the performance that BERT is known...

How to Use the RDR Question Encoder

In the world of artificial intelligence and natural language processing, effective retrieval of information is crucial. The RDR (Reader-Distilled Retriever) model stands out as a powerful approach that integrates the benefits of both the retriever and the reader...

How to Transform Informal English to the Style of Abraham Lincoln

In today's world, we often find ourselves navigating the delightful chaos of informal speech. But what if you wanted to add a touch of grandeur and eloquence reminiscent of historical figures like Abraham Lincoln? Fear not! This article will guide you step-by-step on...

How to Use the Monkey Model for Improved Image and Text Understanding

If you're looking to enhance your artificial intelligence projects with improved image captioning and text analysis, the Monkey model is a fantastic choice. Developed by researchers at Huazhong University of Science and Technology and Kingsoft, this model efficiently...

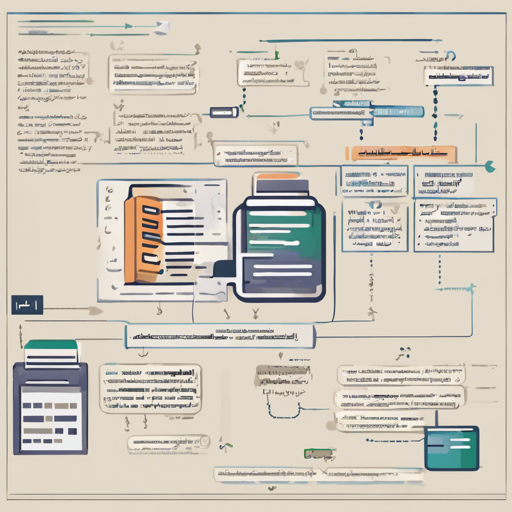

How to Implement a Host Model for Named Entity Recognition in Microbiome Analyses

Welcome to your ultimate guide on building a Named Entity Recognition (NER) model specifically designed to identify hosts of microbiome samples in texts. This user-friendly approach will help you navigate the process of leveraging a fine-tuned BioBERT model for your...

Unlocking the Power of Wolof Language with xlm-roberta-base-finetuned-wolof

Welcome to the world of language processing! Today, we're diving into the exciting capabilities of the xlm-roberta-base-finetuned-wolof model. Fine-tuned on Wolof texts, this model brings a new dimension to named entity recognition in the Wolof language. Let’s explore...

How to Implement DistilBERT with 256k Token Embeddings

DistilBERT is a smaller, faster distillation of BERT, designed to reduce the model size while retaining most of its performance. In this guide, we will explore how to initialize DistilBERT with a 256k token embedding matrix derived from word2vec, which has been fine-tuned through...

Unlocking Temporal Tagging with BERT: A Guide

In the dynamic world of natural language processing, temporal tagging stands as a significant task that allows us to identify and classify time-related information within texts. Leveraging the power of BERT, a transformer-based model, we can achieve remarkable...

How to Use the AraBERTMo Arabic Language Model

If you're looking to harness the power of the Arabic language in your AI applications, the AraBERTMo model is a fantastic choice. This pre-trained language model is based on Google's BERT architecture and is specifically tailored for Arabic. In this article, we will...