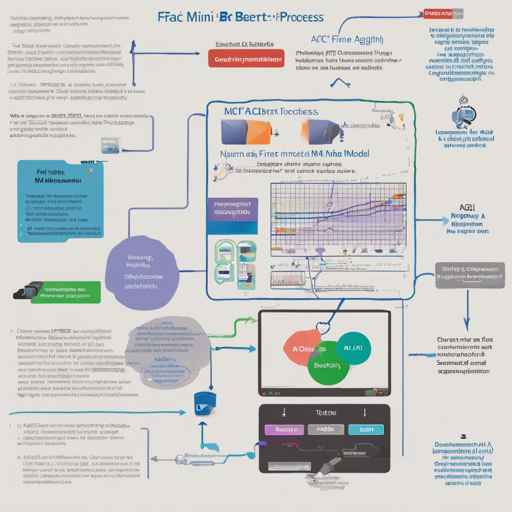

Welcome to a journey where we fine-tune the BERT-mini model using the cutting-edge M-FAC optimizer! This guide will take you through setting up the BERT-mini model for the QNLI dataset and show you how to make the most of this advanced optimizer. By...

How to Quantize Yi-VL-34B and the Visual Transformer

Welcome to this insightful guide on quantizing the Yi-VL-34B model and enhancing its performance through the application of a specific pull request. In this blog post, we will navigate through the steps you need to take to successfully apply these changes and optimize...

Multilingual Joint Fine-tuning of Transformer Models for Cyberbullying Detection

In the age of digital communication, identifying negative online behavior such as trolling, aggression, and cyberbullying is crucial. This blog will guide you through the process of utilizing transformer models for this purpose, based on the findings from the TRAC...

How to Access and Utilize the spiderverse-v1-pruned Model

The spiderverse-v1-pruned model is an intriguing tool for AI enthusiasts and developers, designed for generating creative outputs under the CreativeML OpenRAIL-M license. This article will guide you through downloading and using this model, adhering to the specified...

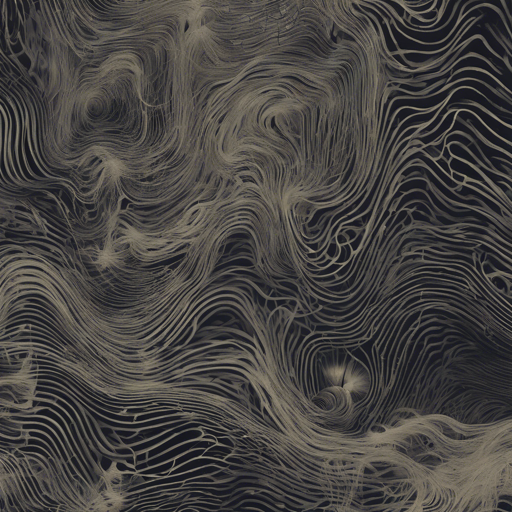

How to Use the Ito Junji Diffusion Model

If you're a fan of the eerie and intricate art style of Junji Ito, you'll be thrilled with the capabilities of the Ito Junji Diffusion model. This model was trained on a large collection of images from Junji Ito's manga, making it an incredible tool for...

How to Use and Understand the test_trainer_1 Financial Model

If you're venturing into the world of financial NLP (Natural Language Processing), you might come across the test_trainer_1 model. This guide will walk you through understanding this financial model, its architecture, intended uses, and possible troubleshooting steps....

How to Utilize the MultiLegalSBD: A Multilingual Legal Sentence Boundary Detection Dataset

Welcome to an insightful journey into the realm of Natural Language Processing (NLP) and sentence boundary detection (SBD) in the legal domain. Today, we will explore how to work with the "MultiLegalSBD" dataset, which heralds a new dawn in multilingual SBD,...

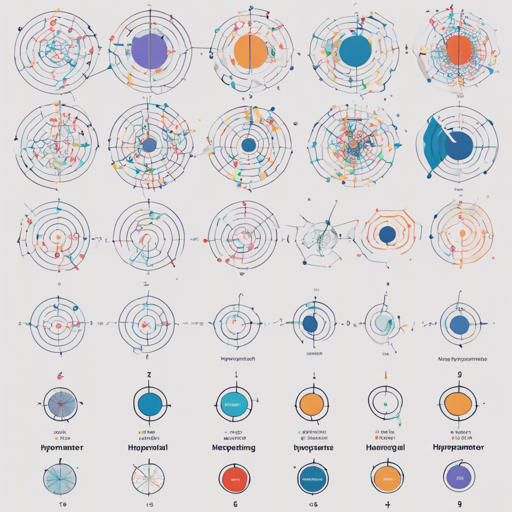

Mastering Hyperparameters in Deep Learning: A Quick Guide

Deep learning can seem daunting, especially when you're faced with a plethora of hyperparameters that need tuning. In this blog, we'll delve into some key hyperparameters—max sequence length, batch size, learning rate, and more—to better understand their roles and how...

Using a Tokenizer for the Myanmar Language

In the world of natural language processing (NLP), tokenization is a crucial step that prepares text for analysis by splitting it into manageable pieces, or tokens. This blog will focus on tokenizer usage for the Myanmar language and how closely it resembles that of...