Meta's Llama-3 8B model has been making waves in the AI community, primarily due to its unique approach to providing helpfulness without compromising on ethical boundaries. In this guide, we will walk you through using this powerful tool, ensuring you can harness...

How to Use PhoGPT: Generative Pre-training for Vietnamese

Welcome to your exciting journey into the world of AI language models with PhoGPT! In this article, we will guide you through using the PhoGPT model series, including PhoGPT-4B and its interactive chat variant, PhoGPT-4B-Chat. Whether you are a developer, researcher,...

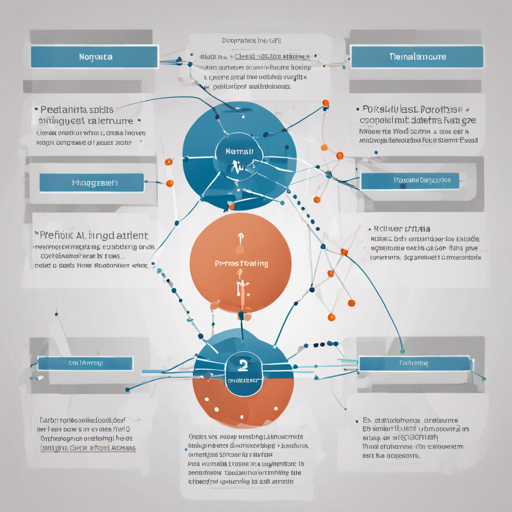

How to Fine-tune BERT-mini Model with M-FAC

In the world of Natural Language Processing (NLP), fine-tuning models for specific tasks can significantly enhance performance. In this guide, we will walk you through the process of fine-tuning the BERT-mini model using the M-FAC optimizer, an innovative approach...

Pretraining RoBERTa on Smaller Datasets: A Step-by-Step Guide

If you're diving into the world of Natural Language Processing (NLP) and want to explore how to pretrain the powerful RoBERTa model on smaller datasets, you've landed in the right place! In this article, we’ll take you through the process, including the essential...

How to Summarize Open-Domain Code-Switched Conversations with Gupshup

Welcome to our guide on utilizing the Gupshup framework for summarizing open-domain code-switched conversations, particularly useful for handling Hinglish dialogues. This easy-to-follow article will walk you through the steps to set up your environment, understand the...

How to Create a Chat-With-PDF Script Using Google Gemini Pro Models

Are you looking to have an interactive experience with your PDF or .docx files right from your Windows CMD console? Today, we will guide you through creating a Python script that utilizes Google Gemini Pro models with your own API key. This article will walk you...

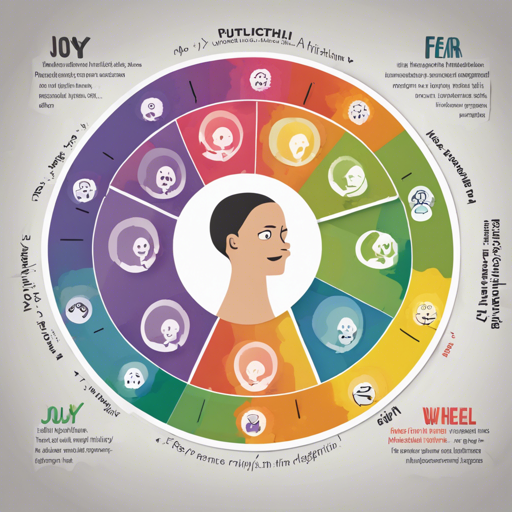

How to Understand and Utilize the Plutchik Emotion Model in AI Development

In the world of artificial intelligence, understanding emotions is pivotal for developing responsive and intuitive applications. Here, we’ll dive deep into a model that leverages Plutchik's wheel of emotions, trained on the XED dataset, while discussing its...

Creating Stunning Dragons with Dragon Diffusion

Are you ready to embark on an enchanting journey into the world of digital dragons? If you’ve ever dreamt of designing fantastical dragons using AI, the Dragon Diffusion model is your key to success. This blog will guide you through how to use this Dreambooth model...

How to Download and Extract Models from WeNet E2E

If you're looking to leverage the power of WeNet End-to-End (E2E) models, you’ll find this guide useful. In this article, we will walk you step-by-step through the process of downloading and extracting a model from GitHub. Step 1: Downloading the Model. The first step...