In this guide, we will explore how to use the RoBERTa model pre-trained on Thai Wikipedia texts for POS tagging and dependency parsing. This model significantly enhances the way we can interpret the Thai language through advanced natural language processing...

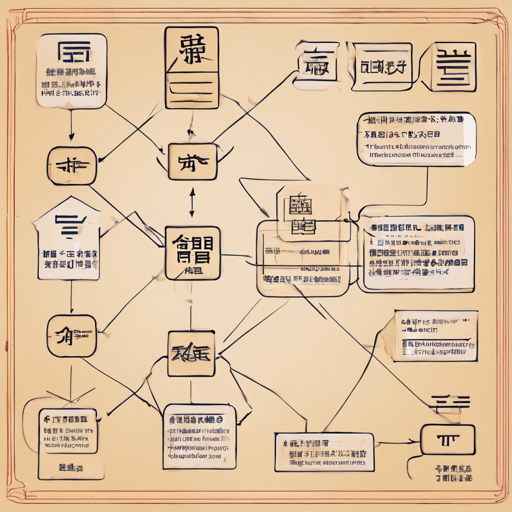

How to Use the RoBERTa Base Chinese UPOS Model for Token Classification

The RoBERTa Base Chinese UPOS model is a remarkable tool designed for part-of-speech tagging and dependency parsing in the Chinese language. This model is pre-trained on a wealth of data from Chinese Wikipedia, supporting both simplified and traditional scripts. With...

How to Use the Axiom Model for Basic Arithmetic Operations

Welcome to the future of arithmetic! The Axiom Model is an innovative neural network designed to execute basic arithmetic operations like addition, subtraction, multiplication, and division. In this guide, we will unveil a step-by-step process to utilize this beta...

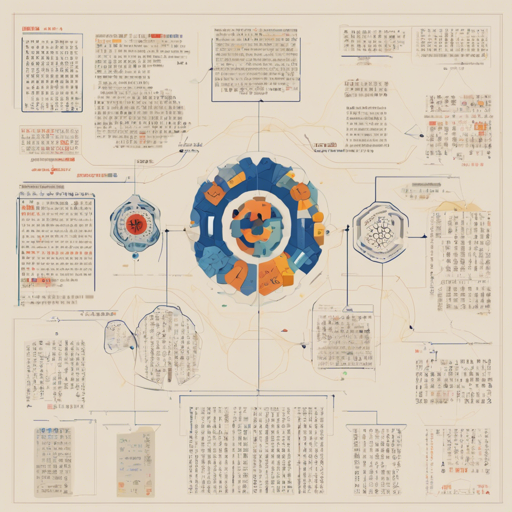

Exploring the RoBERTa-Large Korean Hanja Model: A Step-by-Step Guide

Welcome to the fascinating world of Natural Language Processing (NLP) with the RoBERTa-Large Korean Hanja model! This article will guide you through the process of utilizing this powerful model for various tasks. Let’s dive into the intricacies of working with this...

How to Work with the Tokyotech LLM Swallow Model

The Tokyotech LLM Swallow (MS-7b v0.1) is an exciting model designed for various AI applications. Today, we’ll explore how to utilize this model, particularly its quantized versions, and provide a step-by-step guide to ensure a smooth experience. Think of it like a...

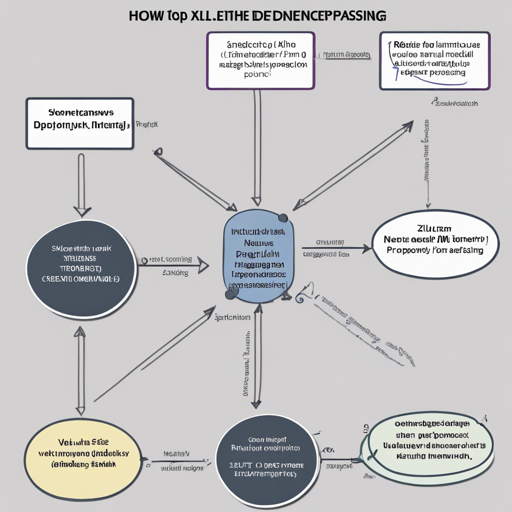

How to Use the XLM-RoBERTa Model for POS Tagging and Dependency Parsing

Natural Language Processing (NLP) now offers remarkable tools that help us understand languages better, and one of them is XLM-RoBERTa. This guide will walk you through using the XLM-RoBERTa model pre-trained for Part-of-Speech (POS)...

Getting Started with RoBERTa for Japanese Token Classification

If you're venturing into Natural Language Processing (NLP), specifically in Japanese language tasks like Part-of-Speech (POS) tagging and dependency parsing, this guide will help you use the RoBERTa model effectively. What is RoBERTa? RoBERTa (A Robustly Optimized...

How to Utilize the Dolus 14b Mini Model from Cognitive Machines Labs

Welcome to your comprehensive guide on using the Dolus 14b Mini model! If you're venturing into the realm of natural language processing and have landed at the doorstep of this exciting model, you're in for a treat. With its impressive capabilities, the Dolus 14b Mini...

How to Use the RoBERTa Japanese POS-Tagging and Dependency Parsing Model

In this article, we will explore the process of utilizing the RoBERTa model tailored for Japanese language tasks, specifically focusing on part-of-speech (POS) tagging and dependency parsing. This model, known as roberta-base-japanese-luw-upos, has been pre-trained to...