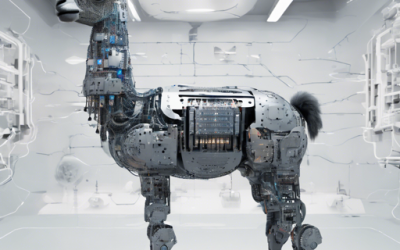

Welcome to our guide on how to effectively use the Vikhr-Llama-3.2-1B-Instruct model! This innovative model, based on the Llama architecture, is fine-tuned specifically to perform well on Russian-language output. Designed for low-power and mobile devices,...

How to Utilize the Qwen2.5 Language Model

The Qwen2.5 language model is an advanced tool designed for a wide range of applications, particularly those that call for strong coding and mathematics capabilities. Whether you're a researcher, developer, or AI enthusiast, this guide will walk you through the essentials of...

How to Use the all-mpnet-base-v2 Model for Sentence Embeddings

If you've ever wondered how machines understand the essence of sentences or paragraphs, you're in the right place! Today, we're going to explore the all-mpnet-base-v2 model from the sentence-transformers library. This powerful tool maps sentences into a...
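
To make the idea concrete: once a model such as all-mpnet-base-v2 has turned each sentence into a fixed-length vector (in sentence-transformers, this is what `SentenceTransformer("sentence-transformers/all-mpnet-base-v2").encode(...)` returns), semantic similarity is commonly measured with cosine similarity. The sketch below implements that measure in plain Python. The short vectors named `cat`, `kitten`, and `invoice` are hypothetical stand-ins, not real model output.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional vectors standing in for real model embeddings.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.8, 0.2, 0.1, 0.2]
invoice = [0.0, 0.1, 0.9, -0.3]

# Related sentences should score closer to 1.0 than unrelated ones.
print(f"cat vs kitten:  {cosine_similarity(cat, kitten):.3f}")
print(f"cat vs invoice: {cosine_similarity(cat, invoice):.3f}")
```

Real embeddings have many more dimensions, but the comparison works the same way.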

How to Enhance Your ChatML Merges with MergeKit

Are you looking to improve your ChatML merges but facing challenges? Fear not! In this article, we'll show you how to enhance your ChatML merges using a library called MergeKit. We'll walk through the necessary steps, helpful settings to consider, and potential...
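
MergeKit supports several merge methods. The simplest one in its documentation, `linear`, takes a weighted average of corresponding parameters from models that share an architecture. The toy function below illustrates that arithmetic on flattened parameter lists. It is a hypothetical sketch of the idea, not MergeKit's implementation, and its `normalize` flag only loosely mirrors the option of the same name in MergeKit's configuration.

```python
def linear_merge(
    tensors: list[list[float]], weights: list[float], normalize: bool = True
) -> list[float]:
    """Weighted average of same-shaped (flattened) parameter tensors."""
    if not tensors or len(tensors) != len(weights):
        raise ValueError("need exactly one weight per tensor")
    size = len(tensors[0])
    if any(len(t) != size for t in tensors):
        raise ValueError("all tensors must have the same shape")
    total = sum(weights)
    if normalize and total == 0:
        raise ValueError("weights sum to zero; cannot normalize")
    merged = []
    for i in range(size):
        # Weighted sum of the i-th parameter across all models.
        value = sum(w * t[i] for t, w in zip(tensors, weights))
        # Dividing by the weight total makes the result a true average.
        merged.append(value / total if normalize else value)
    return merged


# Hypothetical parameters of the same layer in two fine-tuned models.
model_a = [1.0, 2.0, 3.0]
model_b = [3.0, 2.0, 1.0]
print(linear_merge([model_a, model_b], weights=[0.7, 0.3]))
```

The shape check matters: averaging parameters only makes sense when every model has the same architecture, so each tensor lines up element by element.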

How to Use the Qwen2.5-Coder Model for AI Applications

Welcome to the ultimate guide on using the Qwen2.5-Coder model, a cutting-edge tool for AI enthusiasts and developers. In this article, we’ll explore how to integrate this remarkable model into your projects, how to work with its quantized files, and how to resolve potential...
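
Quantized model files typically store weights as low-bit integers together with scaling factors, which is where the size savings come from. The sketch below shows one generic scheme, symmetric absmax quantization applied block by block. It illustrates the principle under that assumption and is not the exact layout of any particular file format; the function names are hypothetical.

```python
def quantize_block(values: list[float], bits: int = 8) -> tuple[float, list[int]]:
    """Symmetric absmax quantization of one block to signed integers."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit codes
    scale = max(abs(v) for v in values) / qmax
    if scale == 0.0:
        # An all-zero block: every code is zero.
        return 0.0, [0] * len(values)
    return scale, [round(v / scale) for v in values]


def dequantize_block(scale: float, codes: list[int]) -> list[float]:
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def quantize(values: list[float], block_size: int = 4, bits: int = 8):
    """Split values into blocks and quantize each with its own scale."""
    return [
        quantize_block(values[i : i + block_size], bits)
        for i in range(0, len(values), block_size)
    ]


def dequantize(blocks) -> list[float]:
    """Concatenate the dequantized contents of every block."""
    restored: list[float] = []
    for scale, codes in blocks:
        restored.extend(dequantize_block(scale, codes))
    return restored


weights = [0.5, -1.2, 3.3, 0.0, -0.01, 2.2, -7.5, 4.0, 0.25]
blocks = quantize(weights, block_size=4)
restored = dequantize(blocks)
print("max reconstruction error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each restored value differs from the original by at most half of its block's scale, which is why smaller blocks (with tighter value ranges) usually preserve more precision at the cost of storing more scales.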

How to Use EvolveDirector for Advanced Text-to-Image Generation

Welcome to the world of advanced text-to-image generation, where imagination meets technology! In this post, we will guide you through the setup and usage of the EvolveDirector model, a cutting-edge tool developed by the EvolveDirector team. Let's get started! Setting Up...

How to Leverage the Galgame Whisper Model for Speech Recognition

Welcome to the world of Galgame Whisper, a budding star in the realm of speech recognition! If you're interested in exploring this innovative model, you're in the right place. In this guide, we'll walk you through how to utilize the Galgame Whisper model and...

How to Fine-Tune Llama 3.2 with Unsloth: A Step-by-Step Guide

Fine-tuning your Llama 3.2 model just got a whole lot faster and more efficient! With the Unsloth framework, you can achieve remarkable speed improvements while using significantly less memory. Here, we will break down the process to make it user-friendly so that even...

How to Generate Stunning Images Using an iPhone 15-Style LoRA with Diffusers

Welcome to a creative journey where coding meets imagination! This article will guide you through the process of using an iPhone 15-style LoRA (Low-Rank Adaptation) to generate captivating images. Whether you are a beginner or have some experience, you'll find...
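
Under the hood, a LoRA adapter does not replace the base model's weights. In the formulation from the original LoRA paper, a frozen weight matrix W (d × k) is adjusted by a low-rank product B A, with B of shape d × r and A of shape r × k, scaled by alpha / r. The plain-Python sketch below applies that update to a tiny matrix; `apply_lora` and `matmul` are hypothetical helpers for illustration. In Diffusers itself, adapters are attached to a pipeline with `load_lora_weights(...)` rather than by hand.

```python
Matrix = list[list[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product x @ y."""
    inner = len(y)
    if any(len(row) != inner for row in x):
        raise ValueError("inner dimensions do not match")
    cols = len(y[0])
    return [
        [sum(row[t] * y[t][j] for t in range(inner)) for j in range(cols)]
        for row in x
    ]


def apply_lora(weight: Matrix, lora_b: Matrix, lora_a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A), leaving W itself untouched."""
    delta = matmul(lora_b, lora_a)  # shape d x k, but rank at most r
    if len(delta) != len(weight) or len(delta[0]) != len(weight[0]):
        raise ValueError("B @ A must have the same shape as W")
    scale = alpha / rank
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Tiny hypothetical example: W is 2 x 3 and the adapter has rank r = 1.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
B = [[1.0], [2.0]]      # d x r
A = [[0.5, 0.0, -0.5]]  # r x k
print(apply_lora(W, B, A, alpha=2.0, rank=1))
```

The savings come from the rank: for a 1024 × 1024 layer with r = 8, the adapter holds 8 × (1024 + 1024) = 16,384 trainable parameters instead of 1,048,576.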