The Absolute-Rating Multi-Objective Reward Model (ArmoRM), powered by Mixture-of-Experts (MoE), is a revolutionary approach for evaluating AI responses across multiple objectives. If you're stepping into the realm of AI model evaluations, this guide will ensure you have...

How to Harness the Power of the Phi-3.5-mini Instruct Model

In the ever-evolving world of artificial intelligence, the Phi-3.5-mini model stands out as a lightweight yet robust tool for those looking to enhance their NLP capabilities. In this guide, we'll walk you through how to use this model effectively, along with...

How to Work with the QuantFactory Model: A Comprehensive Guide

The QuantFactory Mistrilitary-7b-GGUF is a specialized machine learning model fine-tuned on US Army field manuals. This article will guide you through the setup and provide insights on how to effectively use this model for factual question-and-answer tasks. Getting...

How to Utilize the Falcon Mamba 7B Language Model

The Falcon Mamba 7B is an advanced language model designed to make text generation tasks smoother and more effective. In this article, we’ll explore how to use this model effectively, dive into its technical aspects, and troubleshoot common issues. Table of Contents...

How to Utilize ChemLLM-7B-Chat: Your Go-To Model for Chemistry and Molecule Science

Welcome to the fascinating world of ChemLLM-7B-Chat, an innovative open-source large language model tailored specifically to chemistry and molecule science! If you're eager to delve into the essentials of this powerful tool, this guide will walk you through its setup,...

How to Effectively Fine-Tune and Use the xomadgliner-model-merge-large-v1.0 for Named Entity Recognition

In the world of Natural Language Processing, fine-tuning a model is akin to polishing a diamond: it can turn a rough initial model into a brilliant tool for various applications. Today, we’ll explore how to fine-tune and use the xomadgliner-model-merge-large-v1.0...

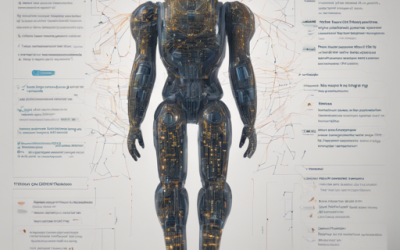

How to Harness the Power of Qwen2-VL-72B Instruct

Welcome to the future of visual language models with **Qwen2-VL**, an advanced system designed to revolutionize how we interpret and interact with images and videos. This guide will walk you through the features, functionalities, and how to get started with...

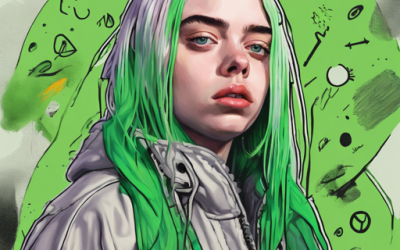

How to Create Stunning Images of Billie Eilish Using Text-to-Image Generators

Are you an aspiring visual artist, or do you simply want to capture the essence of Billie Eilish through captivating images? Using a text-to-image generator can be an exciting way to bring your creative visions to life. In this guide, we will explore how to leverage text...

How to Leverage ESPnet and VITS for Advanced Speech Processing

In today's technologically driven world, speech processing plays a pivotal role in enhancing communication between humans and machines. With the rise of AI frameworks like ESPnet and VITS, developers can create exceptional speech synthesis and recognition...