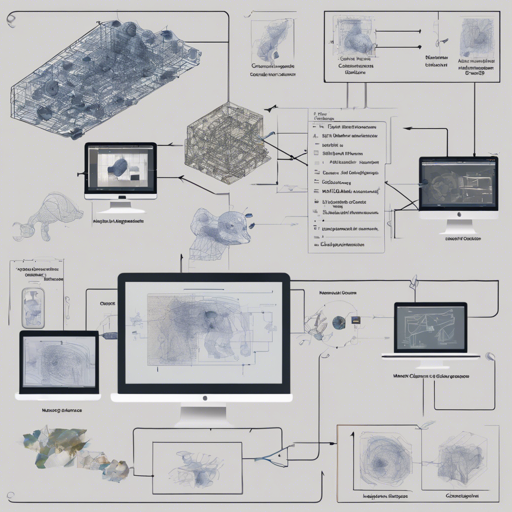

Image classification keeps evolving, and one of the more recent entries is the ConvNeXt_Pico model, designed by Ross Wightman. In this article, we'll walk step by step through how to use this model to classify images, extract feature...

How to Leverage the ConvNeXt Nano for Image Classification

The world of image classification is continuously evolving, and the ConvNeXt Nano model is a prime example of how far we've come. Developed by Ross Wightman and trained on the renowned ImageNet-1k dataset, this model stands out due to its efficiency and performance....

A Comprehensive Guide to Using the ConvNeXt Image Classification Model

The ConvNeXt model, specifically `convnext_atto_ols.a2_in1k`, is an image classification model developed by Ross Wightman. This guide provides a user-friendly walkthrough of how to use this model for your image classification tasks, along...

How to Use CapyLake-7B-v2-Laser for AI Development

The CapyLake-7B-v2-Laser model is designed for generating rich and creative text outputs. Fine-tuned from the WestLake-7B-v2-Laser model, it uses the argilla/distilabel-capybara-dpo-7k-binarized dataset to enhance its performance. In this...

How to Use ResNet-B (resnet18.a1_in1k) for Image Classification

In this guide, we will walk through how to utilize the ResNet-B model for image classification using the timm library. We will cover the essential steps, including setting up the environment, loading images, and making predictions. If you encounter any issues along...

How to Generate Dark Fantasy Images Using Stable Diffusion

Welcome to the magical world of image generation! In this article, we will guide you through the process of creating stunning dark fantasy-themed images inspired by the artistic styles of the 1970s and 1980s using the Stable Diffusion model. Whether you're a seasoned...

A Guide to Using Mixtral Instruct with AWQ Quantization

Welcome to our step-by-step guide to using Mixtral Instruct, a model that has been optimized through AWQ quantization. This post will help you work with the model, providing tips, troubleshooting support, and insights into the world of...

How to Build a Russian Mistral-based Chatbot with SaigaMistral 7B

In the realm of AI development, creating conversational agents has never been more exciting. Today, we’ll explore how to build a chatbot named Saiga, based on the impressive Mistral 7B architecture. This guide is user-friendly, perfect for developers keen on working...

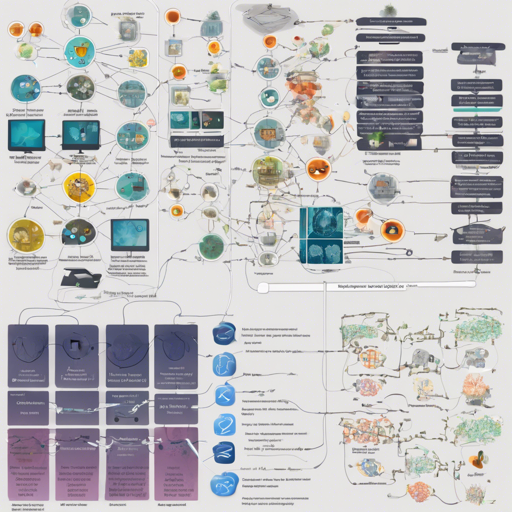

How to Use the CLIP Model with Sentence Transformers

In the rapidly evolving world of artificial intelligence, the CLIP (Contrastive Language-Image Pretraining) model offers an exciting way to connect images and text through the power of shared vector spaces. With libraries such as sentence-transformers, implementing...