Welcome to this exciting journey of analyzing job data from Lagou. In this post, we will break down the process step by step, making it approachable even for those new to data analysis and web scraping. We’ll also explore how to troubleshoot common issues you might encounter along the way. So, let’s dive in!

Introduction

This repository contains code for analyzing job postings scraped from Lagou. Its main features are:

- Crawling job data from Lagou to get the latest job information in the Internet sector.

- Collecting IP proxies from XiCiDaiLi.

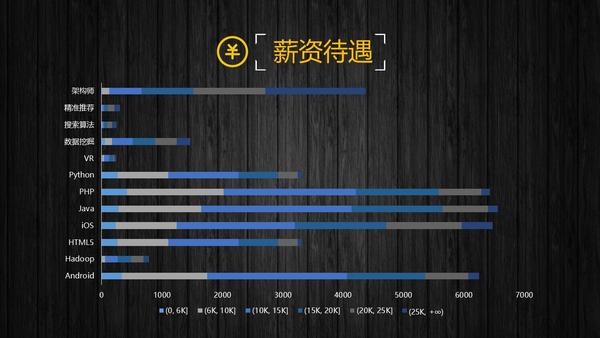

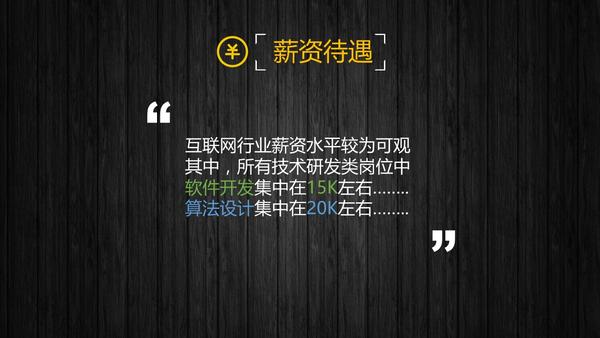

- Performing data analysis and visualization.

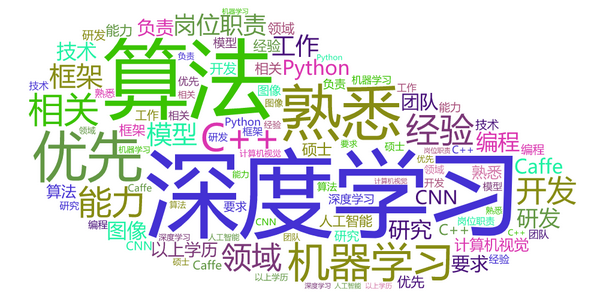

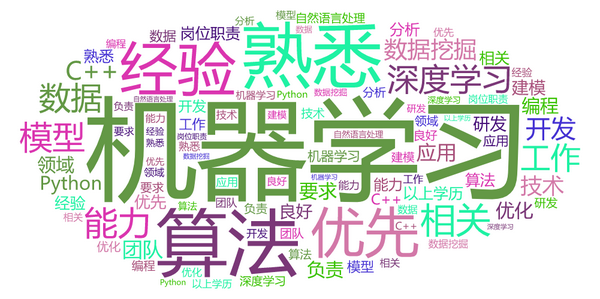

- Crawling job details and generating a word cloud, which we call __Job Impression__.

- Storing interviewee comments in MongoDB as training data for a machine-learning NLP task.
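To make the proxy feature more concrete, here is a minimal sketch of how a pool of collected proxies might be rotated across requests. It is not the project's `proxy_crawler.py`. The `ProxyPool` class, its methods and the `host:port` proxy format are hypothetical, and the sketch uses only the standard library's `urllib.request.ProxyHandler`.

```python
import urllib.request


class ProxyPool:
    """Hypothetical round-robin pool of HTTP proxies written as "host:port"."""

    def __init__(self, proxies):
        # dict.fromkeys drops duplicates while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        self._index = 0

    def next_proxy(self):
        """Return the next proxy in rotation, wrapping around at the end."""
        if not self._proxies:
            raise RuntimeError("proxy pool is empty; collect new proxies first")
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def discard(self, proxy):
        """Drop a proxy that failed, e.g. after a timeout or a block page."""
        if proxy in self._proxies:
            self._proxies.remove(proxy)

    def opener(self):
        """Return (proxy, opener), where the opener routes requests via that proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({
            "http": f"http://{proxy}",
            "https": f"http://{proxy}",
        })
        return proxy, urllib.request.build_opener(handler)
```

In a crawl loop you would call `opener()` before each request and `discard()` the proxy whenever a request through it times out or gets blocked. Round-robin is only one simple policy. Picking a proxy at random would slot into `next_proxy()` just as easily.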

Prerequisites

Before you start, ensure you have the following prerequisites installed:

- 3rd party libraries – install them with:

`sudo pip3 install -r requirements.txt`

- MongoDB – start the service with:

`sudo service mongod start`

How to Use
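Before running the scripts, you may want to confirm that MongoDB is accepting connections and that Lagou is reachable from your machine. The helper below is a hypothetical sketch and is not part of the project. It assumes MongoDB listens on its default port 27017 locally and that Lagou is served over HTTPS (port 443) at `www.lagou.com`.

```python
import socket


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        # create_connection resolves the host name and tries each address in turn;
        # the with-block closes the socket again right away.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass) and DNS failures (socket.gaierror is also an OSError).
        return False
```

For example, `is_reachable("localhost", 27017)` should return `True` once `mongod` is running, and `is_reachable("www.lagou.com", 443)` checks basic connectivity to Lagou. Note that a successful TCP connection says nothing about whether Lagou's anti-spider measures will let the crawler through.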

With the setup complete, here is how to use the tool:

- Clone this project from GitHub.

- Because Lagou frequently updates its anti-spider strategy, run `proxy_crawler.py` to acquire IP proxies before running the code that drives PhantomJS.

- Run `m_lagou_spider.py` to crawl job data. It generates a collection of Excel files in the `.data` directory.

- Run `hot_words_generator.py` to process the sentences and generate the __TOP-30__ hot words along with a word cloud visualization.
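At its core, the hot-words step counts token frequencies and keeps the most common ones. The sketch below is not the project's `hot_words_generator.py`. It assumes the text has already been segmented into space-separated words, since Chinese job descriptions would first need a word segmenter, which is outside this sketch. The `top_hot_words` function and the tiny stop-word set are hypothetical.

```python
from collections import Counter

# Hypothetical, deliberately tiny stop-word set; a real one would be much larger.
STOP_WORDS = {"and", "the", "of", "to", "with", "a", "in"}


def top_hot_words(sentences, n=30, stop_words=STOP_WORDS):
    """Return the n most frequent words as (word, count) pairs.

    Assumes each sentence is already segmented into space-separated tokens.
    """
    counter = Counter()
    for sentence in sentences:
        for token in sentence.lower().split():
            token = token.strip(".,;:!?()")  # trim surrounding punctuation
            if token and token not in stop_words:
                counter[token] += 1
    return counter.most_common(n)


sentences = [
    "Python and MongoDB experience",
    "Experience with Python crawlers",
    "Java or Python",
]
print(top_hot_words(sentences, n=2))  # [('python', 3), ('experience', 2)]
```

If you render the cloud with the `wordcloud` package, `dict(top_hot_words(...))` has the word-to-frequency shape that `WordCloud.generate_from_frequencies` accepts. Whether the project itself uses that package is not stated here.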

Understanding the Code: An Analogy

Think of coding as baking a cake. Each ingredient in your recipe represents a line of code, working in harmony to create the final product. Just like how you need to measure your flour, sugar, and eggs precisely, each line of code needs to be written correctly to ensure the operations run smoothly.

Your crawler is like a mixer that whips the ingredients together: it fetches the job data, which is the batter ready to be baked. The visualizations you generate are the icing that presents the results of your work. And just as you wouldn’t skip checking your oven temperature, monitoring for errors and tweaking your code is vital to success!

Analysis Results

Here are some results of our data analysis:

Troubleshooting

If you encounter issues such as failure to scrape data or empty Excel files, consider the following troubleshooting tips:

- Make sure your IP proxies are working. Re-run the `proxy_crawler.py` script to collect fresh proxies.

- Check your PhantomJS installation and make sure you are running its latest release to avoid compatibility issues. Note that PhantomJS development has been suspended, and recent Selenium releases have dropped support for it.

- Check your internet connection to make sure the crawler can reach Lagou.
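To act on the first tip, a quick way to tell whether a collected proxy still works is to send one small request through it with a short timeout. This is a hypothetical helper rather than part of `proxy_crawler.py`. The default test URL `http://httpbin.org/ip` is an assumption, and any lightweight page you trust would do.

```python
import http.client
import urllib.request


def proxy_is_alive(proxy, test_url="http://httpbin.org/ip", timeout=5.0):
    """Return True if a GET through proxy ("host:port") gets a 2xx answer in time."""
    handler = urllib.request.ProxyHandler({
        "http": f"http://{proxy}",
        "https": f"http://{proxy}",
    })
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # URLError and HTTPError are OSError subclasses: they cover refused
        # connections, DNS failures and non-2xx replies. Socket timeouts are
        # OSError too. HTTPException and ValueError cover malformed proxy
        # strings and broken responses.
        return False


def filter_live_proxies(proxies, **kwargs):
    """Keep only the proxies for which proxy_is_alive returns True."""
    return [p for p in proxies if proxy_is_alive(p, **kwargs)]
```

Checking proxies one at a time is slow for large lists. A thread pool (for example `concurrent.futures.ThreadPoolExecutor`) could run the checks in parallel, but the sequential version keeps the idea clear.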


Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Additional Resources

For technical details, please refer to my answer at Zhihu. The PDF report can also be downloaded here.

Change Log

- [V2.0] – 2019.04. Upgraded to PhantomJS and IP proxies.

- [V1.2] – 2017.05. Rewrote the WordCloud visualization module.

- [V1.0] – 2017.04. Upgraded to the mobile version of Lagou.

- [V0.8] – 2016.05. Finished the Lagou PC web spider.

Happy coding!