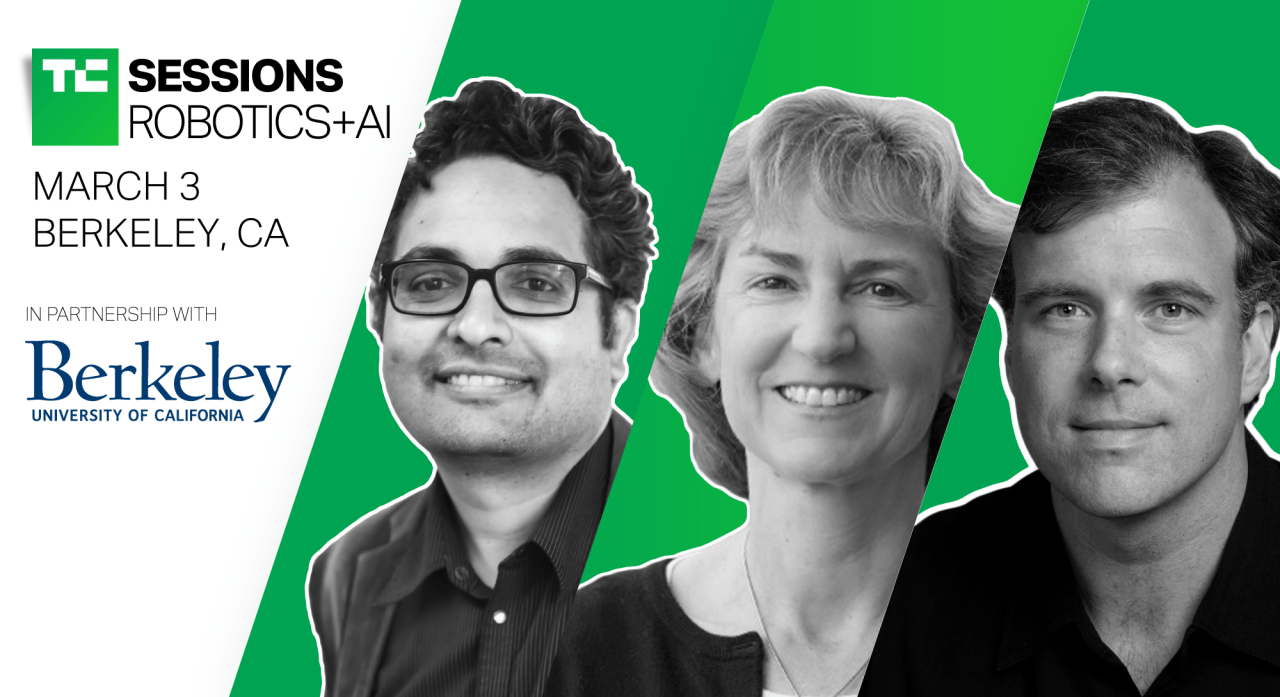

As artificial intelligence continues to seep into every facet of our lives—from smart home devices and autonomous cars to AI-driven decision-making in businesses—it is crucial for us to comprehend the ‘how’ and ‘why’ behind the decisions made by these complex systems. This is where the concept of explainable AI (XAI) comes into play. On March 3, industry professionals and academic experts will unite on stage at TC Sessions: Robotics+AI to engage in meaningful dialogue about the intricacies of making AI systems more transparent.

What Does Explainable AI Really Mean?

At its core, explainable AI is about transparency. It’s the process of making AI’s decision-making pathways comprehensible to human users. But understanding doesn’t merely entail breaking down code into layman’s terms; it involves a comprehensive analysis of model choices and the implications they hold. As AI models become increasingly complex and opaque, the challenge lies in presenting this information in a way that is accessible and usable without oversimplifying critical aspects.
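To make the idea concrete, here is a minimal sketch of one very simple form of explanation: attributing a model's output to its individual inputs. Everything in it is an assumption for illustration only. The linear scoring model, its weights, the feature names, and the `predict` and `explain` helpers are hypothetical and do not come from any panelist's work or any real system. Real-world models are rarely this simple, which is exactly why explainability is hard.

```python
# Hypothetical linear scoring model. The weights, bias, and feature names
# are illustrative assumptions, not taken from any real system.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = 0.1


def predict(features):
    """Return the model's score for one input."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(features, baseline):
    """Attribute the score difference from a baseline input to each feature.

    For a linear model, each feature's contribution is
    weight * (value - baseline value), and the contributions sum to
    predict(features) - predict(baseline).
    """
    return {
        name: WEIGHTS[name] * (features[name] - baseline[name])
        for name in WEIGHTS
    }


applicant = {"income": 2.0, "debt": 1.5, "years_employed": 1.0}
baseline = {"income": 1.0, "debt": 1.0, "years_employed": 1.0}

contributions = explain(applicant, baseline)

# Print the features ordered by how strongly they moved the score.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

For a linear model this breakdown is exact. For the complex, opaque models described above, comparable attributions can only be approximated, and deciding how much to trust those approximations is part of the challenge.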

The Panel of Experts

The panel at TC Sessions will feature:

- Trevor Darrell: A professor from UC Berkeley’s Computer Science department, Trevor’s research aims to enhance human-AI interactions while addressing smart transportation’s evolving landscape. His views on perception and human understanding will offer valuable insights.

- Krishna Gade: With experience at Facebook, Pinterest, Twitter, and Microsoft, Krishna has witnessed firsthand how flaws in AI development can lead to unintentional biases. As a co-founder of Fiddler, he has been working toward solutions that foster greater fairness and transparency in AI systems.

- Karen Myers: Representing SRI International, Karen brings extensive experience in AI development, with a focus on collaboration and multi-agent systems. As moderator, she is expected to steer the conversation toward practical implementations.

Addressing Key Questions

The panel aims to tackle several pressing questions concerning explainable AI:

- Do we need to start from scratch? As we evolve our understanding of AI, are foundational models still relevant?

- How do we avoid exposing proprietary data and methods? This is a critical concern for many organizations.

- Will there be a performance hit? Will enhancing explainability come at the cost of efficiency or accuracy?

- Whose responsibility is it to ensure proper implementation? Establishing accountability in AI deployment is essential.

The Importance of Explainability

As AI systems integrate into more areas of our lives, the stakes for clear accountability rise. Glossing over explainability may erode trust in AI technologies and slow their acceptance. At the same time, a lack of transparency could perpetuate biases and harmful outcomes, jeopardizing both user safety and corporate integrity.

Conclusion

Technology is advancing rapidly, but with this progression comes the responsibility to ensure that AI remains transparent and fair. The discussions at TC Sessions: Robotics+AI are essential for paving the way for a future where AI can be trusted and understood. As we endeavor to peel back the layers obscuring machine learning algorithms, we are not just making technological advancements—we’re also laying the groundwork for a more ethical AI landscape.

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.