By Radek Daněček · Michael J. Black · Timo Bolkart

CVPR 2022

This repository is the official implementation of the CVPR 2022 paper EMOCA: Emotion-Driven Monocular Face Capture and Animation. For a more advanced face reconstruction network, check the inferno library, specifically its FaceReconstruction module.

Understanding EMOCA

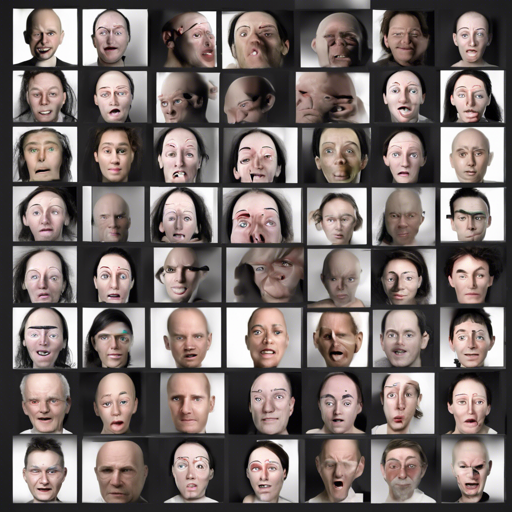

EMOCA is like a digital sculptor that takes a single, candid photograph of a face as its raw material and meticulously crafts a 3D representation that not only resembles the person but also conveys their emotions. Imagine you have a beautiful piece of clay (the image), and with each careful tap and stroke, the sculptor (EMOCA) brings the features of the face to life in a way that captures the subtle nuances of the person’s expression.

With EMOCA, the goal is to accurately capture emotional expressions from images, enabling a realistic portrayal of feelings that could be used in various applications, from animation to virtual reality.

Installation Guide

To unleash the power of EMOCA, follow these installation steps:

Dependencies

- Install conda.

- Install mamba.

- Clone the repository with submodules:

```bash
git clone --recurse-submodules
```

- Run the installation script:

```bash
bash install_38.sh
```

If this procedure ran without any errors, you now have a functioning conda environment!
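In an interactive shell you can switch to the new environment with `conda activate work38_cu11`. A non-interactive script (for example a batch job) usually cannot, because `conda activate` relies on conda's shell hook. After `conda init`, interactive shells load that hook from `~/.bashrc`, but scripts do not. The sketch below loads the hook explicitly before activating. It assumes `conda` is on PATH and that the environment is named `work38_cu11`, as in the rest of this guide. `activate_env` and `ENV_NAME` are hypothetical names, not part of EMOCA.

```bash
#!/usr/bin/env bash
# Sketch: activate the EMOCA conda environment from a non-interactive script.
# activate_env and ENV_NAME are hypothetical names, not part of EMOCA.
ENV_NAME="${ENV_NAME:-work38_cu11}"   # environment name used in this guide

activate_env() {
  if ! command -v conda >/dev/null 2>&1; then
    echo "conda not found on PATH" >&2
    return 1
  fi
  # Load conda's bash integration into the current shell, then activate.
  eval "$(conda shell.bash hook)" || return 1
  conda activate "$1"
}

if activate_env "$ENV_NAME"; then
  echo "active environment: $CONDA_DEFAULT_ENV"
else
  echo "could not activate $ENV_NAME" >&2
fi
```

Because the hook is evaluated inside the function, the activation affects the current shell. That is why the script calls `activate_env` directly instead of in a subshell.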

For those facing installation hurdles

Sometimes installations can be as tricky as assembling IKEA furniture. Here are some common troubleshooting tips:

- If you encounter issues with certain packages (such as PyTorch or its CUDA toolkit), make sure PyTorch is installed with the correct versions:

```bash
mamba install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch
```

Then activate the environment and update OpenCV:

```bash
conda activate work38_cu11
pip install -U opencv-python
```
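After reinstalling, you can confirm the fix by checking which versions the environment's Python actually imports. The sketch below does this under two assumptions. First, `python` on PATH belongs to the activated environment. Second, the expected versions are the ones pinned in the `mamba install` command. `version_matches` and `check_pkg` are hypothetical helpers, not part of EMOCA.

```bash
#!/usr/bin/env bash
# Sketch: compare the PyTorch versions the active environment imports with
# the pinned ones. version_matches and check_pkg are hypothetical helpers.

# Succeeds if the reported version equals the expected one, ignoring a local
# build suffix such as "+cu113" that pip wheels append.
version_matches() {
  local reported="${1%%+*}" expected="$2"
  [ "$reported" = "$expected" ]
}

# Import a module with the environment's python and compare its __version__.
check_pkg() {
  local module="$1" expected="$2" reported
  if ! reported=$(python -c "import $module; print($module.__version__)" 2>/dev/null); then
    echo "$module: not importable" >&2
    return 1
  fi
  if version_matches "$reported" "$expected"; then
    echo "$module $reported OK"
  else
    echo "$module $reported found, expected $expected" >&2
    return 1
  fi
}

# Versions pinned by the mamba command above.
status=0
check_pkg torch 1.12.1 || status=1
check_pkg torchvision 0.13.1 || status=1
check_pkg torchaudio 0.12.1 || status=1
if [ "$status" -eq 0 ]; then echo "all pinned versions found"; fi

# Report whether this PyTorch build can use a CUDA device.
python -c "import torch; print('CUDA available:', torch.cuda.is_available())" 2>/dev/null \
  || echo "could not query CUDA availability" >&2
```

The `+cu113`-style suffix is stripped because the same release can report `1.12.1` or `1.12.1+cu113`, depending on how it was installed.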

Usage Instructions

Once your installation is successful, you can begin using EMOCA:

- Activate the environment:

```bash
conda activate work38_cu11
```

- To run EMOCA examples, navigate to the EMOCA directory.

- For emotion recognition examples, visit the EmotionRecognition directory.

Project Structure

This repository contains two primary subpackages:

- gdl: A library of research code, including deep learning modules, datasets, and utilities.

- gdl_apps: Prototypes built on the gdl library, focused on training, testing, and analyzing models.
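Before navigating to the example directories, it can help to confirm that you are at the repository root. The sketch below makes one assumption that this guide does not confirm: `gdl/` and `gdl_apps/` are top-level directories of the checkout. `is_emoca_checkout` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: check that a directory looks like an EMOCA checkout.
# Assumes (unconfirmed) that gdl/ and gdl_apps/ sit at the repository's
# top level; is_emoca_checkout is a hypothetical helper.
is_emoca_checkout() {
  local root="${1:-.}" sub
  for sub in gdl gdl_apps; do
    # Both subpackages must be present as directories.
    [ -d "$root/$sub" ] || return 1
  done
  return 0
}

if is_emoca_checkout "${1:-.}"; then
  echo "found gdl/ and gdl_apps/"
else
  echo "gdl/ or gdl_apps/ missing; is this the repository root?" >&2
fi
```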


Conclusion

EMOCA is a powerful tool for facial expression capture and animation, using neural networks to turn single images into expressive 3D face models. By following the installation and troubleshooting guidelines above, you can step confidently into this exciting realm.