Data analysis forms the backbone of informed decision-making in today’s digital landscape. Exploratory data analysis (EDA) is the first step in understanding a dataset before building complex models. Mastering EDA techniques enables data professionals to extract meaningful insights and identify hidden patterns within their data.

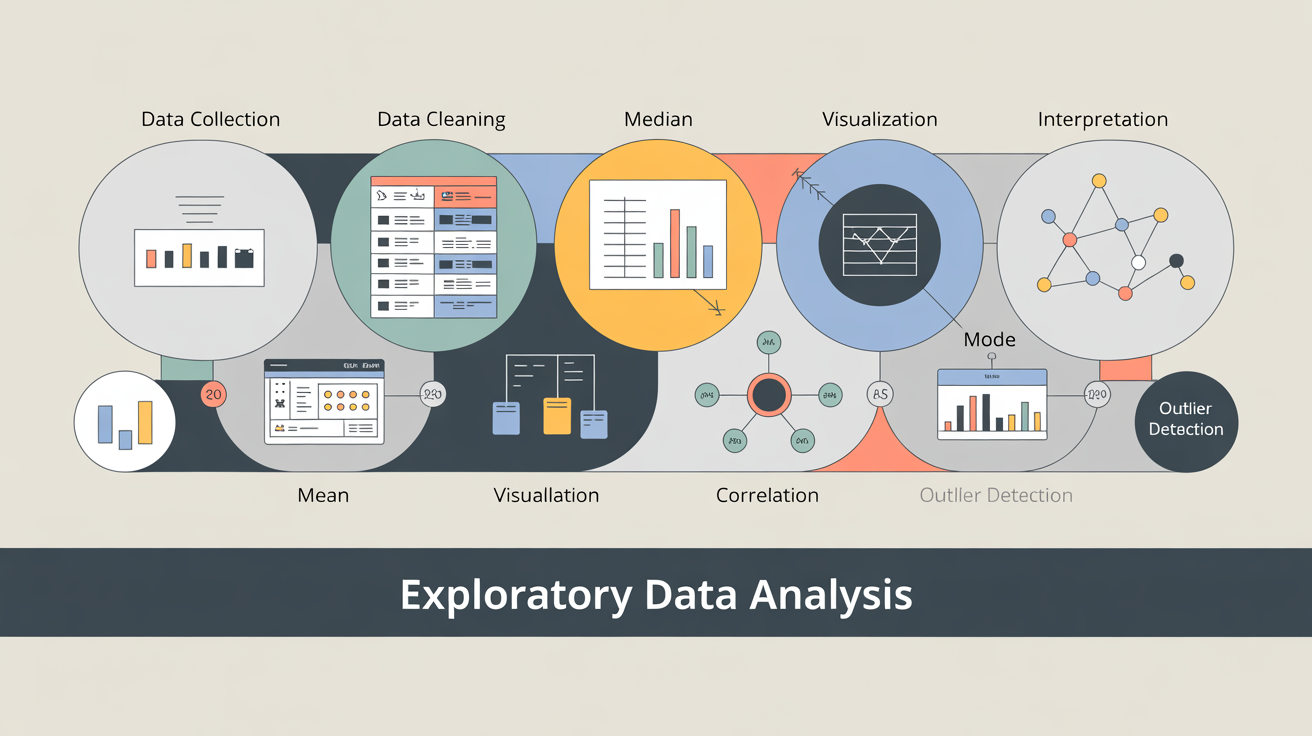

Exploratory Data Analysis is a systematic approach to examining datasets through statistical summaries and visual representations. It helps analysts understand data structure, detect anomalies, and formulate hypotheses for further investigation, which in turn improves the quality of subsequent analytical work.

What is Exploratory Data Analysis?

EDA is the process of analyzing datasets to summarize their main characteristics using visual and statistical methods. It is typically the first step in a data science project, helping data scientists understand data structure, identify patterns, and formulate questions for deeper investigation.

Unlike traditional statistical analysis that tests specific hypotheses, EDA focuses on discovering what the data reveals. This approach bridges raw data and meaningful insights, which makes it a core skill for anyone beginning their data analysis journey.

Descriptive Statistics: Mean, Median, Mode, Standard Deviation

Descriptive statistics provide the foundation for understanding data characteristics. These measures offer quantitative summaries of central tendency and variability, which inform decisions about data preprocessing and modeling approaches.

Mean represents the arithmetic average of all data points. However, it can be sensitive to extreme values or outliers. For instance, in salary data, a few exceptionally high earners might skew the mean upward, making it less representative of typical salaries.

Median is the middle value when data points are arranged in order (for an even number of points, the average of the two middle values). Because it depends only on the ordering of the data, it is a more robust measure of central tendency than the mean, especially for skewed distributions: it remains relatively stable even when extreme values are present.

Mode identifies the most frequently occurring value in a dataset. It is particularly useful for categorical data, where a mean or median cannot be computed. For example, in customer preference surveys, the mode reveals the most popular product choice among respondents.

Standard deviation measures how spread out data points are from the mean, in the same units as the data. A small standard deviation indicates that data points cluster closely around the mean, while a large one indicates greater variability.
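As a minimal sketch of these four measures, the snippet below uses pandas on a small, hypothetical salary series (the numbers are invented for illustration, with one deliberately extreme value). It shows how a single outlier pulls the mean well above the median.

```python
import pandas as pd

# Hypothetical monthly salaries; the last value is a deliberate outlier.
salaries = pd.Series([3000, 3200, 3200, 3500, 3600, 4000, 25000])

mean_salary = salaries.mean()            # arithmetic average, pulled up by the outlier
median_salary = salaries.median()        # middle value of the sorted data
mode_salary = salaries.mode().iloc[0]    # mode() returns a Series; take the first mode
std_salary = salaries.std()              # sample standard deviation (ddof=1 by default)

print(f"mean={mean_salary:.0f}, median={median_salary:.0f}, "
      f"mode={mode_salary}, std={std_salary:.0f}")
```

Here the mean (6500) is almost double the median (3500), which is the kind of gap that suggests checking for skew or outliers before relying on the mean.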

Data Distribution Analysis: Histograms, Box Plots, Q-Q Plots

Understanding data distribution patterns is essential for selecting appropriate analytical techniques. Moreover, visualization tools help reveal the underlying structure of datasets and identify potential issues that might affect analysis outcomes.

Histograms display the frequency distribution of continuous variables. Additionally, they reveal whether data follows normal, skewed, or multimodal distributions. For instance, examining income distribution through histograms often reveals right-skewed patterns, indicating that most people earn moderate incomes while few earn extremely high amounts.

Box plots summarize a distribution through five key statistics: minimum, first quartile, median, third quartile, and maximum. In many plotting libraries the whiskers extend only to the most extreme points within 1.5×IQR of the quartiles, and values beyond them are drawn individually, which is how box plots highlight outliers. They are also well suited to comparing distributions across groups and to spotting data quality issues.

Q-Q plots (quantile-quantile plots) compare the quantiles of sample data against those of a theoretical distribution, such as the normal or exponential. Points that fall close to a straight line indicate that the data follow that distribution, while systematic curvature signals skew or heavy tails. This helps analysts select appropriate statistical tests and modeling approaches.
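The three plot types can be drawn side by side with Matplotlib and SciPy. This is a sketch under stated assumptions: the income data is synthetic (drawn from a log-normal distribution to mimic right skew), and the variable names are illustrative only.

```python
import matplotlib.pyplot as plt
import numpy as np
from scipy import stats

# Synthetic, right-skewed "income" data (an assumption for illustration)
rng = np.random.default_rng(seed=0)
incomes = rng.lognormal(mean=10.0, sigma=0.6, size=500)

fig, axes = plt.subplots(1, 3, figsize=(12, 3.5))

axes[0].hist(incomes, bins=30)
axes[0].set_title("Histogram")

axes[1].boxplot(incomes)
axes[1].set_title("Box plot")

# probplot pairs sample quantiles with normal quantiles and draws a fit line
(osm, osr), (slope, intercept, r) = stats.probplot(incomes, dist="norm", plot=axes[2])
axes[2].set_title("Q-Q plot vs. normal")

# The correlation of the Q-Q points gives a rough numeric summary of the fit;
# here it is recomputed after a log transform for comparison.
_, (_, _, r_log) = stats.probplot(np.log(incomes), dist="norm")
print(f"Q-Q fit r: raw={r:.3f}, log-transformed={r_log:.3f}")

fig.tight_layout()
# Call plt.show() or fig.savefig("distributions.png") to view the figure.
```

If the Q-Q points for the raw data curve away from the line while the log-transformed data track it closely, that is a hint that a log transform may be appropriate before applying methods that assume normality.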

Correlation and Covariance: Pearson, Spearman, Kendall Coefficients

Correlation analysis reveals how variables move together. Covariance measures this joint variability in the variables' original units, while correlation rescales it to a unit-free value that is easier to compare across variable pairs. Different correlation coefficients capture different types of relationships, so choosing the right one matters.

The Pearson correlation coefficient measures the strength of linear relationships between continuous variables. It ranges from -1 to +1: values near -1 indicate a strong negative relationship, values near +1 a strong positive relationship, and values near 0 little or no linear relationship. Pearson correlation captures only linear association and is sensitive to outliers, and the usual significance tests for it assume approximately normally distributed data. Remember also that correlation does not imply causation, a fundamental concept in statistical analysis.

The Spearman correlation coefficient evaluates monotonic relationships using rank-based calculations. Because it works on ranks rather than raw values, it is more robust than Pearson correlation when dealing with non-normal distributions or ordinal data. For example, Spearman correlation effectively captures relationships in customer satisfaction ratings where responses follow ordinal scales.

Kendall’s tau is another rank-based measure, computed from the number of concordant and discordant pairs of observations. It is often preferred for small samples, and its tau-b variant adjusts for tied ranks. Like Spearman correlation, it is less affected by outliers than Pearson correlation, which makes it a reliable alternative when other correlation measures might be compromised.
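To make the difference between the three coefficients concrete, the sketch below computes all of them with `scipy.stats` on a constructed example: a relationship that is perfectly monotonic but not linear. The data is invented for illustration.

```python
import numpy as np
from scipy import stats

x = np.arange(1, 21, dtype=float)
y = x ** 3  # strictly increasing, but clearly non-linear

# Each function returns the coefficient and a p-value
pearson_r, _ = stats.pearsonr(x, y)
spearman_rho, _ = stats.spearmanr(x, y)
kendall_tau, _ = stats.kendalltau(x, y)

print(f"Pearson r    = {pearson_r:.3f}")
print(f"Spearman rho = {spearman_rho:.3f}")
print(f"Kendall tau  = {kendall_tau:.3f}")
```

Both rank-based coefficients report a perfect relationship because the ordering of `x` and `y` is identical, while Pearson's r falls short of 1 because the points do not lie on a straight line.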

Visualization Best Practices: Matplotlib, Seaborn, Plotly

Effective data visualization transforms complex datasets into comprehensible insights. Additionally, modern Python libraries provide powerful tools for creating professional-quality visualizations that communicate findings clearly.

Matplotlib serves as the foundational plotting library in Python. Moreover, it offers extensive customization options for creating publication-ready figures. However, achieving polished visualizations often requires detailed coding and formatting specifications.

Seaborn builds upon Matplotlib to provide statistical visualization capabilities with minimal code. Furthermore, it includes built-in themes and color palettes that produce aesthetically pleasing plots automatically. Therefore, Seaborn accelerates the visualization process while maintaining professional quality standards.

Plotly produces interactive visualizations in which users can zoom, filter, and hover over data points. Its figures render in the browser, which makes it well suited to sharing insights with stakeholders.

When creating visualizations, several best practices ensure maximum impact:

- Choose appropriate chart types based on data characteristics and analytical objectives

- Maintain consistent color schemes throughout visualizations to avoid confusion

- Include clear labels and titles that explain what the visualization represents

- Remove chart junk such as unnecessary gridlines or decorative elements
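The practices above can be sketched with plain Matplotlib. The survey counts and labels below are hypothetical; the point is the pattern of a fitting chart type, a single consistent color, explicit labels, and minimal non-data ink.

```python
import matplotlib.pyplot as plt

# Hypothetical survey counts used only for illustration
products = ["A", "B", "C", "D"]
votes = [42, 67, 35, 18]

fig, ax = plt.subplots(figsize=(6, 4))

# A bar chart suits counts per category; one color avoids implying extra meaning
ax.bar(products, votes, color="tab:blue")

# Clear title and axis labels say what the chart represents
ax.set_title("Preferred product among survey respondents")
ax.set_xlabel("Product")
ax.set_ylabel("Number of respondents")

# Remove chart junk: hide the top/right frame lines and keep gridlines off
ax.spines["top"].set_visible(False)
ax.spines["right"].set_visible(False)
ax.grid(False)

fig.tight_layout()
# Call plt.show() or fig.savefig("preferences.png") to view the figure.
```

The same choices carry over to Seaborn and Plotly; only the function names differ.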

Identifying Data Anomalies and Outliers

Outlier detection plays a critical role in maintaining data quality and ensuring accurate analysis results. Moreover, different types of anomalies require specific identification and handling strategies to prevent them from skewing analytical conclusions.

Statistical methods for outlier detection include z-score analysis and interquartile range (IQR) calculations. The z-score method flags data points that lie far from the mean, typically more than two or three standard deviations away. The IQR method flags values that fall below Q1 - 1.5×IQR or above Q3 + 1.5×IQR, where IQR = Q3 - Q1.
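Both rules take only a few lines of NumPy. The sketch below uses a small invented sample with one extreme value, a z-score threshold of 3, and the conventional 1.5 multiplier for the IQR fences; these thresholds are conventions, not fixed requirements.

```python
import numpy as np

# Invented sample with one obvious extreme value
values = np.array([10, 12, 11, 13, 12, 11, 10, 12, 13, 11, 12, 95], dtype=float)

# z-score rule: distance from the mean in units of standard deviation
z_scores = (values - values.mean()) / values.std()
z_outliers = values[np.abs(z_scores) > 3]

# IQR rule: flag values outside the fences Q1 - 1.5*IQR and Q3 + 1.5*IQR
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
iqr_outliers = values[(values < lower_fence) | (values > upper_fence)]

print("z-score outliers:", z_outliers)
print("IQR outliers:", iqr_outliers)
```

Note that the extreme value itself inflates the mean and standard deviation used by the z-score rule, so in small samples the IQR rule, which relies on quartiles, is often the more dependable of the two.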

Visual inspection through scatter plots, box plots, and histograms often reveals outliers that statistical methods miss, such as points that look unremarkable on each variable separately but are unusual in combination. Visual methods therefore complement statistical approaches for robust anomaly detection.

Domain expertise remains crucial for determining whether outliers represent genuine anomalies or valuable extreme cases. For instance, in financial data, extremely high transaction amounts might indicate fraud or legitimate high-value purchases. Consequently, business context helps differentiate between data errors and meaningful outliers.

Handling strategies for outliers include removal, transformation, or separate analysis, depending on their nature and impact. Transformations such as logarithmic scaling reduce the influence of extreme values without discarding any observations. Choosing the treatment deliberately, rather than by default, keeps outliers from distorting conclusions.
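As a small illustration of the transformation option, the sketch below applies `np.log1p` to a hypothetical series of transaction amounts and compares skewness before and after. The data is invented, and `log1p` (the log of 1 + x) is just one reasonable choice for non-negative values.

```python
import numpy as np
import pandas as pd

# Hypothetical transaction amounts with one very large value
amounts = pd.Series([20, 35, 40, 55, 60, 80, 120, 5000], dtype=float)

# log1p compresses large values far more than small ones; no rows are dropped
log_amounts = np.log1p(amounts)

skew_before = amounts.skew()
skew_after = log_amounts.skew()

print(f"skewness before: {skew_before:.2f}, after log1p: {skew_after:.2f}")
```

The transformed series keeps every observation and its rank order, but the extreme value no longer dominates summary statistics to the same degree.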

Implementing EDA in Practice

Successful EDA implementation requires systematic approaches that combine statistical analysis with domain knowledge. Moreover, following structured processes helps beginners develop good analytical habits.

Step-by-Step EDA Process

Begin by examining basic dataset characteristics using functions like df.info() and df.describe() in pandas. Then calculate descriptive statistics and create visualizations appropriate to each variable type. After that, explore relationships between pairs of variables using correlation analysis and scatter plots.

Next, examine relationships involving several variables at once. Document findings throughout the process and keep the analysis in reproducible scripts. Finally, compile the EDA findings into clear, actionable reports that serve both technical and business audiences.
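The first steps of this process might look like the following in pandas. The DataFrame and its column names (`age`, `income`, `segment`) are hypothetical stand-ins for a real dataset, which would usually be loaded from a file instead.

```python
import pandas as pd

# Hypothetical dataset standing in for real data
df = pd.DataFrame({
    "age": [23, 35, 41, 29, 52, 46],
    "income": [32000, 54000, 61000, 45000, 83000, 72000],
    "segment": ["A", "B", "B", "A", "C", "B"],
})

# Step 1: structure and data types
df.info()

# Step 2: missing values per column
missing = df.isna().sum()

# Step 3: descriptive statistics for numeric columns
summary = df.describe()

# Step 4: frequency table for a categorical column
segment_counts = df["segment"].value_counts()

# Step 5: pairwise relationships (Pearson correlation by default)
corr = df[["age", "income"]].corr()

print(summary)
print(segment_counts)
print(corr)
```

Keeping these steps in a script or notebook, rather than running them ad hoc, makes the exploration reproducible when the data is updated.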

FAQs:

- How long should the EDA process take for a typical dataset?

EDA duration varies significantly based on dataset complexity and analytical objectives. Generally, allocating 20-30% of total project time to Exploratory Data Analysis ensures thorough understanding while maintaining project momentum. However, complex datasets with multiple data sources might require extended exploration periods.

- Which correlation coefficient should I use for my analysis?

Choose Pearson correlation for linear relationships between continuous, normally distributed variables. Use Spearman correlation for monotonic relationships or ordinal data. Select Kendall’s tau for small sample sizes or when datasets contain many tied values.

- How do I decide whether to remove or keep outliers?

Outlier treatment depends on their cause and impact on analysis objectives. Remove outliers caused by data entry errors or measurement mistakes. Keep outliers that represent genuine extreme cases relevant to your analysis. Consider separate analysis for outliers when their inclusion significantly affects results.

- What’s the difference between EDA and confirmatory data analysis?

EDA focuses on discovering patterns and generating hypotheses without predetermined expectations. Confirmatory analysis tests specific hypotheses using formal statistical methods. EDA serves as the exploratory phase that informs subsequent confirmatory analysis approaches.

- Can I perform EDA on non-numerical data?

Yes, EDA applies to categorical, ordinal, and text data through appropriate techniques. Use frequency tables, bar charts, and chi-square tests for categorical variables. Apply text mining and natural language processing methods for unstructured text data analysis.

- How do I handle missing data during EDA?

First, identify missing data patterns and percentages for each variable. Then, investigate whether data is missing completely at random, at random, or not at random. Finally, choose appropriate handling methods such as deletion, imputation, or specialized missing data analysis techniques.

- What sample size is required for effective EDA?

Exploratory Data Analysis can be performed on datasets of any size, but larger samples generally provide more reliable insights. If you plan formal significance tests afterwards, a common rule of thumb is at least 30 observations per group, although the number actually needed depends on the test and the size of the effect. Even small samples can reveal valuable patterns through careful exploratory analysis.
