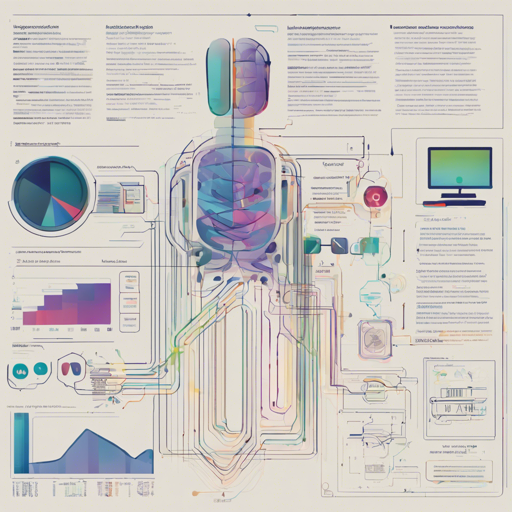

In speech recognition, fine-tuning a pre-trained model is a standard way to improve its accuracy on a specific dataset. This article walks through fine-tuning the wav2vec2-base model on Librispeech, a widely used corpus of read English audiobook speech. By the end of this tutorial, you should understand the training setup and what results to expect.

Overview of Fine-Tuning wav2vec2-base

On March 3, 2021, wav2vec2-base was fine-tuned on 100 hours of Librispeech training data. It performed well on the clean test set but considerably worse on the more challenging test-other set, which suggests the model may be overfitting to clean speech.

Setting Up the Environment

Before you start the training process, make sure your environment is set up correctly:

- The reference run used 2 Titan RTX GPUs. Other hardware works, but you may need to adjust the per-GPU batch size to fit in memory.

- Install necessary libraries such as PyTorch and Hugging Face’s Transformers.
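Before training, a quick environment check can save time. The script below is a minimal stdlib-only sketch, assuming the libraries are installed under the pip names `torch` and `transformers` and that `nvidia-smi` is on your PATH; the helper names `package_versions` and `visible_gpus` are hypothetical.

```python
import shutil
import subprocess
from importlib import metadata
from typing import Dict, List, Optional


def package_versions(names: List[str]) -> Dict[str, Optional[str]]:
    """Return the installed version of each package, or None if it is not installed."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def visible_gpus() -> List[str]:
    """Return GPU names reported by nvidia-smi, or an empty list if it cannot run."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True, text=True, timeout=30, check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    print(package_versions(["torch", "transformers"]))
    gpus = visible_gpus()
    print(f"{len(gpus)} GPU(s) visible: {gpus}")
    if len(gpus) < 2:
        # The reference run used 2 GPUs x 32 utterances; fewer GPUs means a smaller total batch.
        print("Note: fewer than 2 GPUs found; consider adjusting batch size or gradient accumulation.")
```

A missing package shows up as `None` rather than raising, so the script reports everything in one pass.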

Training Configuration

Fine-tuning used the librispeech-clean-train.100 split with the following hyper-parameters:

- Total update steps: 13,000

- Batch size: 32 utterances per GPU (64 across both GPUs), for a total batch of roughly 1,500 seconds of audio

- Optimizer: Adam, with 3,000 warmup steps followed by a linearly decaying learning rate

- Dynamic grouping: utterances of similar length are batched together to reduce padding

- 16-bit floating point (fp16) training

- No attention mask was used during training (wav2vec2-base is designed to take zero-padded input without one)
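Two of these settings are easy to misread, so here is a plain-Python sketch of each. The schedule function assumes the warmup is linear (the article states only the 3,000 warmup steps and the linear decay), and because the source gives no peak learning rate, `peak_lr` must be supplied by you. The grouping function is a simplified illustration of length-based batching in the spirit of the Hugging Face Trainer's `group_by_length` option, not its exact implementation. All function names here are hypothetical.

```python
import random
from typing import List, Sequence


def linear_warmup_decay_lr(step: int, peak_lr: float,
                           warmup_steps: int = 3_000,
                           total_steps: int = 13_000) -> float:
    """Learning rate at `step`: ramp linearly from 0 to peak_lr, then decay linearly to 0."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)


def length_grouped_batches(lengths: Sequence[float], batch_size: int = 32,
                           megabatch_mult: int = 50, seed: int = 0) -> List[List[int]]:
    """Group utterance indices into batches of similar duration to reduce padding.

    Indices are shuffled, split into "megabatches" of batch_size * megabatch_mult,
    each megabatch is sorted by length (longest first), then cut into batches.
    """
    rng = random.Random(seed)
    indices = list(range(len(lengths)))
    rng.shuffle(indices)
    megabatch_size = batch_size * megabatch_mult
    batches: List[List[int]] = []
    for start in range(0, len(indices), megabatch_size):
        megabatch = sorted(indices[start:start + megabatch_size],
                           key=lambda i: lengths[i], reverse=True)
        for b in range(0, len(megabatch), batch_size):
            batches.append(megabatch[b:b + batch_size])
    return batches


if __name__ == "__main__":
    peak = 1e-4  # hypothetical peak learning rate; the article does not state one
    for step in (0, 1_500, 3_000, 8_000, 13_000):
        print(step, linear_warmup_decay_lr(step, peak))
```

Sorting only within megabatches, rather than across the whole dataset, keeps some randomness in batch order while still cutting most of the padding.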

Understanding the Training Process

To make this process more intuitive, consider an analogy. Imagine you are a painter preparing to create a masterpiece. A seasoned artist (the pre-trained model) has already mastered general technique, but to paint your particular subject well (the target dataset) you must adapt that technique to it. Here, wav2vec2-base is the seasoned artist and Librispeech is the subject; fine-tuning is the adaptation that lets the model capture the nuances of the dataset.

Results Overview

After fine-tuning, the model was evaluated by Word Error Rate (WER, lower is better) on the two Librispeech test sets:

- test-clean WER: 6.5%

- test-other WER: 18.7%

The 12.2-point gap between the two test sets suggests the model may be overfitting to the clean training data.
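WER counts the word substitutions (S), deletions (D) and insertions (I) needed to turn a hypothesis into the reference, divided by the number of reference words N: WER = (S + D + I) / N. The sketch below computes it at corpus level using word-level edit distance. It assumes transcripts are already normalized (same casing, no punctuation) and split on whitespace; the function names are hypothetical.

```python
from typing import List, Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Minimum substitutions + deletions + insertions turning `ref` into `hyp`."""
    prev = list(range(len(hyp) + 1))  # distances for an empty reference prefix
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,          # delete ref[i-1]
                          curr[j - 1] + 1,      # insert hyp[j-1]
                          prev[j - 1] + cost)   # match or substitute
        prev = curr
    return prev[-1]


def corpus_wer(references: List[str], hypotheses: List[str]) -> float:
    """Corpus-level WER in percent: total word errors / total reference words."""
    if len(references) != len(hypotheses):
        raise ValueError("references and hypotheses must have the same length")
    errors = 0
    words = 0
    for ref, hyp in zip(references, hypotheses):
        ref_words, hyp_words = ref.split(), hyp.split()
        errors += edit_distance(ref_words, hyp_words)
        words += len(ref_words)
    if words == 0:
        raise ValueError("references contain no words")
    return 100.0 * errors / words
```

For example, `corpus_wer(["the cat sat on the mat"], ["the cat sit on mat"])` is about 33.3: one substitution plus one deletion over six reference words. Summing errors across utterances before dividing, rather than averaging per-utterance WERs, keeps long utterances from being under-weighted.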

Troubleshooting Tips

If you encounter any issues during the fine-tuning process, here are some troubleshooting steps:

- Ensure that your GPU setup is configured properly. Use `nvidia-smi` to check available GPUs.

- Double-check your dataset and hyper-parameters to make sure they match what was outlined.

- Monitor for any environment-related issues, especially with package versions.
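To make the hyper-parameter check mechanical, you could keep your settings in a dictionary and diff it against the values listed in this article. This is a minimal sketch; the key names are hypothetical labels chosen for illustration, not an official configuration schema of any library.

```python
from typing import Any, Dict, Tuple

# Values from the article's training configuration; key names are hypothetical.
REFERENCE_CONFIG: Dict[str, Any] = {
    "train_split": "librispeech-clean-train.100",
    "num_gpus": 2,
    "per_gpu_batch_size": 32,
    "total_update_steps": 13_000,
    "warmup_steps": 3_000,
    "optimizer": "adam",
    "group_by_length": True,
    "fp16": True,
    "use_attention_mask": False,
}


def config_mismatches(yours: Dict[str, Any],
                      reference: Dict[str, Any] = REFERENCE_CONFIG) -> Dict[str, Tuple[Any, Any]]:
    """Map each differing or missing key to (your_value, reference_value)."""
    diffs: Dict[str, Tuple[Any, Any]] = {}
    for key, expected in reference.items():
        actual = yours.get(key, "<missing>")
        if actual != expected:
            diffs[key] = (actual, expected)
    return diffs
```

For instance, `config_mismatches(dict(REFERENCE_CONFIG, per_gpu_batch_size=16))` returns `{'per_gpu_batch_size': (16, 32)}`, pointing straight at the setting that differs.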

Conclusion

Fine-tuning wav2vec2-base on Librispeech highlights the gap between performance on clean speech and on more challenging speech. Understanding the hyper-parameters and the results can help you set realistic expectations for your own speech recognition projects.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.