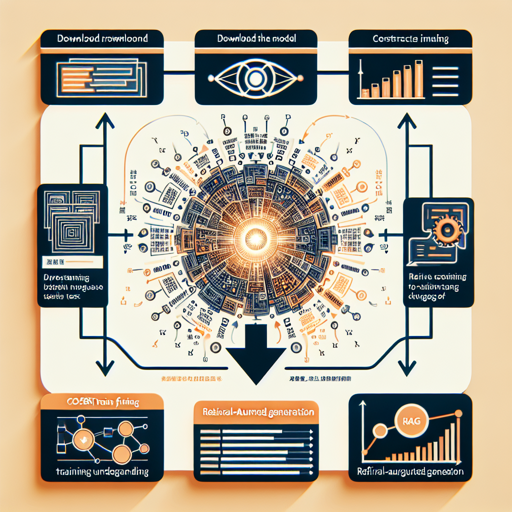

Measuring how similar two pieces of text are is a crucial tool in natural language processing (NLP). In this blog, we’re diving into a Chinese sentence-embedding model trained with the CoSENT framework and using it to assess similarity between Chinese texts. Let’s explore the steps involved in downloading the model, comparing sentence similarity, and building a simple retrieval-augmented generation (RAG) system!

Overview

This model, `Mike0307/text2vec-base-chinese-rag`, turns Chinese sentences into embedding vectors. It was trained with the **CoSENT** framework and is meant to serve as the retrieval component of a **Retrieval-Augmented Generation (RAG)** pipeline. Below, we’ll walk you through setting it up and using it step by step.
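For readers curious about what CoSENT optimizes: it is a ranking loss over cosine similarities. Every pair of training examples (i, j) where example i is labeled more similar than example j adds a penalty that grows as the model's cosine score for j approaches or exceeds the score for i. The plain-Python sketch below computes that loss, assuming the commonly cited scale factor of 20. It is an illustration of the technique, not the training code used for this model.

```python
import math
from typing import Sequence


def cosent_loss(cos_scores: Sequence[float], labels: Sequence[float], scale: float = 20.0) -> float:
    """CoSENT ranking loss:
    log(1 + sum over (i, j) with labels[i] > labels[j] of exp(scale * (cos_scores[j] - cos_scores[i]))).

    cos_scores[k] is the model's cosine similarity for sentence pair k, and
    labels[k] is that pair's gold similarity label. scale=20 is an assumption
    (the value commonly used with CoSENT).
    """
    if len(cos_scores) != len(labels):
        raise ValueError("cos_scores and labels must have the same length")
    # Exponents inside the sum; the leading 0.0 stands for the "1 +" term (exp(0) = 1).
    exponents = [0.0]
    for i, label_i in enumerate(labels):
        for j, label_j in enumerate(labels):
            if label_i > label_j:
                # Penalize when the less-similar pair j scores close to (or above) pair i.
                exponents.append(scale * (cos_scores[j] - cos_scores[i]))
    # Numerically stable log-sum-exp.
    largest = max(exponents)
    return largest + math.log(sum(math.exp(e - largest) for e in exponents))


# Pair 0 is labeled similar (1), pair 1 dissimilar (0).
print(cosent_loss([0.9, 0.1], [1, 0]))  # correct ordering: loss close to 0
print(cosent_loss([0.1, 0.9], [1, 0]))  # wrong ordering: loss close to 16
```

Only the relative order of the scores matters to this loss, not their absolute values. That fits retrieval, where documents are ranked against a query.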

Download the Model

To get started, you’ll need to download the model. Here’s how to do it:

```python
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("Mike0307/text2vec-base-chinese-rag")
model = AutoModel.from_pretrained("Mike0307/text2vec-base-chinese-rag")
```

Example of Similarity Comparison

Now that you have the model ready, let’s write a function to compare the similarity between two sentences. Think of this process like comparing two different paintings to see how similar they are in style and color!

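```python
import torch

def mean_pooling(model_output, attention_mask):
    # model_output[0] is the last hidden state: (batch, seq_len, hidden_size)
    token_embeddings = model_output[0]
    # Broadcast the attention mask over the hidden dimension so padding tokens contribute 0
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    # Sum the real-token embeddings and divide by the number of real tokens
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

sentences = [
    # "Which record label does Mai Fukui belong to?"
    "福井舞所屬哪家唱片公司?",
    # A passage about singer Mai Fukui that names her record label
    "23歲時出道、血型A型的福井舞是出身於京都的日本女創作歌手,所屬唱片公司為J-more。2004年,與WADAGAKI、SHINO組合地下音樂隊Poplar,發表了兩張專輯,天照和夢死物語。在2006年時退出,2007年10月加入了Avex獨立發展。"
]

encode_output = tokenizer(sentences, padding=True, truncation=True, return_tensors="pt", max_length=512)

with torch.no_grad():  # inference only, so skip gradient tracking
    model_output = model(**encode_output)

embeddings = mean_pooling(model_output, encode_output['attention_mask'])
similarity = torch.cosine_similarity(embeddings[0], embeddings[1], dim=0)
print(similarity.item())  # a float in [-1, 1]; higher means more similar
```

In this snippet, `mean_pooling` averages each sentence’s token embeddings into a single vector and skips padding tokens. Cosine similarity then measures how closely the two vectors point in the same direction. Just like analyzing two musical compositions, we examine their harmony or dissonance!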
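To make the two steps concrete without downloading anything, here is a dependency-free sketch of the same math on toy 2-D vectors. The helpers `mean_pool` and `cosine_similarity` are our own illustrative names, not functions from `transformers` or PyTorch. They compute on plain lists what the PyTorch code above computes on tensors.

```python
import math
from typing import List


def mean_pool(token_vectors: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Average the token vectors whose attention-mask entry is 1 (padding is ignored)."""
    dim = len(token_vectors[0])
    totals = [0.0] * dim
    count = 0
    for vector, keep in zip(token_vectors, attention_mask):
        if keep:
            for d in range(dim):
                totals[d] += vector[d]
            count += 1
    # Guard against an all-padding row, mirroring torch.clamp(..., min=1e-9).
    count = max(count, 1e-9)
    return [t / count for t in totals]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "token embeddings": the last token of sentence A is padding and must not affect the mean.
sentence_a = mean_pool([[2.0, 4.0], [4.0, 4.0], [100.0, 100.0]], [1, 1, 0])
sentence_b = mean_pool([[6.0, 8.0]], [1])
print(sentence_a, sentence_b)                     # [3.0, 4.0] [6.0, 8.0]
print(cosine_similarity(sentence_a, sentence_b))  # 1.0 (same direction, different length)
```

Cosine similarity ignores vector length, which is why `[3.0, 4.0]` and `[6.0, 8.0]` count as identical in meaning here.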

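RAG with LangChain

Retrieval-augmented generation builds on the embedding model: retrieved passages are handed to a language model as context for its answer. Install the necessary packages (`HuggingFaceEmbeddings` needs `sentence-transformers`, and the FAISS vector store needs `faiss-cpu`):

```bash
pip install --upgrade --quiet langchain langchain-community sentence-transformers faiss-cpu
```

1. Use This Embedding Model to Build a Retriever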

Here’s how to wrap the model you downloaded as a LangChain embedding object, the first ingredient of a retriever:

```python
from langchain_community.embeddings.huggingface import HuggingFaceEmbeddings

model_name = "Mike0307/text2vec-base-chinese-rag"
embeddings = HuggingFaceEmbeddings(model_name=model_name)
```

An Example of a Retriever with Meta FAISS Vectorstore
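Conceptually, a retriever embeds the query and returns the k stored documents whose vectors lie closest to it. The plain-Python sketch below performs that search exhaustively, with toy vectors standing in for real embeddings. It uses Euclidean distance because, to our understanding, LangChain's FAISS wrapper defaults to it. For unit-length vectors, this gives the same ranking as cosine similarity. The function name `top_k` and the data are hypothetical, and `math.dist` needs Python 3.8+.

```python
import math
from typing import List, Sequence, Tuple


def top_k(query: Sequence[float], docs: List[Tuple[str, Sequence[float]]], k: int = 1) -> List[str]:
    """Return the texts of the k documents closest to `query` by Euclidean distance.

    `docs` is a list of (text, embedding) pairs. This is an exhaustive search,
    the same idea as a flat (non-approximate) FAISS index.
    """
    scored = sorted(docs, key=lambda item: math.dist(query, item[1]))
    return [text for text, _ in scored[:k]]


# Toy 2-D vectors standing in for real sentence embeddings.
store = [
    ("doc about the sacred ibis", [0.9, 0.1]),
    ("doc about technology", [0.1, 0.9]),
]
print(top_k([0.8, 0.2], store, k=1))  # ['doc about the sacred ibis']
```

FAISS performs the same kind of nearest-neighbor lookup with optimized native code, which matters once the store holds many documents.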

Here’s a snippet that stores documents in a FAISS vector store using these embeddings and exposes it as a retriever:

```python
from langchain.schema import Document
from langchain_community.vectorstores.faiss import FAISS

documents = [
    Document(page_content="埃及聖䴉(學名:Threskiornis aethiopicus)..."),
    Document(page_content="隨著科技的不斷發展和革新...")
]

# Embed every document and build an in-memory FAISS index
db = FAISS.from_documents(documents, embeddings)

# Return only the single closest document for each query
retriever = db.as_retriever(search_kwargs={"k": 1})

retriever.invoke("福井舞所屬哪家唱片公司?")  # returns a list holding the closest Document
```

2. Use a Hugging Face LLM as a Custom LangChain LLM

Sometimes you won’t want to rely on external APIs. Here’s how to set up a Hugging Face LLM locally:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

llm_id = "Mike0307/Phi-3-mini-4k-instruct-chinese-lora"

# device_map="mps" targets Apple Silicon GPUs; use "cuda" on NVIDIA GPUs or "cpu" otherwise.
# Passing device_map requires the accelerate package.
model = AutoModelForCausalLM.from_pretrained(llm_id, device_map="mps", torch_dtype=torch.float32)
tokenizer = AutoTokenizer.from_pretrained(llm_id)
```

With these, you can wrap the model and tokenizer in a custom LangChain LLM class (called `CustomLLM` in the next step). Make sure the setup matches your hardware, like a tailored outfit that fits just right!
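The article does not show `CustomLLM` itself. In LangChain, a custom LLM typically subclasses `langchain_core.language_models.llms.LLM`, implements `_call(prompt, stop=None, ...)`, and provides an `_llm_type` property. The dependency-free sketch below only mimics that shape so the logic can be run in isolation. `SketchLLM` and `generate_fn` are hypothetical names: `generate_fn` stands in for tokenizing the prompt, calling `model.generate`, and decoding the result. This is not the author's implementation.

```python
from typing import Callable, List, Optional


class SketchLLM:
    """Hypothetical stand-in for a LangChain custom LLM wrapper (no LangChain needed)."""

    def __init__(self, generate_fn: Callable[[str], str]):
        # generate_fn(prompt) returns the prompt followed by the model's continuation,
        # as decoding the full output of a decoder-only model usually does.
        self.generate_fn = generate_fn

    @property
    def _llm_type(self) -> str:
        return "custom-sketch"

    def _call(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        full_text = self.generate_fn(prompt)
        # Keep only what the model added after the prompt.
        completion = full_text[len(prompt):] if full_text.startswith(prompt) else full_text
        # Truncate at the earliest stop sequence, if any appears.
        for token in stop or []:
            index = completion.find(token)
            if index != -1:
                completion = completion[:index]
        return completion.strip()


def fake_generate(prompt: str) -> str:
    """Toy generator for this demo: echoes the prompt, answers "It is a kind of bird.", then rambles."""
    return prompt + " 它是一種鳥類。<|end|>多餘的文字"


llm = SketchLLM(fake_generate)
print(llm._call("<|user|>埃及聖䴉是什麼? <|end|>\n<|assistant|>", stop=["<|end|>"]))  # 它是一種鳥類。
```

Stripping the echoed prompt matters because `generate` on a decoder-only model returns the input tokens followed by the new ones.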

3. Make a Simple RAG Chain

Finally, let’s use a prompt and build a simple RAG chain.
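The chain in this step sends the query through a dict with two branches (the question passed through unchanged, and the retriever's results). It then fills the prompt and hands the finished string to the LLM. The sketch below spells out that data flow with plain functions. `make_rag`, `toy_retriever`, and `toy_llm` are hypothetical stand-ins for the LangChain pieces. The template string is the same Phi-3 format used in this step.

```python
from typing import Callable, List

TEMPLATE = "<|user|>{documents}\n{question} <|end|>\n<|assistant|>"


def make_rag(retrieve: Callable[[str], List[str]], generate: Callable[[str], str]) -> Callable[[str], str]:
    """Compose retrieve -> fill prompt -> generate, mirroring the LangChain chain."""

    def rag(question: str) -> str:
        documents = "\n".join(retrieve(question))      # the "documents" branch
        prompt = TEMPLATE.format(documents=documents,  # the prompt step
                                 question=question)    # "question" is passed through unchanged
        return generate(prompt)                        # the llm step

    return rag


def toy_retriever(question: str) -> List[str]:
    # Hypothetical single retrieved passage: "The sacred ibis is a bird."
    return ["埃及聖䴉是一種鳥。"]


def toy_llm(prompt: str) -> str:
    # Echoes the prompt so we can inspect exactly what the LLM would receive.
    return prompt


rag = make_rag(toy_retriever, toy_llm)
print(rag("埃及聖䴉是什麼?"))
```

Swapping `toy_llm` for a real model wrapper changes only the last step. This separation is what the `|` composition in LangChain provides.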

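```python
from langchain.globals import set_debug
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnablePassthrough

set_debug(True)  # print every step of the chain while it runs

# Phi-3 chat format: the retrieved documents and the question go in the user turn
prompt = PromptTemplate.from_template(template="<|user|>{documents}\n{question} <|end|>\n<|assistant|>")

# CustomLLM is the LangChain wrapper around the Phi-3 model and tokenizer from step 2
# (its definition is not shown in this article)
llm = CustomLLM(model, tokenizer)

rag = {"question": RunnablePassthrough(), "documents": retriever} | prompt | llm

query = "埃及聖䴉是什麼?"  # "What is the sacred ibis?"
result = rag.invoke(query)  # the generated answer
```

Troubleshooting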

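- If model downloads fail, check that your internet connection is stable and that you can reach the Hugging Face Hub.

- Check your Python environment and make sure the dependencies are installed: `torch`, `transformers`, `accelerate`, `langchain`, `langchain-community`, `sentence-transformers`, and `faiss-cpu`.

- A device setting that doesn’t match your hardware causes errors: `device_map="mps"` only works on Apple Silicon, so switch to `"cuda"` or `"cpu"` as needed.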
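A quick way to run the dependency check above is to ask Python whether each package can be found, without importing it. The sketch below uses the standard-library `importlib.util.find_spec`. The module list is our assumption, based on the packages used in this article, and gives import names, which differ from some pip names (for example `faiss-cpu` installs `faiss`).

```python
import importlib.util
from typing import Dict, Iterable

# Import names (not pip names) of the packages used in this article; this list is an assumption.
REQUIRED = ["torch", "transformers", "accelerate", "langchain", "langchain_community",
            "sentence_transformers", "faiss"]


def check_imports(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be found in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


for name, found in check_imports(REQUIRED).items():
    print(f"{name:24} {'ok' if found else 'MISSING'}")
```

Run it inside the same virtual environment or notebook kernel you use for the model, since each environment has its own installed packages.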

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

By following these steps, you can use a CoSENT-trained embedding model to measure text similarity and plug it into a RAG pipeline. Remember, this is just the tip of the iceberg, and there are many other methods to explore!

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.