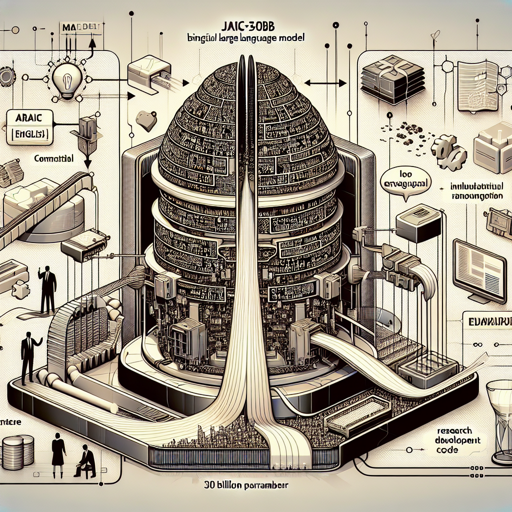

Welcome to the world of Jais-30b, a 30-billion-parameter bilingual large language model (LLM) built for Arabic and English. The model was pre-trained on a dataset containing 126 billion Arabic tokens, 251 billion English tokens, and 50 billion code tokens, and its architecture is designed for better context handling and precision on long inputs. Whether you’re a researcher, developer, or commercial user, this guide will help you get started with Jais-30b.
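To put the data mix in proportion, the token counts above can be turned into shares of the pre-training data. This is plain arithmetic on the stated figures (in billions of tokens), not extra information about how the data was sampled during training.

```python
# Pre-training token counts stated for Jais-30b, in billions of tokens.
mix = {"Arabic": 126, "English": 251, "code": 50}

total = sum(mix.values())  # 427 billion tokens in all
shares = {name: count / total for name, count in mix.items()}

for name, share in shares.items():
    print(f"{name:>7}: {share:.1%}")
```

Roughly 30% of the tokens are Arabic, close to 59% are English, and about 12% are code.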

What Makes Jais-30b Stand Out?

Jais-30b uses a transformer-based, decoder-only architecture similar to GPT-3, with the SwiGLU non-linearity in its feed-forward layers. One of its most significant features is ALiBi (Attention with Linear Biases) position embeddings. Instead of adding learned position vectors to the input, ALiBi subtracts a penalty from each attention score that grows linearly with the distance between the query token and the key token. This lets the model extrapolate to sequences longer than those it saw in training, improving its context handling. Think of a reader who naturally pays the most attention to the sentences just read, yet can still look further back in the text when needed.
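To make the two architectural ideas above concrete, here is a plain-Python sketch of the published ALiBi and SwiGLU formulas. It assumes a power-of-two number of attention heads (the case where the ALiBi slopes form a simple geometric sequence) and uses tiny hand-written weights. All function names are hypothetical, and this is not Jais’s own modeling code, which is fetched from the model repository when `trust_remote_code=True` is set.

```python
import math

# Hypothetical helpers illustrating the formulas; assumes a power-of-two head count.


def alibi_slopes(num_heads):
    """ALiBi head slopes for a power-of-two head count: 2^(-8/n), 2^(-16/n), ..."""
    start = 2 ** (-8 / num_heads)
    return [start ** (h + 1) for h in range(num_heads)]


def alibi_bias(seq_len, slope):
    """Causal bias for one head: -slope * distance to the key, -inf for future keys."""
    return [
        [-slope * (i - j) if j <= i else -math.inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]  # exp(-inf) == 0.0, so future keys get no weight
    total = sum(exps)
    return [e / total for e in exps]


def silu(x):
    """SiLU (Swish), x * sigmoid(x), written to avoid overflow for large |x|."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def swiglu(x, w_gate, w_up):
    """SwiGLU(x) = SiLU(x @ w_gate) * (x @ w_up), elementwise, without bias terms."""
    def matvec(vec, mat):
        return [sum(v * mat[k][c] for k, v in enumerate(vec)) for c in range(len(mat[0]))]

    gate = [silu(g) for g in matvec(x, w_gate)]
    up = matvec(x, w_up)
    return [g * u for g, u in zip(gate, up)]


# With all raw attention scores equal (zero), the ALiBi bias alone sets the weights.
slope = alibi_slopes(8)[0]  # 0.5 for the first of 8 heads
bias = alibi_bias(4, slope)
print(softmax(bias[3]))  # last query: nearer keys receive more weight
print(swiglu([1.0, 0.0], [[1.0], [0.0]], [[2.0], [0.0]]))
```

The last query’s weights rise toward the most recent key, which is the recency bias ALiBi relies on. Because the penalty depends only on distance, the same formula applies unchanged at any sequence length.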

How to Get Started

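To run Jais-30b, you’ll need a Python environment with PyTorch and Hugging Face transformers. The sample code below loads the model and completes one Arabic and one English prompt. Jais uses custom model classes, so the model must be loaded with `trust_remote_code=True`, and the code was tested with transformers 4.32.0, so please use that version. Loading with `device_map='auto'` also requires the accelerate package to be installed.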

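```python
# -*- coding: utf-8 -*-
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

model_path = 'core42/jais-30b-v1'
device = 'cuda' if torch.cuda.is_available() else 'cpu'

tokenizer = AutoTokenizer.from_pretrained(model_path)
# Jais ships custom model classes, so remote code must be trusted.
# device_map='auto' (requires accelerate) places the weights on the available devices.
model = AutoModelForCausalLM.from_pretrained(model_path, device_map='auto', trust_remote_code=True)


def get_response(text, tokenizer=tokenizer, model=model):
    # Tokenize the prompt and move the token IDs to the target device.
    input_ids = tokenizer(text, return_tensors='pt').input_ids
    inputs = input_ids.to(device)
    input_len = inputs.shape[-1]
    generate_ids = model.generate(
        inputs,
        top_p=0.9,                 # nucleus sampling: sample only from the top 90% of probability mass
        temperature=0.3,           # values below 1 sharpen the distribution toward likely tokens
        max_length=200,            # total length, prompt tokens included
        min_length=input_len + 4,  # force at least 4 new tokens
        repetition_penalty=1.2,    # discourage repeating tokens that already appeared
        do_sample=True,            # sample instead of greedy decoding
    )
    # Decode the first (and only) sequence; the decoded text includes the prompt.
    response = tokenizer.batch_decode(
        generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=True
    )[0]
    return response


# Arabic prompt, roughly: "The capital of the United Arab Emirates is"
text = 'عاصمة دولة الإمارات العربية المتحدة ه'
print(get_response(text))

text = 'The capital of UAE is'
print(get_response(text))
```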
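The length arguments in `get_response` are easy to misread: in transformers, `max_length` and `min_length` count the prompt tokens as well as the generated ones. The hypothetical helper below works out how many new tokens the sample settings allow for a given prompt length; it only mirrors the arithmetic and does not call the library.

```python
def generation_budget(input_len, max_length=200, min_new_tokens=4):
    """Hypothetical helper: (min_new, max_new) tokens that generate() may add.

    max_length includes the prompt, so the room for new tokens shrinks as the
    prompt grows; min_length=input_len + 4 asks for 4 extra tokens whenever
    there is room for them.
    """
    max_new = max(max_length - input_len, 0)
    return min(min_new_tokens, max_new), max_new


print(generation_budget(10))   # short prompt: plenty of room
print(generation_budget(198))  # long prompt: only 2 new tokens fit
```

If you would rather fix the number of new tokens regardless of prompt length, `generate()` also accepts `max_new_tokens`.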

Code Walkthrough: An Analogy

Think of using Jais-30b as preparing a gourmet meal. Each ingredient (or line of code) contributes to the final dish:

- The `import` statements are like gathering all your ingredients from the pantry. You need to have everything ready before you start cooking.

- The tokenizer is your sous-chef, chopping and prepping the text so that it can be used efficiently. It converts human language into token IDs, the format the model can digest.

- The model itself is the master chef, combining all the prepared ingredients (the input token IDs) while adjusting flavors through sampling parameters like `top_p` and `temperature` to serve a delectable response.

- Finally, plating the dish with `print(get_response(text))` is like serving the meal to your guests, ready for their feedback!
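The “flavor” parameters can be made concrete. The sketch below applies temperature scaling and then nucleus (top-p) filtering to a made-up list of logits, the two steps that `temperature=0.3` and `top_p=0.9` control in the sample code. It is a conceptual illustration with a hypothetical helper, not the exact implementation inside transformers’ `generate()`.

```python
import math
import random


def sample_top_p(logits, temperature=0.3, top_p=0.9, rng=random):
    """Hypothetical helper: temperature scaling, then nucleus (top-p) sampling.

    Returns (chosen_token_id, kept_token_ids).
    """
    # 1. Temperature: divide the logits; values below 1 sharpen the distribution.
    scaled = [value / temperature for value in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # 2. Nucleus: keep the most likely tokens until their cumulative probability reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # 3. Renormalize over the kept tokens and draw one token ID.
    kept_mass = sum(probs[i] for i in kept)
    weights = [probs[i] / kept_mass for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0], kept


rng = random.Random(0)
logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
print(sample_top_p(logits, temperature=0.3, top_p=0.9, rng=rng))  # kept set is [0]
print(sample_top_p(logits, temperature=1.0, top_p=0.9, rng=rng))  # kept set is [0, 1, 2]
```

At `temperature=0.3` the top token alone carries more than 90% of the probability, so the output is nearly deterministic; at `temperature=1.0` three candidates survive the top-p cut, giving more varied text.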

Troubleshooting and FAQs

Like any sophisticated tool, Jais-30b may present some challenges. Here are common issues and how to address them:

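- Issue: The model doesn’t load, or raises an error about remote code.

- Solution: Make sure you pass `trust_remote_code=True` when loading the model. Jais relies on custom model classes stored in its model repository, and this flag allows transformers to download and run that code.

- Issue: The output is not in the expected language or format.

- Solution: Check that the prompt is well formed and written in Arabic or English. Jais-30b-v1 is a pre-trained base model that continues the text it receives, so phrase the prompt as text to be completed (like the examples above) and write it in the language you want the answer in.

- Issue: Generation is slow, or the process crashes.

- Solution: Make sure your hardware can hold the model. With 30 billion parameters, the weights alone take roughly 120 GB in 32-bit precision and roughly 60 GB in 16-bit precision (for example, when loading with `torch_dtype=torch.float16`). If you are running on CPU, check that you have enough RAM; on GPU, check the combined memory of your cards.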

- Need help? For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
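The memory figures in the troubleshooting list come from a simple back-of-the-envelope calculation. The sketch below assumes a nominal 30 billion parameters and counts only the weights; activations, the generation cache, and framework overhead come on top. The helper name is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Hypothetical helper: memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 30e9  # nominal parameter count of Jais-30b (assumption: rounded figure)

for label, nbytes in [("float32", 4), ("float16/bfloat16", 2)]:
    print(f"{label:>16}: ~{weight_memory_gb(PARAMS, nbytes):.0f} GB")
```

Halving the bytes per parameter halves the footprint, which is why loading in 16-bit precision is usually the first thing to try when memory runs out.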

Conclusion

Jais-30b is a powerful tool for generating text in Arabic and English, suited for research, commercial applications, and more. By understanding its capabilities and properly implementing the setup, you can leverage this model to its fullest potential.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.