In the world of artificial intelligence, particularly in natural language processing (NLP), merging models to improve context handling is akin to blending fine ingredients in the kitchen to create a culinary masterpiece. Today, we’ll explore the technique of merging various AI models, like the Epiculous Fett-uccine and Mistral models, specifically designed for context-rich tasks.

Understanding the Merge Process

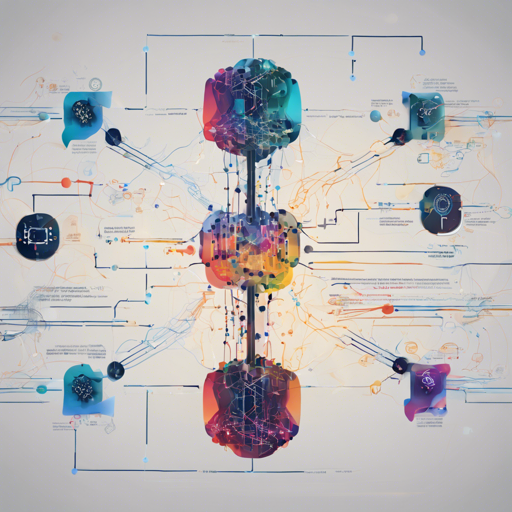

The merge process helps AI models realize their full potential by combining strengths from different models. Imagine this scenario: You have a fantastic recipe for pasta (the Epiculous model) and another for a savory sauce (the Mistral model). By merging the two, you create a delightful dish that utilizes the best features of each. This is essentially what we are trying to achieve by merging models in AI.

Your Recipe for Success: Step-by-Step

- Step 1: Gather Your Models

Select the models that you want to work with. For our task, we’ll focus on:

- EpiculousFett-uccine-Long-Noodle-7B-120k-Context

- Mistral-7B-Instruct-v0.2

- Kunocchini-7b-128k-test

- Neural-Story

- MoreHuman

- Step 2: Initiate the Merge

Using your preferred framework, follow the instructions provided to combine the models. This typically involves specifying the weights or the architecture of the models in the merge process.

- Step 3: Test the Merged Model

Once merged, it’s important to run tests to ensure that the new model performs well on various tasks. Think of this as sampling your dish before serving it to guests, so you can still adjust the seasoning.
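To make Step 2 concrete, here is a minimal sketch of one common merge strategy: a weighted average of matching parameters. It is a toy illustration, not the method used for the models listed above. Each "model" is a plain dictionary of parameter name to a flat list of numbers, and the names `linear_merge`, `model_a`, and `model_b` are hypothetical. A real merge would run on full checkpoints through your chosen framework.

```python
from typing import Dict, List

# Toy stand-in for a checkpoint: parameter name -> flat list of values.
StateDict = Dict[str, List[float]]


def linear_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Return the weighted average of parameters shared by all models."""
    if not models or len(models) != len(weights):
        raise ValueError("provide exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the merged values stay on the same scale as the inputs.
    norm = [w / total for w in weights]

    reference = models[0]
    for other in models[1:]:
        if set(other) != set(reference):
            raise ValueError("models do not share the same parameter names")

    merged: StateDict = {}
    for name, ref_values in reference.items():
        if any(len(m[name]) != len(ref_values) for m in models):
            raise ValueError(f"size mismatch for parameter: {name}")
        merged[name] = [
            sum(w * m[name][i] for w, m in zip(norm, models))
            for i in range(len(ref_values))
        ]
    return merged


# Hypothetical two-parameter "models", merged with equal weights.
model_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
model_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = linear_merge([model_a, model_b], weights=[0.5, 0.5])
print(merged)
```

Changing the weights shifts the result toward one parent or the other, which is the "seasoning" you adjust after tasting in Step 3.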

Understanding the Model Family Tree

Each model in our family tree descends from earlier models. For instance, the Epiculous model is merged with Mistral Instruct with the goal of handling context smoothly across the range from 32k to 128k tokens. Picture it as a family where each generation inherits traits from its ancestors, ideally making the next one stronger and more capable!

Troubleshooting Your Merging Journey

Even the best chefs encounter issues in the kitchen. Here are some common challenges you might face when merging models and how to address them:

- Conflicting parameters: Ensure that the models share compatible architectures; otherwise, your merge may not work as intended.

- Performance drop: If your new model performs worse than the models it was built from, try adjusting the weights used in the merge, tuning the training parameters, or retraining parts of the model.

- Insufficient Context Handling: Check whether you’ve merged the right components from the respective models. Some layers may need careful selection.
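The first item on this list can be caught before any merging happens. The sketch below compares parameter names and shapes between two models and reports every mismatch. It assumes you have already extracted a name-to-shape mapping from each checkpoint. The function `find_conflicts` and the parameter names and sizes in the example are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Parameter name -> tensor shape, as extracted from a checkpoint.
Shapes = Dict[str, Tuple[int, ...]]


def find_conflicts(first: Shapes, second: Shapes) -> List[str]:
    """List parameters that are missing from one model or differ in shape."""
    problems: List[str] = []
    for name in sorted(set(first) | set(second)):
        if name not in first:
            problems.append(f"{name}: missing from first model")
        elif name not in second:
            problems.append(f"{name}: missing from second model")
        elif first[name] != second[name]:
            problems.append(f"{name}: shape {first[name]} vs {second[name]}")
    return problems


# Hypothetical shapes: the second model has two extra vocabulary entries.
base = {
    "embed.weight": (32000, 4096),
    "layers.0.attn.q_proj.weight": (4096, 4096),
}
other = {
    "embed.weight": (32002, 4096),
    "layers.0.attn.q_proj.weight": (4096, 4096),
}
conflicts = find_conflicts(base, other)
for line in conflicts:
    print(line)
```

An empty result means the two models line up parameter for parameter. Anything else is worth resolving before you start mixing.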

Conclusion

By mastering the art of model merging, you can significantly enhance the NLP capabilities of AI systems. Just like a chef fine-tuning a recipe, your skills in these processes will yield a model that delivers outstanding results.