Welcome! In this blog, we will walk you through the setup, code implementation, and troubleshooting tips you need to load a pre-trained PPO agent for the LunarLander-v2 environment and evaluate it with the Stable-Baselines3 library.

What You Need to Know

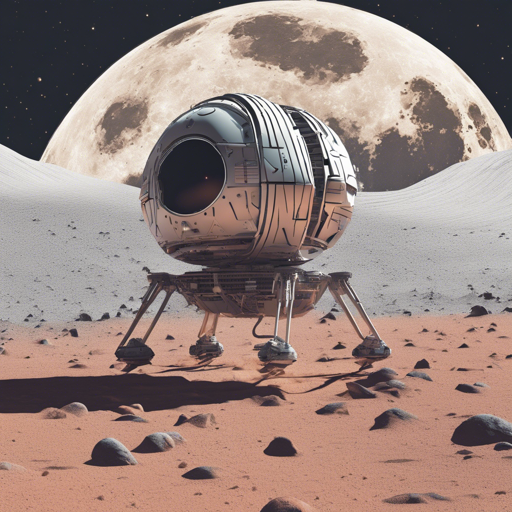

Proximal Policy Optimization (PPO) is a general-purpose reinforcement learning algorithm, and LunarLander-v2 is one of the tasks it handles well. The environment simulates a spacecraft that the agent must steer to a safe landing on the moon by firing its engines. The goal is to maximize the reward earned for a soft, precise landing, much like a skilled pilot would. Now let’s learn how to set things up.

Setup and Installation

Before diving into the model usage, you need to install the necessary libraries. Here’s how you can get started:

- Install Stable-Baselines3 and the Hugging Face integration:

```shell
pip install stable-baselines3
pip install huggingface_sb3
```

Implementation Guide

Once you have the required libraries installed, you can use the following code to load and evaluate the pre-trained PPO model on LunarLander-v2:

```python
import gym
from huggingface_sb3 import load_from_hub
from stable_baselines3 import PPO
from stable_baselines3.common.evaluation import evaluate_policy

# Retrieve the model from the hub
repo_id = "mrm8488/ppo-LunarLander-v2"  # Replace with your repo id
filename = "ppo-LunarLander-v2.zip"
checkpoint = load_from_hub(repo_id=repo_id, filename=filename)
model = PPO.load(checkpoint)

# Evaluate the agent
eval_env = gym.make("LunarLander-v2")
mean_reward, std_reward = evaluate_policy(model, eval_env, n_eval_episodes=10, deterministic=True)
print(f"mean_reward={mean_reward:.2f} +/- {std_reward:.2f}")

# Watch the agent play
# (classic Gym API: reset() returns obs, step() returns four values)
obs = eval_env.reset()
for i in range(1000):
    action, _state = model.predict(obs)
    obs, reward, done, info = eval_env.step(action)
    eval_env.render()
    if done:
        obs = eval_env.reset()
eval_env.close()
```

Understanding the Code: An Analogy

Think of the PPO agent as a pilot training to land a spaceship on the moon (LunarLander-v2). Here’s how the code corresponds to this analogy:

- Importing Libraries: Just like a pilot gathers tools and maps before the flight, we import necessary libraries to prepare our environment.

- Loading the Model: Loading the pre-trained model is like handing the controls to a pilot who has already completed training, so no further practice is needed before the flight.

- Evaluating the Model: Evaluating the model is like a check ride. The pilot flies 10 landings, and we report the average score and how much it varies between attempts.

- Watching the Model in Action: Finally, watching the agent play is similar to viewing the pilot’s performance in a real-world exercise, where we can witness the skill developed through lots of training.
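
To make the evaluation and playback steps concrete without Box2D or a downloaded model, here is a minimal sketch of the same loop on a toy problem. `ToyLander` and `always_brake` are hypothetical stand-ins invented for this illustration; they are not part of Gym or Stable-Baselines3. The toy environment only imitates the classic Gym `reset()`/`step()` convention used in the code above, and the summary assumes the reported spread is a population standard deviation, which is what NumPy's `std` computes by default.

```python
import random
import statistics


class ToyLander:
    """Hypothetical stand-in for LunarLander-v2 using the classic Gym API."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.height = 0

    def reset(self):
        # Start each episode between 5 and 10 units above the pad.
        self.height = self.rng.randint(5, 10)
        return self.height

    def step(self, action):
        # action 1 = fire the engine (slow descent), action 0 = free fall.
        self.height -= 1 if action == 1 else 3
        done = self.height <= 0
        # Each step costs 1; touching down at exactly 0 pays +100, overshooting pays -100.
        reward = -1.0
        if done:
            reward += 100.0 if self.height == 0 else -100.0
        return self.height, reward, done, {}


def always_brake(obs):
    """Hypothetical policy: always fire the engine."""
    return 1


def run_episodes(env, policy, n_episodes=10):
    """Roll out the policy and return the total reward of each episode."""
    returns = []
    for _ in range(n_episodes):
        obs, done, total = env.reset(), False, 0.0
        while not done:
            obs, reward, done, _info = env.step(policy(obs))
            total += reward
        returns.append(total)
    return returns


returns = run_episodes(ToyLander(seed=0), always_brake, n_episodes=10)
mean_reward = statistics.fmean(returns)
std_reward = statistics.pstdev(returns)  # population standard deviation
print(f"mean_reward={mean_reward:.2f} +/- {std_reward:.2f}")
```

The inner `while` loop is the same pattern as the "watch the agent play" loop, minus rendering, and the last three lines mirror the mean and spread that the evaluation step prints. For the real LunarLander-v2 environment, the Gym documentation describes an episode scoring at least 200 points as a solution, which gives a rough yardstick for reading `mean_reward`.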

Troubleshooting Common Issues

During your exploration with this pre-trained PPO agent, you might encounter a few hiccups. Here are some common solutions:

- Import Errors: Ensure that you have installed stable-baselines3 and huggingface_sb3 correctly. You might need to restart your Python kernel after installation.

- Environment Not Found: Verify that your gym environment name is correctly specified. It should match exactly, such as "LunarLander-v2".

- Model Loading Issues: Make sure the repository ID and filename are correct while loading the model from the Hugging Face Hub.
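
For the import errors in particular, a quick check of what is actually installed in the active Python environment can save some guesswork. The sketch below uses only the standard library; `check_dependencies` and `REQUIRED` are hypothetical helper names introduced here, and the pip distribution names in `REQUIRED` are assumptions based on the install commands above.

```python
import importlib.util
from importlib import metadata


def check_dependencies(requirements):
    """Map each module name to its installed version, or None if it cannot be imported.

    `requirements` maps an importable module name to its pip distribution name.
    """
    report = {}
    for module_name, dist_name in requirements.items():
        if importlib.util.find_spec(module_name) is None:
            report[module_name] = None
            continue
        try:
            report[module_name] = metadata.version(dist_name)
        except metadata.PackageNotFoundError:
            # Importable, but no matching distribution metadata was found.
            report[module_name] = "unknown"
    return report


# Assumed module -> distribution names for the libraries used in this post.
REQUIRED = {
    "gym": "gym",
    "stable_baselines3": "stable-baselines3",
    "huggingface_sb3": "huggingface-sb3",
}

for name, version in check_dependencies(REQUIRED).items():
    status = version if version is not None else "MISSING (install it with pip)"
    print(f"{name}: {status}")
```

Run it in the same kernel or virtual environment that raised the import error; a package installed into a different environment will still show up as missing here.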

