Sentence Transformers have become a widely used tool in NLP (Natural Language Processing) for turning text into vectors that capture meaning. This article walks through how to use the sentence-transformers library, focusing on the all-mpnet-base-v2 model.

Understanding Sentence Transformers

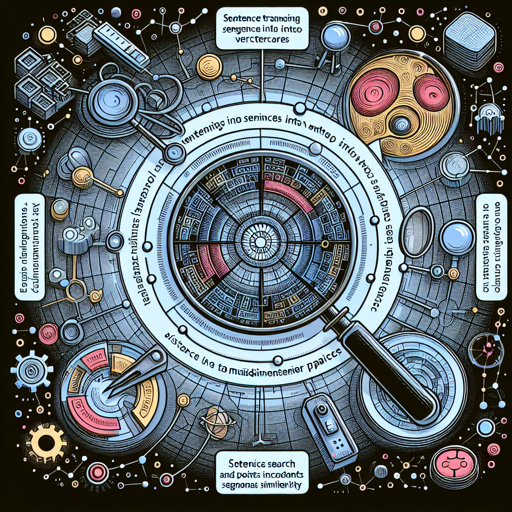

The all-mpnet-base-v2 model, loaded through the Sentence Transformers library, maps sentences and paragraphs to a 768-dimensional dense vector space. These embeddings support tasks such as clustering, semantic search, and sentence similarity. Each sentence becomes a point in that space, and the proximity of two points reflects their semantic relationship: the closer the points, the more similar the sentences.
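To make the idea of proximity concrete, here is a minimal sketch that compares vectors with cosine similarity, one common way to measure how close two embeddings are. The three-dimensional vectors and their names are hypothetical stand-ins chosen for illustration; real embeddings from this model have 768 dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" (assumption: invented values, not model output).
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

# Related sentences should score higher than unrelated ones.
print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))  # True
```

With real embeddings, the library's own helper `sentence_transformers.util.cos_sim` can do the same comparison on whole batches.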

Installation

To begin your journey with Sentence Transformers, you will first need to install the library. Here’s how:

- Open your command line interface (Terminal for macOS/Linux or Command Prompt for Windows).

- Run the following command to install the library:

pip install -U sentence-transformers

With that done, you’re all set to use Sentence Transformers!
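If you want to confirm that the install succeeded, a quick check along these lines may help. This is a small sketch using only the Python standard library (`importlib.metadata`, available from Python 3.8); the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None

# Prints a version string if the install worked, or None otherwise.
print(installed_version("sentence-transformers"))
```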

Usage of All-MPNet-Base-V2 Model

Once installed, follow these steps to encode your sentences:

- First, import the SentenceTransformer class from the library.

- Prepare the sentences you want to encode.

- Instantiate the SentenceTransformer with the model name.

- Call the encode method on your sentences.

- Print the resulting embeddings.

from sentence_transformers import SentenceTransformer

# Sentences to encode
sentences = ["This is an example sentence.", "Each sentence is converted"]

# Load the pretrained model by name
model = SentenceTransformer('sentence-transformers/all-mpnet-base-v2')

# Compute one embedding per sentence
embeddings = model.encode(sentences)
print(embeddings)

Using HuggingFace Transformers

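If you prefer not to use the Sentence Transformers library, you can use HuggingFace Transformers directly. In that case you pass the input through the transformer model yourself, then apply a pooling operation over the token embeddings to obtain one vector per sentence. Below is an approach: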

from transformers import AutoTokenizer, AutoModel
import torch
import torch.nn.functional as F

# Mean pooling: average the token embeddings, using the attention mask to ignore padding
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # first element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences to encode
sentences = ["This is an example sentence.", "Each sentence is converted"]

# Load the tokenizer and model from the HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-mpnet-base-v2')
model = AutoModel.from_pretrained('sentence-transformers/all-mpnet-base-v2')

# Tokenize the sentences into padded PyTorch tensors
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings without tracking gradients
with torch.no_grad():
    model_output = model(**encoded_input)

# Pool token embeddings into one vector per sentence
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

# Normalize each embedding to unit length
sentence_embeddings = F.normalize(sentence_embeddings, p=2, dim=1)

print("Sentence embeddings:")
print(sentence_embeddings)

Evaluation Results

If you want to verify how effective this model is, you can check out the Sentence Embeddings Benchmark.

Background: Project Insights

The model is a fine-tuned version of microsoft/mpnet-base, trained on over a billion sentence pairs with a self-supervised contrastive learning objective. It is intended to generate embeddings for sentences and short paragraphs. The resulting vectors capture semantic information that is useful for information retrieval, clustering, and sentence similarity tasks.
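For readers unfamiliar with contrastive objectives, the sketch below shows one common form: cross-entropy over a batch similarity matrix, where each sentence should score highest against its true partner and lower against every other sentence in the batch. This is an illustration of the general idea, not the project's training code. The function name, the toy similarity values, and the `scale` factor of 20 are assumptions made for this example.

```python
import math

def in_batch_contrastive_loss(sim, scale=20.0):
    """Mean cross-entropy over the rows of a similarity matrix.

    sim[i][j] is the similarity between anchor i and candidate j.
    The true partner of anchor i is candidate i (the diagonal).
    """
    total = 0.0
    for i, row in enumerate(sim):
        logits = [scale * s for s in row]
        # Numerically stable log-sum-exp over the row
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability assigned to the true partner
        total += log_sum_exp - logits[i]
    return total / len(sim)

# Hypothetical similarity matrices for a batch of two pairs.
well_aligned = [[0.9, 0.1], [0.2, 0.8]]    # true pairs score highest
poorly_aligned = [[0.1, 0.9], [0.8, 0.2]]  # true pairs score lowest

print(in_batch_contrastive_loss(well_aligned) < in_batch_contrastive_loss(poorly_aligned))  # True
```

Minimizing a loss of this kind pulls paired sentences together in the embedding space and pushes unpaired ones apart.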

Troubleshooting Tips

Should you encounter any issues while working with Sentence Transformers, consider the following:

- Installation Errors: Ensure that you have the latest version of pip and proper access rights for installations.

- Compatibility Issues: Verify that your libraries are compatible with your Python version. Python 3.6 is the minimum only for older releases; recent sentence-transformers releases require a newer interpreter, so check the requirements of the version you install.

- OutOfMemory/Resource Issues: If you’re running into resource limitations, consider working with smaller batch sizes or on a machine with more memory.
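The batch-size tip can be sketched as follows. The helper below processes a large list of sentences a few at a time, so each piece can be saved or discarded before the next is computed. The names `iter_encoded_chunks` and `fake_encode` are hypothetical; `fake_encode` is a stand-in so the sketch runs without downloading a model.

```python
def iter_encoded_chunks(encode_fn, sentences, chunk_size=8):
    """Yield the embeddings for one chunk of sentences at a time."""
    for start in range(0, len(sentences), chunk_size):
        yield encode_fn(sentences[start:start + chunk_size])

# Stand-in encoder (assumption): returns a one-number "embedding" per sentence.
def fake_encode(batch):
    return [[float(len(s))] for s in batch]

chunks = list(iter_encoded_chunks(fake_encode, ["a", "bb", "ccc"], chunk_size=2))
print(chunks)  # [[[1.0], [2.0]], [[3.0]]]
```

With a real model you could pass `model.encode` as `encode_fn`. For many cases it is simpler to lower the internal batch size directly, for example `model.encode(sentences, batch_size=8)`.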

If problems persist, do not hesitate to reach out for further assistance!
