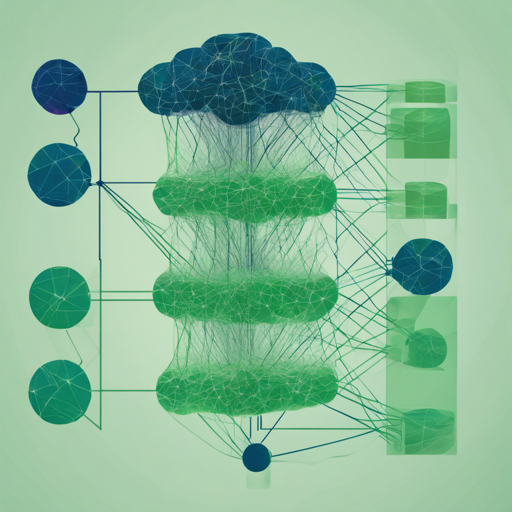

Produces hierarchical interpretations for a single prediction made by a PyTorch neural network. Official code for the paper "Hierarchical Interpretations for Neural Network Predictions" (ICLR 2019).


Note: this repo is actively maintained. For any questions, please file an issue.

Overview

Hierarchical Neural-net Interpretations (ACD, short for agglomerative contextual decomposition) produces hierarchical interpretations for individual predictions made by neural networks built with PyTorch. Instead of scoring each input feature in isolation, ACD shows how the network combines groups of features (for example, phrases in a sentence or patches of an image) into its prediction, which makes it easier for researchers and developers to see how a model arrives at a decision.
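To make the idea concrete, here is a minimal sketch of contextual decomposition (CD), the per-layer scoring that ACD builds on, for a plain fully connected ReLU network in pure Python. It is not the repository's implementation: the names (cd_linear, cd_relu, cd_mlp, forward) are hypothetical, and the rules follow our reading of the paper. The input is split into a relevant part beta (the features being scored) and an irrelevant part gamma. Linear layers split their bias in proportion to |W beta| and |W gamma|, and ReLU assigns ReLU(beta) to the relevant part and the remainder to the irrelevant part.

```python
from typing import List, Sequence, Set, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights W, bias b) of one fully connected layer


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: float) -> float:
    return max(v, 0.0)


def cd_linear(w: Matrix, b: Vector, beta: Vector, gamma: Vector) -> Tuple[Vector, Vector]:
    """Propagate (beta, gamma) through y = W x + b, splitting b by |W beta| : |W gamma|."""
    wb, wg = matvec(w, beta), matvec(w, gamma)
    new_beta, new_gamma = [], []
    for wbi, wgi, bi in zip(wb, wg, b):
        denom = abs(wbi) + abs(wgi)
        share = abs(wbi) / denom if denom > 0 else 0.5  # assumption: split evenly if both are 0
        new_beta.append(wbi + share * bi)
        new_gamma.append(wgi + (1.0 - share) * bi)
    return new_beta, new_gamma


def cd_relu(beta: Vector, gamma: Vector) -> Tuple[Vector, Vector]:
    """Relevant part gets ReLU(beta); the irrelevant part absorbs the rest of ReLU(beta + gamma)."""
    new_beta = [relu(bi) for bi in beta]
    new_gamma = [relu(bi + gi) - relu(bi) for bi, gi in zip(beta, gamma)]
    return new_beta, new_gamma


def cd_mlp(layers: List[Layer], x: Vector, relevant: Set[int]) -> Tuple[Vector, Vector]:
    """Return (beta, gamma) at the output for the input features indexed by `relevant`."""
    beta = [xi if i in relevant else 0.0 for i, xi in enumerate(x)]
    gamma = [0.0 if i in relevant else xi for i, xi in enumerate(x)]
    for k, (w, b) in enumerate(layers):
        beta, gamma = cd_linear(w, b, beta, gamma)
        if k < len(layers) - 1:  # ReLU after every layer except the last
            beta, gamma = cd_relu(beta, gamma)
    return beta, gamma


def forward(layers: List[Layer], x: Vector) -> Vector:
    """Ordinary forward pass, used to check that beta + gamma reconstructs the output."""
    h = list(x)
    for k, (w, b) in enumerate(layers):
        h = [hi + bi for hi, bi in zip(matvec(w, h), b)]
        if k < len(layers) - 1:
            h = [relu(v) for v in h]
    return h


if __name__ == "__main__":
    layers = [([[1.0, -1.0], [0.5, 2.0]], [0.1, -0.2]), ([[1.0, 1.0]], [0.0])]
    x = [1.0, 2.0]
    beta, gamma = cd_mlp(layers, x, relevant={0})
    print("CD score of feature 0:", beta[0])
    print("beta + gamma:", beta[0] + gamma[0], "forward:", forward(layers, x)[0])
```

Both rules preserve the sum, so beta + gamma at the output reproduces the network's prediction, and beta serves as the score of the chosen features. ACD applies this kind of scoring to many candidate groups of features to build its hierarchy.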

Getting Started with ACD

To use ACD in your own projects, follow these steps:

Installation

- Run the command: pip install acd

- Alternatively, clone the repository and execute: python setup.py install

Examples

The reproduce_figs folder contains several notebooks that demonstrate ACD in action.

Source Files

The source code for the ACD method is in the acd folder. You can experiment with different types of interpretations by adjusting hyperparameters, as explained in the provided examples. All data, models, and code needed to reproduce the results are included in the dsets folder.

How ACD Works – An Analogy

Think of ACD as an expert tour guide in a vast library filled with thousands of books (your neural network). When you ask the guide about a specific book (a prediction), they don’t just hand it to you. Instead, they take you on a journey through the library, showing you the authors, the categories, and how the book fits into the larger context of that library. Similarly, ACD does not stop at scoring individual input features: it shows which features, and which larger groups of features, influenced the model’s prediction and how those groups combine, making it easier to understand why the model reached a certain conclusion.
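The hierarchical part can be sketched as a greedy agglomeration over a 1-D input such as a sentence. This is a simplified illustration, not the repository's algorithm: it starts from single positions and, at each step, merges the adjacent pair of groups whose joint score exceeds the sum of their separate scores by the most. The function names and the toy score (a hypothetical stand-in for a CD score, with a built-in interaction between "not" and "bad") are assumptions.

```python
from typing import Callable, FrozenSet, List, Tuple

Group = Tuple[int, ...]
ScoreFn = Callable[[FrozenSet[int]], float]


def agglomerate_1d(n: int, score: ScoreFn) -> List[List[Group]]:
    """Greedily merge adjacent groups of positions 0..n-1 into a hierarchy.

    At each step the adjacent pair (A, B) with the largest interaction
    score(A | B) - score(A) - score(B) is merged (ties go to the leftmost pair).
    Returns the grouping at every level, from single positions to one group.
    """
    groups: List[Group] = [(i,) for i in range(n)]
    levels = [list(groups)]
    while len(groups) > 1:
        best_idx, best_gain = 0, float("-inf")
        for i in range(len(groups) - 1):
            a, b = frozenset(groups[i]), frozenset(groups[i + 1])
            gain = score(a | b) - score(a) - score(b)
            if gain > best_gain:
                best_idx, best_gain = i, gain
        merged = groups[best_idx] + groups[best_idx + 1]
        groups = groups[:best_idx] + [merged] + groups[best_idx + 2:]
        levels.append(list(groups))
    return levels


def toy_score(group: FrozenSet[int]) -> float:
    """Hypothetical stand-in for a CD score on "the movie was not bad"."""
    weights = {3: -0.5, 4: -2.0}  # "not" and "bad" look negative on their own
    total = sum(weights.get(i, 0.0) for i in group)
    if {3, 4} <= group:  # together, "not bad" is positive
        total += 4.0
    return total


if __name__ == "__main__":
    words = "the movie was not bad".split()
    for level in agglomerate_1d(len(words), toy_score):
        print([" ".join(words[i] for i in g) for g in level])
```

The first merge joins "not" and "bad", because that pair carries the only interaction; the remaining merges have no interaction and simply proceed left to right.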

Using ACD with Your Own Data

When applying ACD to your own data and model, the current implementation often works out of the box for networks built from common layers, such as AlexNet, VGG, or ResNet. However, if your network uses custom layers, or layers that are not accessible through net.modules(), you may need to write a custom function that iterates through the relevant layers of your network. For examples, see cd.py.
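One way to structure such a custom function is sketched below in framework-agnostic Python: flatten nested containers into leaf layers in forward order, then look up a per-layer decomposition rule and fail loudly on any layer without one. Everything here (Scale, ReLU, Block, CD_RULES, register, cd_through) is hypothetical and only illustrates the pattern; in a PyTorch model, the flattening step would walk whatever attributes hold your custom sublayers, and the rules would operate on tensors.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Rule = Callable[[object, Vector, Vector], Tuple[Vector, Vector]]


class Scale:
    """Toy elementwise layer y = s * x (no bias)."""

    def __init__(self, s: float) -> None:
        self.s = s

    def __call__(self, x: Vector) -> Vector:
        return [self.s * v for v in x]


class ReLU:
    def __call__(self, x: Vector) -> Vector:
        return [max(v, 0.0) for v in x]


class Block:
    """Toy container keeping sublayers in a plain list attribute, standing in
    for a custom module whose layers a generic module walk would not find."""

    def __init__(self, *layers: object) -> None:
        self.layers = list(layers)

    def __call__(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer(x)
        return x


def leaf_layers(layer: object) -> List[object]:
    """Flatten nested Blocks into leaf layers, in forward order."""
    if isinstance(layer, Block):
        return [leaf for child in layer.layers for leaf in leaf_layers(child)]
    return [layer]


CD_RULES: Dict[type, Rule] = {}


def register(layer_type: type) -> Callable[[Rule], Rule]:
    """Decorator that records a decomposition rule for one layer type."""
    def wrap(rule: Rule) -> Rule:
        CD_RULES[layer_type] = rule
        return rule
    return wrap


@register(Scale)
def _cd_scale(layer, beta, gamma):
    # Linear and bias-free, so each part is simply scaled.
    return [layer.s * v for v in beta], [layer.s * v for v in gamma]


@register(ReLU)
def _cd_relu(layer, beta, gamma):
    new_beta = [max(b, 0.0) for b in beta]
    new_gamma = [max(b + g, 0.0) - max(b, 0.0) for b, g in zip(beta, gamma)]
    return new_beta, new_gamma


def cd_through(model: object, beta: Vector, gamma: Vector) -> Tuple[Vector, Vector]:
    """Push (beta, gamma) through every leaf layer, using the registered rules."""
    for layer in leaf_layers(model):
        rule = CD_RULES.get(type(layer))
        if rule is None:
            raise NotImplementedError(f"no CD rule for layer type {type(layer).__name__}")
        beta, gamma = rule(layer, beta, gamma)
    return beta, gamma


if __name__ == "__main__":
    model = Block(Scale(2.0), Block(Scale(-1.0), ReLU()), Scale(3.0))
    x = [1.0, -2.0]
    beta, gamma = cd_through(model, [1.0, 0.0], [0.0, -2.0])  # feature 0 is relevant
    print(beta, gamma, model(x))
```

Raising NotImplementedError for unregistered layer types makes an unsupported layer show up immediately, instead of silently producing scores that no longer add up to the prediction.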

If you’re using baseline techniques such as build-up or occlusion, replace the pred_ims function with one that fetches predictions from your model given a batch of examples.
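For intuition about what such a batch prediction function feeds into, here is a minimal pure-Python sketch of occlusion and build-up scores driven by one batched prediction call. We have not reproduced pred_ims's actual signature; pred_batch, toy_pred_batch, and the scalar-output model are hypothetical. Occlusion is taken here as the drop in prediction when a feature is set to a baseline value, and build-up as the prediction with only that feature present minus the prediction with nothing present; these definitions and the baseline of 0.0 are assumptions.

```python
from typing import Callable, List, Sequence

Batch = List[List[float]]
PredFn = Callable[[Batch], List[float]]  # one scalar prediction per example


def occlusion_scores(pred_batch: PredFn, x: Sequence[float], baseline: float = 0.0) -> List[float]:
    """Score_i = f(x) - f(x with feature i replaced by `baseline`), in one batched call."""
    batch = [list(x)]
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        batch.append(occluded)
    preds = pred_batch(batch)
    return [preds[0] - p for p in preds[1:]]


def build_up_scores(pred_batch: PredFn, x: Sequence[float], baseline: float = 0.0) -> List[float]:
    """Score_i = f(only feature i kept, rest at `baseline`) - f(everything at `baseline`)."""
    empty = [baseline] * len(x)
    batch = [list(empty)]
    for i in range(len(x)):
        only_i = list(empty)
        only_i[i] = x[i]
        batch.append(only_i)
    preds = pred_batch(batch)
    return [p - preds[0] for p in preds[1:]]


def toy_pred_batch(batch: Batch) -> List[float]:
    """Hypothetical model f(x) = 2*x0 + x0*x1 - x2, evaluated on a batch."""
    return [2 * a + a * b - c for a, b, c in batch]


if __name__ == "__main__":
    x = [1.0, 3.0, 2.0]
    print("occlusion:", occlusion_scores(toy_pred_batch, x))
    print("build-up: ", build_up_scores(toy_pred_batch, x))
```

Sending all perturbed inputs as a single batch is what makes a batched prediction function convenient here. On this toy model, occlusion credits the interaction term x0*x1 to both x0 and x1, while build-up credits it to neither, which is one reason the two baselines can disagree.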

Troubleshooting

If you encounter issues during installation or implementation, here are some troubleshooting tips:

- Ensure your Python version is between 3.6 and 3.8, and that you are using PyTorch 1.0 or higher.

- If certain layers aren’t recognized, check whether those layers are supported by the current version.

- Consult the documentation for additional examples and clarification on hyperparameters.

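If you prefer to check the version points programmatically, a small stdlib-only script along these lines can flag a mismatch. The bounds simply mirror the Python and PyTorch tips in this section rather than a verified compatibility matrix, and parse_version and check_environment are hypothetical helpers, not part of acd.

```python
import sys
from typing import List, Optional, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Leading numeric components of a version string, e.g. '1.13.1+cu117' -> (1, 13, 1)."""
    parts: List[int] = []
    for piece in v.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def check_environment(py: Tuple[int, int], torch_version: Optional[str]) -> List[str]:
    """Return warnings for versions outside the ranges suggested in the tips."""
    problems = []
    if not ((3, 6) <= tuple(py) <= (3, 8)):
        problems.append(f"Python {py[0]}.{py[1]} is outside the suggested 3.6-3.8 range")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif parse_version(torch_version) < (1, 0):
        problems.append(f"PyTorch {torch_version} is older than 1.0")
    return problems


if __name__ == "__main__":
    try:
        import torch
        torch_version: Optional[str] = str(torch.__version__)
    except ImportError:
        torch_version = None
    for warning in check_environment(tuple(sys.version_info[:2]), torch_version):
        print("warning:", warning)
```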


Related Work

For those interested in similar methodologies:

- CDEP (ICML 2020) – Penalizes ACD scores during training to enhance model generalization.

- TRIM (ICLR 2020 Workshop) – Facilitates the calculation of disentangled importances related to input transformations.

- PDR Framework (PNAS 2019) – Provides a comprehensive framework for interpretable machine learning.

- DAC – Finds disentangled interpretations for random forests.

- Simple implementations of Integrated Gradients and gradient * input can also be found in score_funcs.py.
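For reference, both techniques fit in a few lines. The sketch below is not the code in score_funcs.py: it uses a hand-written gradient of a toy function in place of PyTorch autograd, approximates the Integrated Gradients path integral with a midpoint Riemann sum from an all-zeros baseline (an assumption), and all names are hypothetical.

```python
from typing import Callable, List, Optional, Sequence

GradFn = Callable[[Sequence[float]], List[float]]


def gradient_times_input(grad_f: GradFn, x: Sequence[float]) -> List[float]:
    """Elementwise product of the gradient at x with x itself."""
    return [g * xi for g, xi in zip(grad_f(x), x)]


def integrated_gradients(grad_f: GradFn, x: Sequence[float],
                         baseline: Optional[Sequence[float]] = None,
                         steps: int = 50) -> List[float]:
    """(x_i - x'_i) times the average gradient along the straight path from x' to x."""
    if baseline is None:
        baseline = [0.0] * len(x)
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for b, xi in zip(baseline, x)]
        for i, g in enumerate(grad_f(point)):
            total[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


def toy_grad(x: Sequence[float]) -> List[float]:
    """Gradient of the hypothetical model f(x) = x0**2 + 3*x0*x1."""
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


if __name__ == "__main__":
    x = [1.0, 2.0]
    print("gradient * input:", gradient_times_input(toy_grad, x))
    print("integrated gradients:", integrated_gradients(toy_grad, x))
```

On this toy function, the Integrated Gradients attributions sum to f(x) - f(baseline) = 7, while gradient * input gives [8, 6], which does not. This completeness property is the usual argument for the extra cost of Integrated Gradients.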

References

If you find this code useful for your research, please cite the following:

@inproceedings{singh2019hierarchical,
  title={Hierarchical Interpretations for Neural Network Predictions},
  author={Chandan Singh and W. James Murdoch and Bin Yu},
  booktitle={International Conference on Learning Representations},
  year={2019},
  url={https://openreview.net/forum?id=SkEqro0ctQ}
}