Large Language Models (LLMs) have changed how many companies operate, but they come with real challenges, including inaccurate output and the potential to expose sensitive information. In this blog, we will walk through auditing LLMs with Fiddler Auditor so you can test your models before deploying them.

What is Fiddler Auditor?

Fiddler Auditor is a tool that helps teams rigorously test and evaluate LLMs before they are deployed to production. By surfacing weaknesses and adversarial outcomes ahead of time, it helps ML and software application teams mitigate risk.

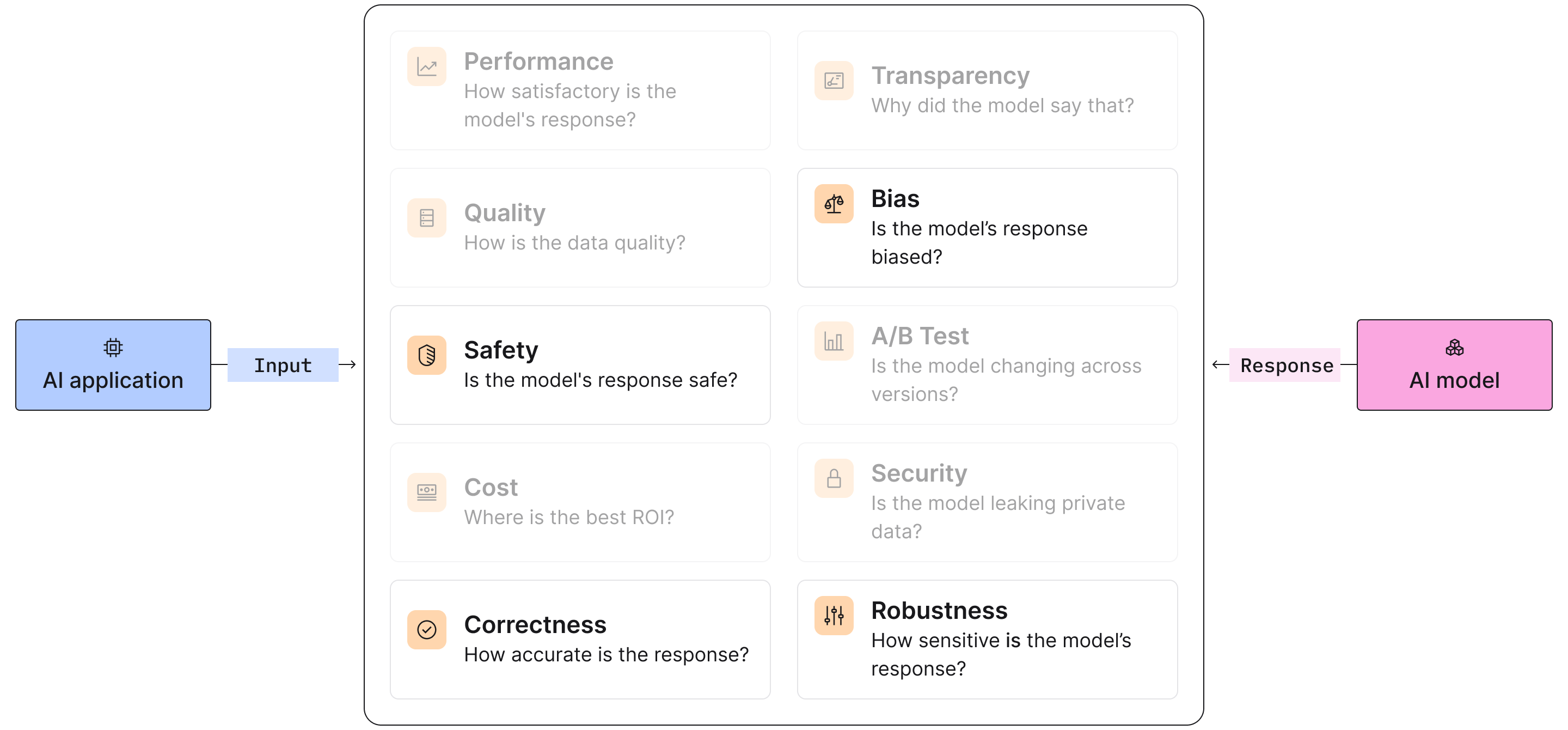

Features and Capabilities

Fiddler Auditor's main features include:

- Red-teaming LLMs for your use-case with prompt perturbation

- Integration with LangChain

- Custom evaluation metrics

- Support for Generative and Discriminative NLP models

- Comparison of various LLMs
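To make the idea of prompt perturbation concrete, here is a minimal conceptual sketch in plain Python. It does not use Fiddler Auditor's API: `perturb_prompt`, `similarity`, `robustness_report`, and `toy_model` are hypothetical names invented for illustration, the perturbations are hand-written rather than generated, and the string similarity from `difflib` is only a stand-in for whatever similarity metric a real audit would use. The point is just the shape of the check: vary the prompt, call the model, and compare each response with the response to the original prompt.

```python
import difflib
from typing import Callable, Dict, List


def perturb_prompt(prompt: str) -> List[str]:
    """Return a few hand-written variations of a prompt (hypothetical helper)."""
    return [
        prompt.lower(),                        # change the casing
        prompt.rstrip("?.!") + ", please?",    # add a politeness marker
        "Quick question: " + prompt,           # add a short prefix
    ]


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for a semantic metric."""
    return difflib.SequenceMatcher(None, a, b).ratio()


def robustness_report(
    model: Callable[[str], str], prompt: str, threshold: float = 0.8
) -> List[Dict[str, object]]:
    """Compare the model's answer to each perturbed prompt with its answer to the original."""
    reference = model(prompt)
    rows = []
    for variant in perturb_prompt(prompt):
        response = model(variant)
        score = similarity(reference, response)
        rows.append(
            {
                "prompt": variant,
                "response": response,
                "score": score,
                "passed": score >= threshold,
            }
        )
    return rows


def toy_model(prompt: str) -> str:
    """Stand-in for a real LLM call; deliberately sensitive to the word 'please'."""
    if "please" in prompt.lower():
        return "Sure! Paris is the capital of France."
    return "The capital of France is Paris."


if __name__ == "__main__":
    for row in robustness_report(toy_model, "What is the capital of France?"):
        print(row["passed"], round(row["score"], 2), row["prompt"])
```

Because `model` is just a callable that maps a prompt to a response, the same report can be run against several models to compare them. In a real audit you would replace `toy_model` with an actual LLM call and use the tool's own perturbation and evaluation features, as shown in the quick-start notebooks.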

How to Install Fiddler Auditor

From PyPI

To get started, you can install Fiddler Auditor from PyPI, which is compatible with Python 3.8 and above. We recommend creating a virtual Python environment for ease of management. Run the following command:

pip install fiddler-auditor

From Source

If you prefer to install from source after cloning the repository, execute this command:

pip install .

Quick-start Guides

Once installed, you can dive right into using Fiddler Auditor with these quick-start guides:

- [Fiddler Auditor Quickstart](https://colab.research.google.com/github/fiddler-labs/fiddler-auditor/blob/main/examples/LLM_Evaluation.ipynb)

- [Evaluate LLMs with custom metrics](https://colab.research.google.com/github/fiddler-labs/fiddler-auditor/blob/main/examples/Custom_Evaluation.ipynb)

- [Prompt injection attack with custom transformation](https://colab.research.google.com/github/fiddler-labs/fiddler-auditor/blob/main/examples/Custom_Transformation.ipynb)

Troubleshooting

If you encounter any issues while using Fiddler Auditor, here are some troubleshooting tips:

- Common Installation Errors: Ensure you have the correct version of Python installed, and check the compatibility of any dependencies.

- Feature Usage Confusion: If you’re having trouble using specific features, refer back to the quick-start guides or the issue tracker for possible solutions.

- Unexpected Results: Check your input data and the models being evaluated, as any discrepancies can affect the outcomes.
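For the installation check in the first tip, a small script can confirm the Python version and whether the package is visible to the interpreter you are actually running. This is a minimal sketch using only the standard library. `check_environment` is a hypothetical helper name, and it assumes the installed distribution is registered under the same name used in the pip command above, `fiddler-auditor`.

```python
import sys
from importlib import metadata  # part of the standard library from Python 3.8
from typing import Dict, Tuple


def check_environment(
    package: str = "fiddler-auditor", min_python: Tuple[int, int] = (3, 8)
) -> Dict[str, object]:
    """Report the running Python version and the installed version of a package."""
    try:
        installed_version = metadata.version(package)
    except metadata.PackageNotFoundError:
        # The package is not installed in this interpreter's environment.
        installed_version = None
    return {
        "python_version": "{}.{}.{}".format(*sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= min_python,
        "package": package,
        "installed_version": installed_version,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `installed_version` comes back as `None` even though the pip command succeeded, the usual cause is that pip installed into a different environment than the one running the script, so check that your virtual environment is activated.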

Conclusion

Auditing LLMs can seem daunting, but with Fiddler Auditor, you have everything you need to ensure a safe and effective deployment of your AI models. As we embrace these advancements, it’s crucial to leverage tools that help maintain quality and integrity in our applications.
