The paper "Benchmarking Neural Network Robustness to Common Corruptions and Perturbations" gives researchers benchmark datasets and evaluation code for testing how well neural networks withstand common image corruptions and perturbations. This post walks you through setting up your environment, downloading the necessary datasets, running evaluations, and troubleshooting common issues.

Getting Started

To get started with benchmarking your neural network for robustness, you’ll need a working environment set up. Follow these steps:

- Ensure you have Python 3+ installed on your system.

- Install PyTorch 0.3+ via the official installation guide.

- Familiarize yourself with the evaluation methods by reading through the code and instructions in the repository.

Downloading Required Datasets

You will need to download the following datasets to begin your evaluations:

- ImageNet-C (Standard size images)

- Tiny ImageNet-C (64×64 images with 200 classes)

- CIFAR-10-C and CIFAR-100-C (smaller datasets, useful if you’re aiming for quicker experiments)
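The CIFAR-10-C and CIFAR-100-C downloads are commonly laid out as one NumPy array per corruption type, with the five severity levels stacked back to back (10,000 test images each). Assuming that layout, which you should verify against your own copy, a small helper can work out which rows belong to a given severity. The name `severity_slice` and its defaults are illustrative assumptions, not part of the repository.

```python
def severity_slice(severity, images_per_level=10_000, num_levels=5):
    """Return the slice of rows that holds one severity level (1-based).

    Assumes the levels are stored consecutively, each with
    `images_per_level` images. Check this against your own files.
    """
    if not 1 <= severity <= num_levels:
        raise ValueError(f"severity must be in 1..{num_levels}, got {severity}")
    start = (severity - 1) * images_per_level
    return slice(start, start + images_per_level)
```

The returned slice works on any sequence, so it can index a loaded NumPy array and its matching label array in the same way, for example `images[severity_slice(3)]` and `labels[severity_slice(3)]`.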

Evaluating the Models

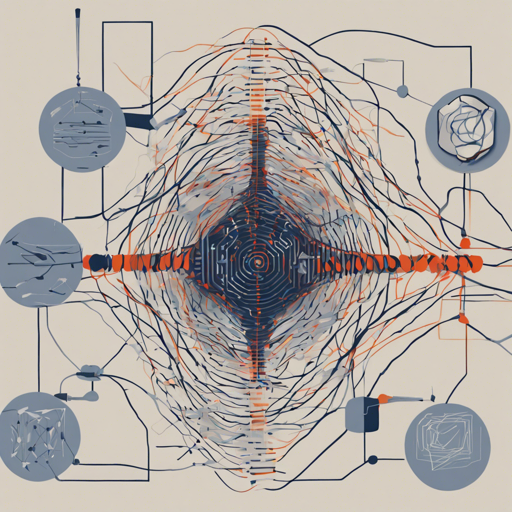

After setting up the environment and downloading the datasets, you can begin evaluating your models. The approach is akin to an athlete preparing for a competition by first warming up and then measuring their performance under various conditions. Here’s a breakdown of how the model evaluation process works:

- Preparation: Just as an athlete stretches before a race, you need to ensure your data is prepared, meaning the corrupted images are stored correctly.

- Testing: Similar to how an athlete tests their speed on different surfaces—grass, concrete, and track—you will evaluate the model on different datasets affected by corruption or perturbation.

- Analysis: After testing, performance metrics such as mCE (mean Corruption Error) are calculated to determine robustness, akin to tracking an athlete’s timing statistics to find areas for improvement.
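The three steps above can be sketched as a plain loop over corruption types and severity levels. This is a minimal, framework-agnostic sketch rather than the repository's evaluation script: `predict` is a hypothetical callable that wraps your model and returns one label per input, and the nested dictionary passed to `evaluate_corruptions` is an assumed structure chosen for illustration.

```python
def error_rate(predict, inputs, labels, batch_size=256):
    """Fraction of examples that `predict` labels incorrectly.

    `predict` is a hypothetical callable: it receives a batch of inputs
    and returns one predicted label per input.
    """
    if len(inputs) != len(labels):
        raise ValueError("inputs and labels must have the same length")
    if len(inputs) == 0:
        raise ValueError("cannot compute an error rate on an empty set")
    wrong = 0
    for start in range(0, len(inputs), batch_size):
        batch = inputs[start:start + batch_size]
        targets = labels[start:start + batch_size]
        preds = predict(batch)
        wrong += sum(int(p != t) for p, t in zip(preds, targets))
    return wrong / len(inputs)


def evaluate_corruptions(predict, corrupted_sets):
    """Return {corruption: {severity: error_rate}}.

    `corrupted_sets` is an assumed layout:
    {corruption_name: {severity: (inputs, labels)}}.
    """
    return {
        name: {
            severity: error_rate(predict, inputs, labels)
            for severity, (inputs, labels) in sorted(levels.items())
        }
        for name, levels in corrupted_sets.items()
    }
```

Keeping the per-severity error rates separate, instead of averaging them right away, makes it easier to see where a model degrades and leaves the numbers in the form needed for the summary metrics.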

Understanding Metrics

Upon evaluation, you will be presented with data regarding model performance. This includes:

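- mCE (mean Corruption Error): A single number that summarizes performance across all corruption types. For each corruption, the model’s error is summed over the five severity levels and divided by the corresponding sum for a baseline model (AlexNet in the paper); mCE is the average of these ratios. Lower is better.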

- Clean Error: This indicates how the model performs under normal, uncorrupted conditions.
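To make the mCE calculation concrete, here is a short sketch that follows the paper's definition: for each corruption, divide the model's errors summed over severity levels by the baseline's summed errors, then average the ratios. The function names and the dictionary layout are assumptions for illustration, and any numbers you pass in should come from your own evaluation runs.

```python
def corruption_error(model_errors, baseline_errors):
    """CE for one corruption: sum of model errors / sum of baseline errors.

    Both arguments hold one error rate per severity level.
    """
    if len(model_errors) != len(baseline_errors):
        raise ValueError("need the same number of severity levels for both models")
    baseline_total = sum(baseline_errors)
    if baseline_total == 0:
        raise ValueError("baseline errors sum to zero; the ratio is undefined")
    return sum(model_errors) / baseline_total


def mean_corruption_error(model, baseline):
    """mCE: average CE over all corruption types.

    `model` and `baseline` are assumed to map corruption name to a list
    of per-severity error rates.
    """
    if not model:
        raise ValueError("no corruption results supplied")
    ces = [corruption_error(model[name], baseline[name]) for name in model]
    return sum(ces) / len(ces)
```

Because each corruption is normalized by the baseline, a value of 1.0 (often reported as 100%) means the model is, on average, as error-prone as the baseline on corrupted inputs.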

Troubleshooting Common Issues

If you encounter issues at any step of your benchmarking process, consider the following troubleshooting steps:

- Installation Issues: Verify that Python and PyTorch are installed correctly. You can check this from your terminal or command prompt, for example with `python --version` and `python -c "import torch; print(torch.__version__)"`.

- Data Download Problems: Ensure that the URLs have been copied correctly and check your internet connection.

- Model Evaluation Errors: Review any error messages for clues on what might have gone wrong, such as file not found errors or compatibility issues between different versions of libraries.
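For the installation check, a small script can report the interpreter version and whether PyTorch can be imported, which is often the quickest way to rule out environment problems. This is a sketch using only the standard library plus an optional `torch` import; the function name `check_environment` is an assumption made for this example.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 0)):
    """Report the Python version and whether PyTorch can be imported."""
    report = {
        "python": sys.version.split()[0],
        "python_ok": sys.version_info >= min_python,
    }
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed in this interpreter's environment.
        report["torch"] = None
    else:
        try:
            import torch
            report["torch"] = torch.__version__
        except Exception as exc:  # a broken install can fail at import time
            report["torch"] = f"import failed: {exc}"
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `torch` is reported as `None`, the package is missing from the interpreter you are running, which commonly happens when several Python environments are installed side by side.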
