In the world of natural language processing (NLP), language models like BERT (Bidirectional Encoder Representations from Transformers) serve as powerful tools for a variety of applications. In this article, we will guide you through building a BERT-Small CORD-19 model fine-tuned on SQuAD 2.0. This model is specifically trained to extract answers from medical literature, making it especially valuable for research in the health domain.

Prerequisites

- A basic understanding of Python and command line interfaces.

- Access to an environment with Python and the necessary libraries installed.

- The SQuAD 2.0 dataset files: train-v2.0.json for training and dev-v2.0.json for evaluation.
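Before training, it can help to confirm that the dataset files parse and contain both answerable and unanswerable questions. The sketch below assumes the standard SQuAD 2.0 JSON layout (data, paragraphs, qas, is_impossible); the function names `count_questions` and `summarize` are illustrative and not part of any library.

```python
import json
from pathlib import Path


def count_questions(squad):
    """Return (answerable, unanswerable) counts for a SQuAD-style dict."""
    answerable = 0
    unanswerable = 0
    for article in squad["data"]:
        for paragraph in article["paragraphs"]:
            for qa in paragraph["qas"]:
                # SQuAD 2.0 marks questions without an answer as is_impossible.
                if qa.get("is_impossible", False):
                    unanswerable += 1
                else:
                    answerable += 1
    return answerable, unanswerable


def summarize(path):
    """Load a SQuAD JSON file from disk and count its questions."""
    with Path(path).open(encoding="utf-8") as handle:
        return count_questions(json.load(handle))
```

Calling `summarize("train-v2.0.json")` should return a pair of counts. If the second number is zero, the file is probably SQuAD 1.1 rather than 2.0.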

Step-by-Step Guide

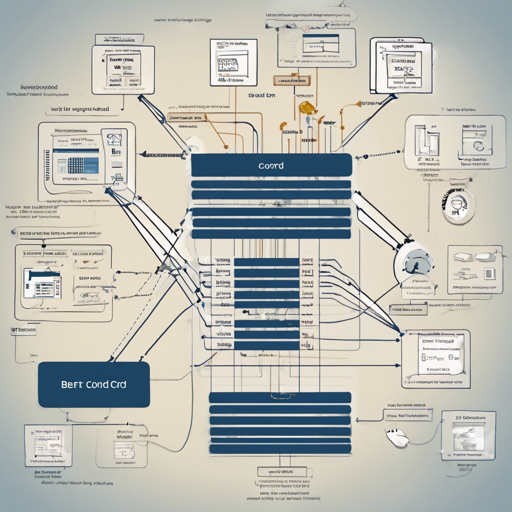

Let’s break down the command to build our model:

```bash
python run_squad.py \
  --model_type bert \
  --model_name_or_path bert-small-cord19 \
  --do_train \
  --do_eval \
  --do_lower_case \
  --version_2_with_negative \
  --train_file train-v2.0.json \
  --predict_file dev-v2.0.json \
  --per_gpu_train_batch_size 8 \
  --learning_rate 3e-5 \
  --num_train_epochs 3.0 \
  --max_seq_length 384 \
  --doc_stride 128 \
  --output_dir bert-small-cord19-squad2 \
  --save_steps 0 \
  --threads 8 \
  --overwrite_cache \
  --overwrite_output_dir
```

Now, let’s make sense of this command using an analogy:

Think of building a model like baking a cake. Here’s how the different ingredients (command arguments) come together:

- python run_squad.py: This is like preheating your oven. It launches the fine-tuning script and gets everything ready.

- --model_type bert: This tells the script the type of cake we’re making (a BERT cake), as opposed to other model architectures.

- --model_name_or_path bert-small-cord19: This is the cake recipe we’re following. It says that we start from the BERT-Small checkpoint specific to CORD-19.

- --do_train & --do_eval: Just like preparing the cake layers and checking for doneness, these flags turn on training the model and evaluating its performance.

- --do_lower_case: This lowercases the input text before tokenization, so that our ingredients are prepared uniformly.

- --version_2_with_negative: This tells the script that the data is SQuAD 2.0, where some questions have no answer in the passage, like adding a tart flavor to balance the sweetness of the cake.

- --train_file & --predict_file: These are our ingredients. The train file is what we bake with initially, and the predict file is what we use to test our cake later.

- --per_gpu_train_batch_size 8: This specifies how many examples each GPU processes per training step, much like how many layers we bake at once.

- --learning_rate 3e-5: This is like the oven temperature. It determines how large each update to the model’s weights is.

- --num_train_epochs 3.0: This indicates how many complete passes we make over the training data, like repeating the recipe until the cake comes out right.

- --max_seq_length 384: This sets the maximum size of our cake, that is, the maximum number of tokens (question plus passage) in one input, so that it fits in the oven.

- --doc_stride 128: When a passage is too long for one input, it is split into overlapping chunks. This sets how many tokens the window moves forward between chunks, ensuring no portion of the cake goes uncooked.

- --output_dir bert-small-cord19-squad2: The kitchen where we store our baked cake (the fine-tuned model and other output files).

- --save_steps 0: Sets how often, in training steps, interim checkpoints are saved while baking. A value of 0 is intended to skip them, so that only the final model is kept.

- --threads 8: The number of helpers in our kitchen, that is, worker threads that speed up the preparation (preprocessing) of the data.

- --overwrite_cache & --overwrite_output_dir: Start fresh instead of reusing leftovers from previous bakes. The first rebuilds the cached preprocessed data, and the second allows writing into an output directory that already exists.
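The interaction between --max_seq_length and --doc_stride is easier to see with numbers. The following is a simplified sketch of the sliding-window chunking used for long passages, modeled on the original BERT SQuAD preprocessing. It assumes that three positions are reserved for special tokens and that the window advances by doc_stride tokens. The function `sliding_windows` is hypothetical, and the exact behavior of your copy of run_squad.py may differ.

```python
def sliding_windows(num_doc_tokens, num_query_tokens,
                    max_seq_length=384, doc_stride=128):
    """Return (start, end) token offsets of each passage chunk."""
    # Space left for the passage after the question and the three
    # special tokens ([CLS], [SEP], [SEP]); an assumption of this sketch.
    max_doc_tokens = max_seq_length - num_query_tokens - 3
    if max_doc_tokens <= 0:
        raise ValueError("question is too long for max_seq_length")

    windows = []
    start = 0
    while start < num_doc_tokens:
        length = min(num_doc_tokens - start, max_doc_tokens)
        windows.append((start, start + length))
        if start + length == num_doc_tokens:
            break  # the last chunk reached the end of the passage
        # Advance by the stride, so consecutive chunks overlap.
        start += min(length, doc_stride)
    return windows


if __name__ == "__main__":
    # A 1000-token passage with a 13-token question.
    for window in sliding_windows(1000, 13):
        print(window)
```

With the defaults above, each chunk holds up to 368 passage tokens and starts 128 tokens after the previous one, so neighboring chunks share most of their content. A smaller stride means more overlap and more training features per passage.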

Troubleshooting

If you encounter any issues during this process, here are some common solutions:

- Ensure that all file paths (training and prediction files) are correct and accessible.

- Check if you have enough GPU memory for the specified batch size.

- Confirm that your environment has all necessary libraries installed, such as TensorFlow or PyTorch.

- Consult the model documentation for specific dependencies and installation guidelines.
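The first and third checks above can be automated with a small preflight script. This is a minimal sketch using only the standard library. The helper names `missing_files` and `missing_packages` are illustrative, and the package names in the usage note are assumptions about what your copy of run_squad.py imports. It does not check GPU memory.

```python
import importlib.util
from pathlib import Path


def missing_files(paths):
    """Return the paths that do not point to an existing file."""
    return [str(p) for p in map(Path, paths) if not p.is_file()]


def missing_packages(names):
    """Return the top-level package names that cannot be imported."""
    return [name for name in names
            if importlib.util.find_spec(name) is None]
```

For example, `missing_files(["train-v2.0.json", "dev-v2.0.json"])` and `missing_packages(["torch", "transformers"])` should both return empty lists before you start training, assuming those are the libraries your script depends on.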

Conclusion

Building a BERT-Small CORD-19 model fine-tuned on SQuAD 2.0 is a fascinating journey into the realm of AI and healthcare. By following these steps, you’ll be on your way to developing an effective tool that can assist in understanding medical literature.
